All Assignment Problems for Cohort 1¶

Future Problems¶

anything from here that we didn't cover: https://catonmat.net/summary-of-mit-introduction-to-algorithms

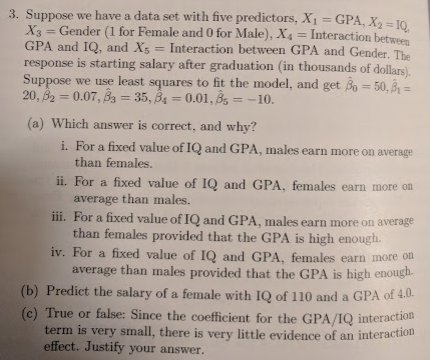

stimulated hodgkin huxley --> network of hodgkin huxley neurons --> neural net

weighted graph, optimization algos (knapsack, linear programming, randomized hill climbing, simulated annealing, genetic algorithms)

oscrank competition -- who can find the diff eq parameters to give the most and greatest oscillations? Catch is that you have to do it algorithmically

RL + games (tic tac toe, checkers, snake, …)

Future Problem¶

Solving a maze by representing each “fork in the road” as node in a graph

Future Problem¶

Order statistics (divide and conquer) https://catonmat.net/mit-introduction-to-algorithms-part-four

Future Problem¶

a. (20 points) Integrate your strategy player into your game. Your strategy player and game need to follow the specifications below, so that you'll be able to run the strategy players of your classmates as well.

- asdf

b. (10 points) Write a test tests/test_strategy_player.py that runs a game with your strategy player against the dumb player and verifies that your strategy player wins.

Future Problem¶

Rewrite your DumbPlayer so that it uses the following specifications:

same methods as your custom player

game does the actual movement of players

should still pass the original tests

Future Problem¶

Bayesian analysis: how many people need to get covid vaccine before you're sure that the probability of adverse event is lower than probability of death from covid? (First, the case when there are no adverse events. Then, the case when there are few adverse events.)

Future Problem¶

Multinomial logistic regression

Future Problem¶

State and justify a recurrence equation for the time complexity of merge sort on a list of $n$ elements. Then, use it to derive the time complexity of merge sort on a list of $n$ elements.

Future Problem¶

Plotting capability in dataframe -- scatter plot, line plot, histogram, etc

Future Problem¶

Hebbian learning

Future Problem¶

10-neuron HH network -- raster plots

Future Problem¶

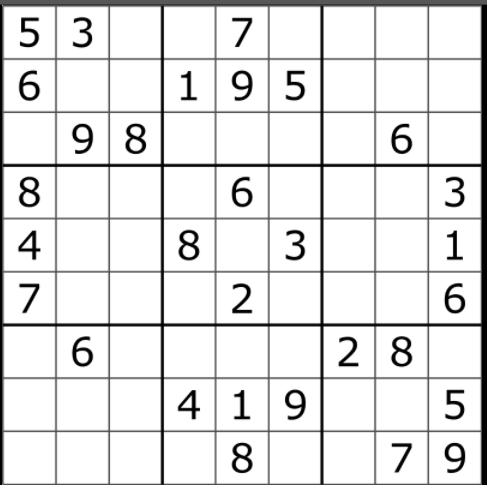

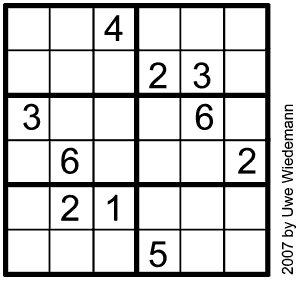

Use "intelligent search" to solve the following sudoku puzzle. For a refresher on "intelligent search", see problem 44-1.

Format your output so that when your code prints out the result, it prints out the result in the shape of a sudoku puzzle:

-------------------------

| 5 3 . | . 7 . | . . . |

| 6 . . | 1 9 5 | . . . |

| . 9 8 | . . . | . 6 . |

-------------------------

| 8 . . | . 6 . | . . 3 |

| 4 . . | 8 . 3 | . . 1 |

| 7 . . | . 2 . | . . 6 |

-------------------------

| . 6 . | . . . | 2 8 . |

| . . . | 4 1 9 | . . 5 |

| . . . | . 8 . | . 7 9 |

-------------------------Future Problem¶

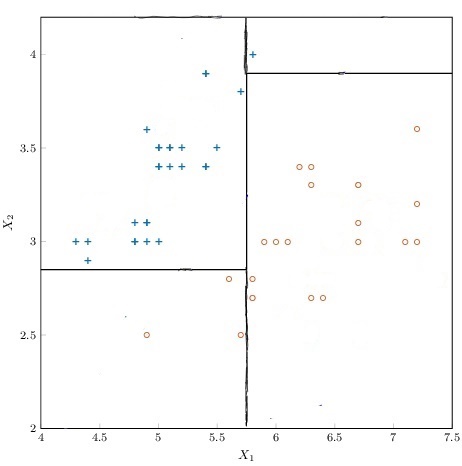

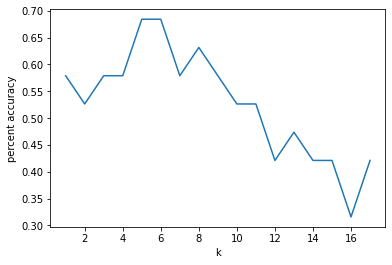

use kNN to predict acceptance on sample college admissions dataset; compare with results from logistic regressor

Future Problem¶

Download sports prediction data (or movie prediction data), clean it up, make predictions using linear regression / logistic regression / Decision tree / random forest

Future Problem¶

boosting

Future Problem¶

KMeans class

Future Problem¶

5-neuron HH network on a graph

Future Problem¶

KMeans clustering by hand

Future Problem¶

DataFrame - left joins

Future Problem¶

Location: simulation/analysis/hebbian_learning.py

Grading: 10 points

Spike-timing dependent plasticity -- causal updates

$$\begin{align*} \dfrac{\textrm d w_{\textrm{pre},\textrm{post}}}{\textrm dt} &= \int_{\delta}^{-\delta} V_\textrm{post}(t) V_\textrm{pre}(t - \tau) \cdot \tau e^{-|\tau / 10 \, \textrm{ms}|} \, \textrm d\tau \end{align*}$$Future Problem¶

Location: simulation/analysis/hebbian_learning.py

Grading: 10 points

Update weight from presynaptic neuron to postsynaptic neuron.

Hebbian weight updates: fire together, wire together

$$ \dfrac{\textrm d w_{\textrm{pre},\textrm{post}}}{\textrm dt} = \int_{\delta}^{-\delta} V_\textrm{post}(t) V_\textrm{pre}(t - \tau) \cdot e^{-|\tau/10\, \textrm{ms}|} \, \textrm d\tau $$Future Problem¶

Location: simulation/analysis/different_network_types.py

Grading: 10 points

Generate raster plots for: small world, scale free, ...

Future Problem¶

PageRank by hand. Later, implement in directed graph.

https://www.coursera.org/lecture/networks-illustrated/pagerank-example-calculation-iSfuF

Future Problem¶

Location: simulation/analysis/10-neuron-network.py

Grading: 10 points

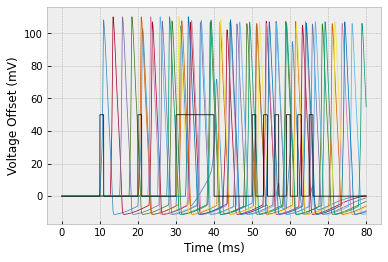

a. Simulate a 10-neuron network with connectivity $1 \to 2 \to 3 \to \cdots \to 10.$ You should get the following result:

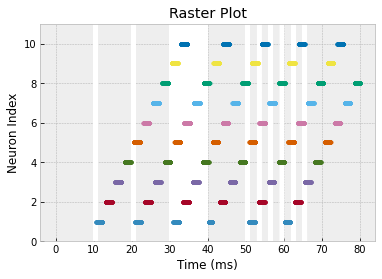

b. The plot above is really hard to interpret! So let's plot it a way that makes the most important information more clear. The most important information is when the neurons spiked. We'll say that a spike occurs whenever $V > 50 \, \textrm{mV}.$

To clearly display the spikes, let's change the $y$-axis to the neuron index and make a dot whenever $V > 50 \, \textrm{mV}.$ To show the electrode stimuli, let's color the plot white whenever the electrode is on.

To get the white background, you can plot a function that alternates between $0$ and $\textrm{max_neuron_index}+1$ whenever the electrode is on.

To plot dots, just pass

'.'as a parameter. To restrict the $y$-limits of the plot, useplt.ylim(min_y, max_y).

You should get the following result:

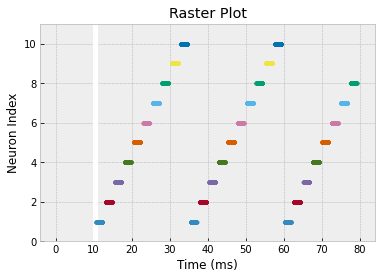

c. Find a network configuration that yields the following raster plot. Note that the stimulus has been changed so that it is just a single pulse at the beginning.

Future Problem¶

KFoldCrossValidator class - should work with kNearestNeighbors

Future Problem¶

PageRank

Future Problem¶

Constructing small-world & scale-free networks

Future Problem¶

neural net

Future Problem¶

NOTE: NEED TO MODIFY EULERESTIMATOR TO MAKE THIS EASIER TO IMPLEMENT

- what variables do we need to store, and what values of them do we need to store?

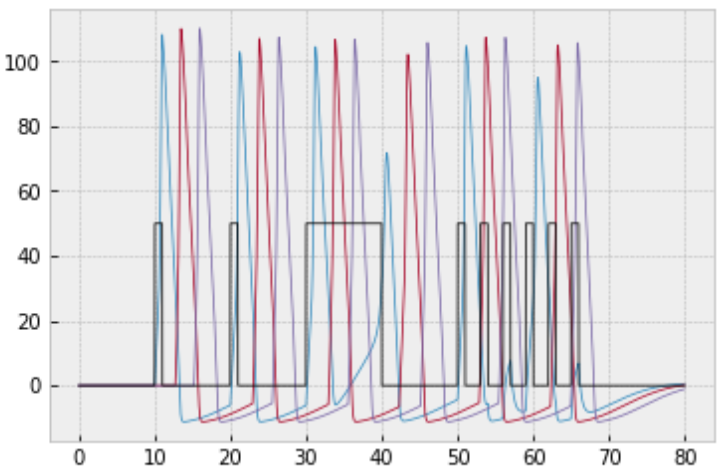

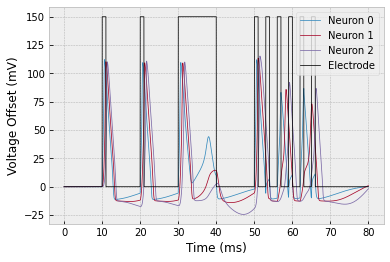

When a neuron fires, the action potential does not reach the synapse instantaneously. There is a small delay depending on how far away the synapse is. Let's say the delay is about $2 \, \textrm{ms}.$ Then we have

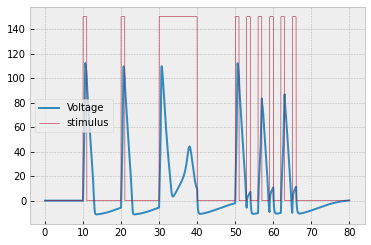

$$\dfrac{\textrm dV}{\textrm dt} = \underbrace{\dfrac{1}{C} \left[ s(t) - I_{\text{Na}}(t) - I_{\text K}(t) - I_{\text L}(t) \right]}_\text{neuron in isolation} + \underbrace{\dfrac{1}{C} \left( \sum\limits_{\begin{matrix} \textrm{synapses from} \\ \textrm{other neurons} \\ \textrm{with } V(t-2) > 50 \end{matrix}} V_{\text{other neuron}}(t-2) \right)}_\text{interactions with other neurons}.$$Taking the above changes into account, simulate 3 neurons connected in the fashion $1 \to 2 \to 3,$ where neuron $1$ receives the following electrode stimulus: $$ s(t) = \begin{cases} 50, & t \in [10,11] \cup [20,21] \cup [30,40] \cup [50,51] \cup [53,54] \\ & \phantom{t \in [} \cup [56,57] \cup [59,60] \cup [62,63] \cup [65,66] \\ 0 & \text{otherwise} \end{cases} $$

You should get the following result:

Future Problem¶

AND translate your pseudocode for your strategy into an actual strategy class for your strategy

Future Problem¶

SA, RHC, GA on 8 queens, TSP, knapsack

Future Problem¶

Simulated annealing & RHC on knapsack problem

Future Problem¶

Simulated annealing & RHC on TSP

Future Problem¶

simulated annealing; compare with RHC on 8 queens

Future Problem¶

A* search

Future Problem¶

solving sudoku puzzle using backtracking (the extra credit problem from first semester)

Future Problem¶

min-cut algorithm in weighted graph

Future Problem¶

Kruskal's algorithm in weighted graph

Future Problem¶

Weighted graph class using Dijkstra's algorithm for shortest path method

Future Problem¶

Euler estimator -- keep track of lag variables

generalize the idea of points -- a point takes the form point[variable_name][num_lookback_steps], where each component has a max number of lookback steps (defined upon intialization). Then we can reference in our derivatives: x['voltage1'][2/step_size] for delay; x['voltage2'][0] for no delay

Here, you will implement this and make sure it works with your SIR model. The new SIR model will assume it takes 1 week before it's possible to recover from the disease.

def dS_dt(t, x, step_size):

susceptible = x['susceptible'][0]

infected = x['infected'][0]

return -0.0003 * susceptible * infected

def dI_dt(t, x, step_size):

lookback_steps = round((1/52)/step_size)

susceptible = x['susceptible'][0]

infected = x['infected'][0]

if len(x['infected']) > lookback_steps:

infected_lookback = x['infected'][lookback_steps]

else:

infected_lookback = 0

return 0.0003 * susceptible * infected - 0.02 * infected_lookback

def dR_dt(t, x, step_size):

lookback_steps = round((1/52)/step_size)

if len(x['infected']) > lookback_steps:

infected_lookback = x['infected'][lookback_steps]

else:

infected_lookback = 0

return 0.02 * infected_lookback

derivatives = {

'susceptible': dS_dt,

'infected': dI_dt,

'recovered': dR_dt

}

max_lookback_times = {

'susceptible': 0,

'infected': 1/52,

'recovered': 0

}

starting_point = (0, {'susceptible': [1000], 'infected': [1], 'recovered': [0]}) # NOTE THAT THE VALUES ARE NOW ARRAYS

estimator = EulerEstimator(derivatives, starting_point, max_lookback_times)make a plot with 1 week minimum recovery period vs possible immediate recovery.

Future Problem¶

random forest

Future Problem¶

Random split decision tree

Future Problem¶

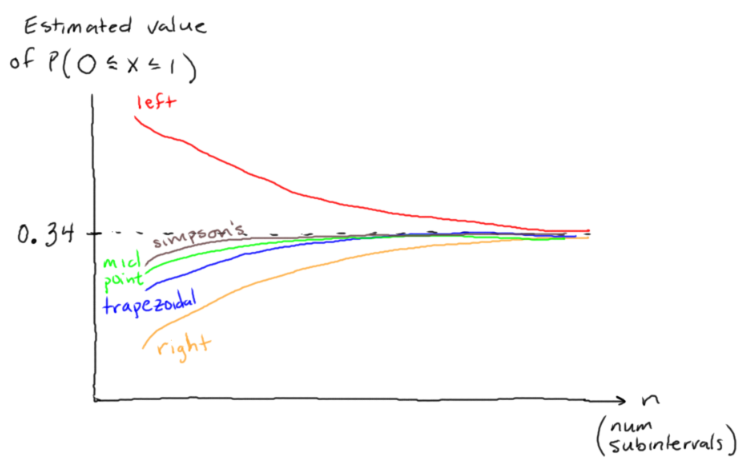

Normal distribution - estimate probabilities using left/right Riemann sums, midpoint rule, trapezoidal rule, Simpson's method

Future Problem¶

tables.query("""

SELECT columnName1, columnName2, ..., columnNameN

FROM tableName

WHERE

condition1 AND

condition2 OR

condition3 AND

...

conditionM

ORDER BY

columnNameA ASC

columnNameB DES

columnNameC ASC

...

""")Future Problem¶

write SQL-like query language from scratch on dictionary of dataframes

first task:

tables.query("""

SELECT columnName1, columnName2, ..., columnNameN

FROM tableName

ORDER BY

columnNameA ASC

columnNameB DES

columnNameC ASC

...

""")Test (use Kaggle toy dataset)

df = DataFrame(

...

)

sql = SQLWorkspace({

'words': words_df

})

sql.queryFuture Problem¶

DataFrame.from_csv(filename)

Future Problem¶

Supplementary problems -- have C&M catch up on sqlzoo and probability for next assignment, so that both classes can share supplementary problems.

Make progress on these each time we don't have quiz corrections. Take screenshot of success, and paste your code into overleaf. Do 3 problems of each category. Synchronize it between classes (c&m can catch up in a day while ML does game refactoring)

- https://www.hackerrank.com/domains/cpp

- https://www.hackerrank.com/domains/shell

- https://sqlzoo.net/ (left off module 3 question 11)

I'll come up with some haskell problems to go along with the reading; start out here:

- http://learnyouahaskell.com/starting-out; put on repl.it (https://repl.it/new/haskell)

Include pics of problems - 2 each time (each from different chapter)

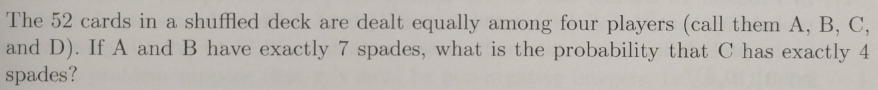

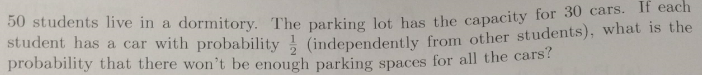

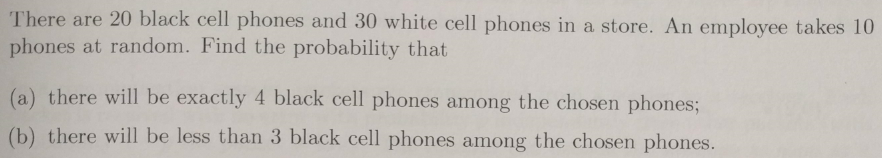

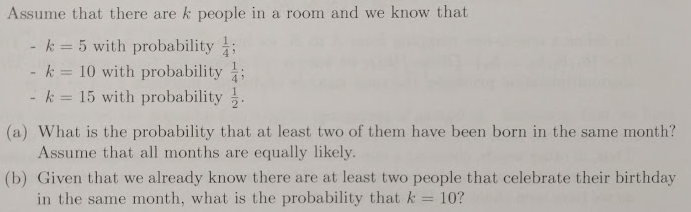

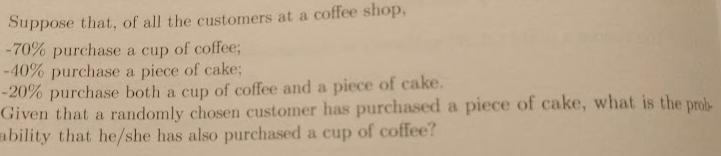

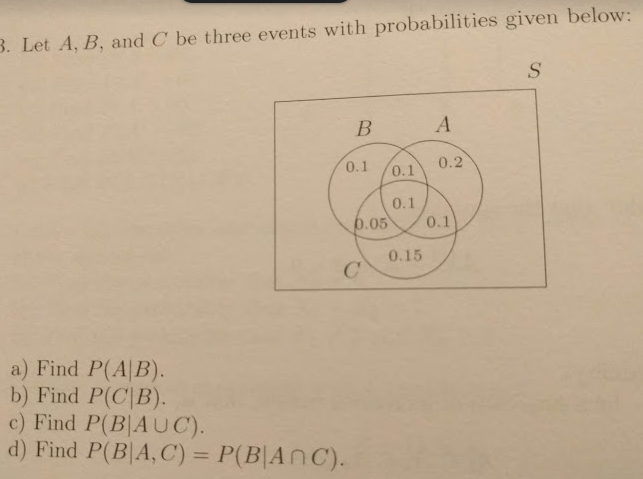

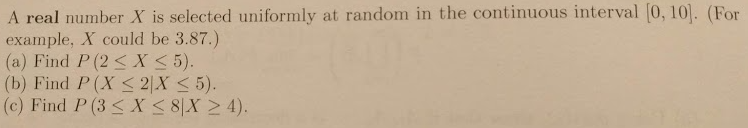

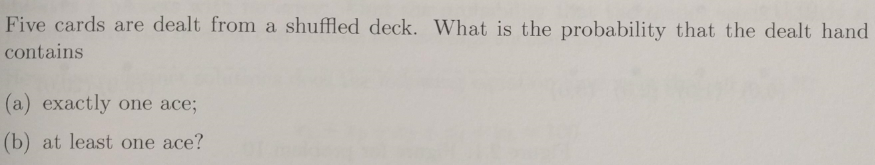

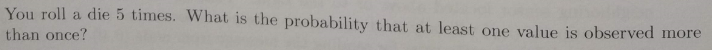

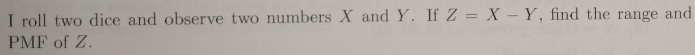

- Probability / statistics

- Operations research

Future Problem¶

asdf

Future Problem¶

Estimated Time: 20 minutes

Grade Weighting: 20%

Complete your code review. Submit links to the issues that you made. See the new code review procedure here: https://www.eurisko.us/resources/#code-reviews

Resolve 1 issue that has been made on your own code.

Future Problem¶

Exchange dumbplayer/combatplayer with classmates and make sure tests still pass. If any test doesn't pass, figure out whether it's your game's fault or the classmate's strategy's fault. If it's your game's fault, fix it.

Future Problem¶

Future Problem¶

Regular problem in which we take a dataset and do a prediction task using all the models we've built

Future Problem¶

single-layer perceptron

Future Problem¶

Random forest improvements

do it with a messier / more high-dimensional dataset

each tree only selects a subset of features

Future Problem¶

Write another test for decision tree with MANY features, much depth (?)

Future Problem¶

machine-learning/analysis/8_queens_hill_climbing.py

Solve the 8-queens problem using hill climbing, randomized hill climbing, and simulated annealing

Objective function: number of pairs of queens that are on same row, column, or diagonal

initialize randomly; move 1 queen 1 space

Randomized hill climbing: Repeatedly select a neighbor at random, and decides (based on the amount of improvement in that neighbor) whether to move to that neighbor or to examine another. For our purposes, use threshold = increase objective function by 2.

Random-restart hill climbing (aka "shotgun" hill climbing) - It iteratively does hill-climbing, each time with a random initial condition $x_{0}.$ The best $x_{m}$ is kept: if a new run of hill climbing produces a better $x_{m}$ than the stored state, it replaces the stored state.

run each 100 times; compute average running time; compute average ending value of the objective function; compare. Write up result in overleaf.

Plot each trial on same graph; really thin lines so all 300 can be overlaid

Future Problem¶

Finally, we'll refactor our random forest into a proper random forest

now that the trees are faster, we can afford to bring back gini. But we don't want to force

- random decision tree should select random feature, but we should still use the gini metric to find the best split in the randomly selected set of columns

put kNN and naive bayes and linear regressor in the comparison as well

Future Problem¶

random-restart hill climbing

simulated annealing

hash table

A* search

Dijkstra's algorithm

Future Problem¶

b. Create [YourNameHere]Player in [your_name_here]_player.py. In tests/test_[your_name_here]_player.py, ensure that your custom player beats both DumbPlayer and CombatPlayer.

On Wednesday's assignment, we'll conduct a practice tournament for everyone's players to compete.

- If you want to prepare your player for the tournament, you can conduct practice battles against your classmates over the weekend.

On Friday's assignment, we'll have the real first tournament

- The winner of the tournament will get an extra 20% on the assignment, and 2nd place will get an extra 10%.

Over the weekend, write up about how your strategy works and what kinds of problems you want to solve

Future Problem¶

Echo server

Run your analysis from 77-1 again, using max_depth=3 for all your decision trees. Post your results on #results, and state how long it took you to train the models now that you're using the max_depth setting.

b. test cases for random forest

Future Problem¶

socket connections -- build calculator

Future Problem¶

Create your custom strategy. Here is an example strategy that (I think) is really simple and doesn't do anything too dumb. Make sure that your custom strategy defeats it.

class ExampleStrategy:

# buys as many scouts as possible and sends them directly

# towards the opponent homeworld

def __init__(self, player_index):

self.player_index = player_index

def will_colonize_planet(self, coordinates, hidden_game_state):

return True

def decide_ship_movement(self, unit_index, hidden_game_state):

myself = hidden_game_state['players'][self.player_index]

opponent_index = 1 - self.player_index

opponent = hidden_game_state['players'][opponent_index]

unit = myself['units'][unit_index]

x_unit, y_unit = unit['coords']

x_opp, y_opp = opponent['home_coords']

translations = [(0,0), (1,0), (-1,0), (0,1), (0,-1)]

best_translation = (0,0)

smallest_distance_to_opponent = 999999999999

for translation in translations:

delta_x, delta_y = translation

x = x_unit + delta_x

y = x_unit + delta_y

dist = abs(x - x_opp) + abs(y - y_opp)

if dist < smallest_distance_to_opponent:

best_translation = translation

smallest_distance_to_opponent = dist

return best_translation

def decide_purchases(self, hidden_game_state):

myself = hidden_game_state['players'][self.player_index]

cp = myself['cp']

homeworld_coords = [unit['coords'] for unit in myself['units'] if unit['type'] == 'Homeworld'][0]

shipsize_tech_level = myself['technology']['shipsize']

hullsize_capacity_per_shipyard = 0.5 + 0.5 * shipsize_tech_level

shipyards_at_homeworld = [unit for unit in myself['units'] if unit['type'] == 'Shipyard' and unit['coords'] == homeworld_coords]

hullsize_capacity = hullsize_capacity_per_shipyard * len(shipyards_at_homeworld)

scout_data = hidden_game_state['unit_data']['Scout']

scout_cost = scout_data['cp_cost']

scout_hullsize = scout_data['hullsize']

purchases = {'units': [], 'technology': []}

while cp > scout_cost and hullsize_capacity > scout_hullsize:

pair = ('Scout', homeworld_coords)

purchases['units'].append(pair)

return purchases

def decide_removal(self, hidden_game_state):

# remove unit that's furthest from enemy

myself = hidden_game_state['players'][self.player_index]

opponent_index = 1 - player_index

opponent = hidden_game_state['players'][opponent_index]

x_opp, y_opp = opponent['home_coords']

furthest_unit_index = 0

furthest_distance_to_opponent = 999999999999

for unit_index, unit in enumerate(myself['units']):

x_unit, y_unit = unit['coords']

dist = abs(x_unit - x_opp) + abs(y_unit - y_opp)

if dist > furthest_distance_to_opponent:

furthest_unit_index = unit_index

furthest_distance_to_opponent = dist

return furthest_unit_index

def decide_which_unit_to_attack(self, hidden_game_state_for_combat, combat_state, coords, attacker_index):

# attack opponent's first ship in combat order

combat_order = combat_state[coords]

player_indices = [unit['player_index'] for unit in combat_order]

opponent_index = 1 - self.player_index

for combat_index, unit in enumerate(combat_order):

if unit['player_index'] == opponent_index:

return combat_index

def decide_which_units_to_screen(self, hidden_game_state_for_combat, combat_state, coords):

combat_order = combat_state[coords]

opponent_index = 1 - self.player_index

player_indices = [unit['player_index'] for unit in combat_order]

num_own_ships = len([n for n in player_indices if n == self.player_index])

num_opponent_ships = len([n for n in player_indices if n == opponent_index])

max_num_to_screen = max(0, num_own_ships - num_opponent_ships)

indices_of_own_ships = [i for i,unit in enumerate(combat_order) if unit['player_index'] == self.player_index]

return indices_of_own_ships[-max_num_to_screen:]Future Problem¶

https://www.learncpp.com/ -- start with ch9

Future Problem¶

Fix this problem to use vectors

Implementation instructions:

Even if you know a more mathematically elegant way to implement this function, please do it according to the following specifications. The goal is to give you some practice working with arrays.

Helper functions:

Create a recursive function

kthFibonacciNumber(int k)that computes thekth Fibonacci number.Create a function

kthPartialSum(int k)that creates an

computes the sum of the first k Fibonacci numbers.

Then, in the primary function metaFibonacciSum(int n):

Create an array containing the Fibonacci numbers $a_0, a_1, ..., a_n.$

Create an array containing the partial sums $S_{a_0}, S_{a_1}, ..., S_{a_n}.$

Compute the sum of the partial sums array.

Helpful resource: https://www.learncpp.com/cpp-tutorial/arrays-and-loops/

Code template:

# include <iostream>

# include <cassert>

int kthFibonacciNumber(int k)

{

// your code here

}

int kthPartialSum(int k)

{

// your code here

}

int metaFibonacciSum(int n)

{

// your code here

}

int main()

{

std::cout << "Testing...\n";

assert(metaFibonacciSum(6) == 74);

std::cout << "Success!";

return 0;

}Debugging note: A failed assert will obliterate any messages that would otherwise be printed out on the same line. So if you want a message to print even in the event of a failed assert, you have to put \n at the end of it so that the assert gets moved to the next line of output.

#include <iostream>

#include <cassert>

int main()

{

std::cout << "This line will print\n";

assert(2+2==5);

}#include <iostream>

#include <cassert>

int main()

{

std::cout << "This line will NOT print";

assert(2+2==5);

}Future Problem¶

strategy problem writeup; prepare for meeting with Prof. Wierman

8 queens -- random restart hill climbing

game level 3 implementation

randomized hill climbing applied to game level 3 (too many possibilities to simulate them all)

Future Problem¶

neural net with hidden layer and rectified linear units (ReLUs)

Future Problem¶

generalize neural net to any number of inputs & any number of outputs

then fit the sandwich dataset with linear and logistic neural nets

extend hash table

Game level 4

- Colonization, with 2 planets in middle of board but on opposite sides

Future:

- other ship types, miners, scuttling, screening

Future Problem¶

Intro to linear programming

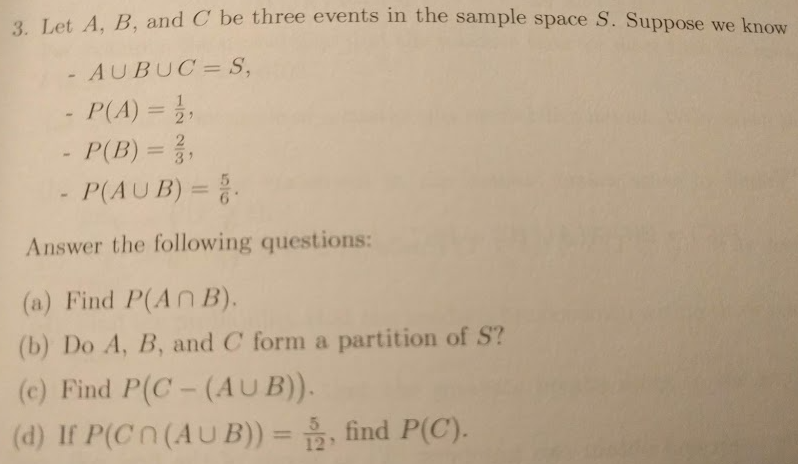

Problem 97-1¶

This problem is the beginning of some more involved machine learning tasks. To ease the transition, this will be the only problem on this assignment.

This problem is just as important as space empires and neural nets, and the modeling techniques covered will 100% be on future quizzes and the final. Be sure to do this problem well. If you've run into any issues with your space empires simulations, DO THIS PROBLEM FIRST before you go back to space empires.

Make an account on Kaggle.com so that we can walk through a Titanic prediction task.

Go to https://www.kaggle.com/c/titanic/data, scroll down to the bottom, and click "download all". You'll get a zip file called

titanic.zip.Upload

titanic.zipintomachine-learning/datasets/titanic/. Then, rununzip machine-learning/datasets/titanic/titanic.zipin the command line to unzip the file.This gives us 3 files:

train.csv,test.csv, andgender_submission.csv. The filetrain.csvcontains data about a bunch of passengers along with whether or not they survived. Our goal is to usetrain.csvto build a model that will predict the outcome of passengers intest.csv(for which the survival data is not given).IMPORTANT: To prevent confusion, rename

train.csvtodataset_of_knowns.csv, renametest.csvtounknowns_to_predict.csv, and renamegender_submission.csvtopredictions_from_gender_model.csv.The file

predictions_from_gender_model.csvis an example of predictions from a really, really basic model: if the passenger is female, predict that they survived; if the passenger is male, predicte that they did not survive.

To build a model, we will proceed with the following steps:

Feature Selection - deciding which variables we want in our model. This is usually a subset of the original number of features.

Model Selection - ranking our models from best to worst, based on cross-validation performance. (We'll train each model on half the data, use it to predict the other half of the data, and see how accurate it is.)

Submission - taking our best model, training it on the full

dataset_of_knowns.csv, running it onunknowns_to_predict.csv, generating apredictions.csvfile, and uploading it to Kaggle.com for scoring.

For this problem, you will need to write what you did for each of these steps in an Overleaf doc (kind of like you would in a lab journal). So, open up one now and let's continue.

Feature Engineering¶

In your Overleaf doc, create a section called "Feature Selection". Make a bulleted list of all the features along with your justification for using or not using the feature in your model.

Important: There is a data dictionary at https://www.kaggle.com/c/titanic/data that describes what each feature means.

It will be helpful to look at the actual values of the variables as well. For example, here are the first 5 records in the dataset:

PassengerId,Survived,Pclass,Name,Sex,Age,SibSp,Parch,Ticket,Fare,Cabin,Embarked

1,0,3,"Braund, Mr. Owen Harris",male,22,1,0,A/5 21171,7.25,,S

2,1,1,"Cumings, Mrs. John Bradley (Florence Briggs Thayer)",female,38,1,0,PC 17599,71.2833,C85,C

3,1,3,"Heikkinen, Miss. Laina",female,26,0,0,STON/O2. 3101282,7.925,,S

4,1,1,"Futrelle, Mrs. Jacques Heath (Lily May Peel)",female,35,1,0,113803,53.1,C123,S

5,0,3,"Allen, Mr. William Henry",male,35,0,0,373450,8.05,,SFor every feature that you decide to keep, give a possible theory for how the feature may help predict whether a passenger survived.

- For example, you should keep the

Agefeature because it's likely that younger passengers were given priority when boarding lifeboats.

For every feature that you decide to remove, explain why it 1) is irrelevant to the prediction task, or 2) would take too long to transform into a worthwhile feature.

- For example, you can remove the

ticketfeature because it's formatted so weirdly (e.g.A/5 21171). It's possible that there may be some information here, but it would take a while to figure out how to turn this into a worthwhile feature that we could actually plug into our model. (There's multiple parts to the ticket number and it's a combination of letters and numbers, so it's not straightforward how to use it.)

Model Selection¶

Split dataset_of_knowns.csv in half, selecting every other row for training and leaving the leftover rows for testing.

Fit several models to the training dataset:

Linear regressor - if output is greater than or equal to 0.5, predict category 1 (survived); if output is less than 0.5, predict category 0 (didn't survive). You may wish to include interaction terms that you think would be important, since linear regressors do not capture interactions by default.

Logistic regressor - same notes as above (for the linear regressor)

Gini decision tree - conveniently, decision trees predict categorical variables by default ("survived" has 2 categories, 0 and 1), and they also capture interactions by default (so don't include any interaction terms when you feed the data into the Gini tree). We'll try out 2 different models, one with

max_depth=5and another withmax_depth=10.Random forest - same notes as above (for the Gini decision tree). We'll try out 2 different models, one with

max_depth=3andnum_trees=1000, and another withmax_depth=5andnum_trees=1000.Naive Bayes - note that you'll need to take any features that are quantitative and re-label their values by categories. By default, you can just use 3 categories: "low", "mid", "high", where the lowest third of the data is re-labeled with the category "low", the highest third of the data is re-labeled with the category "high", and the middle third of the data is re-labeled with the category "mid".

- For example, suppose you had a variable that had values

[1,4,3,3,2,5,7,6,4]. Sorted, these values are[1,2,3,3,4,4,5,6,7]. So we'd relabel1,2,3as"low",5,6,7as"high", and4as"mid". So, the values[1,4,3,3,2,5,7,6,4]would get transformed into["low","mid","low","low","low","high","high","high","mid"]

- For example, suppose you had a variable that had values

k-Nearest Neighbors - for any variables that are quantitative, transform them as $$ x \to \dfrac{x - \min(x)}{\max(x) - \min(x)} $$ so that they fit into the interval

[0,1].For any variables that are categorical, leave them be. Use a "Mahnattan" distance metric (the sum of absolute differences). Note that if a variable is categorical, then their distance between 2 values should be counted as $0$ if they are the same and $1$ if they are different. We'll try 2 different models,k=5andk=10.The reason for the Manhattan distance metric instead of the Euclidean distance metric is so that differences between categorical variables do not drastically overpower differences between quantitative variables.

For example, suppose you had two data points

(0.2, 0.7, "dog", "red")and(0.5, 0.1, "cat", "red"). Then the distance would be as follows:distance = |0.2-0.5| + |0.7-0.1| + int("dog"!="cat") + int("red"!="red") = 0.3 + 0.6 + 1 + 0 = 1.9

Then, use these models to predict survival for the training dataset and the testing dataset separately. Make a table in your Overleaf doc that contains the resulting accuracy rates.

- For example, suppose there are 100 rows in the training dataset and 100 rows in the testing dataset (to be clear, the actual number of rows in your dataset will probably be different). You train your Gini decision tree with

max_depth=10on the training dataset, and then use it to predict on the testing dataset. You get 98 correct predictions on the training dataset and 70 correct predictions on the testing dataset (which, by the way, is an indication that you're overfitting -- yourmax_depthis probably too high). Then your table looks like this:

Model | Training Accuracy | Testing Accuracy

----------------------------------------------------------

Gini depth 10 | 98% | 70%To be clear, your table should have 9 rows, one for each model: linear, logistic, Gini depth 5, Gini depth 10, random forest depth 5, random forest depth 10, naive Bayes, 5-nearest-neighbors, 10-nearest-neighbors.

Submission¶

Take your best model (i.e. the one with the highest testing accuracy) and evaluate its predictions on unknowns_to_predict.csv.

Save your results as predictions.csv, and make sure they follow the exact same format as predictions_from_gender_model.csv.

- By "the exact same format", I mean THE EXACT SAME FORMAT. Make sure the header is exactly the same. Make sure that you're writing the

0's and1s as integers, not strings. Make sure that you include thePassengerIdcolumn. Make sure that the values in thePassengerIdcolumn match up exactly with those inpredictions_from_gender_model.csv. The only thing that should be different is the values in theSurvivedcolumn.

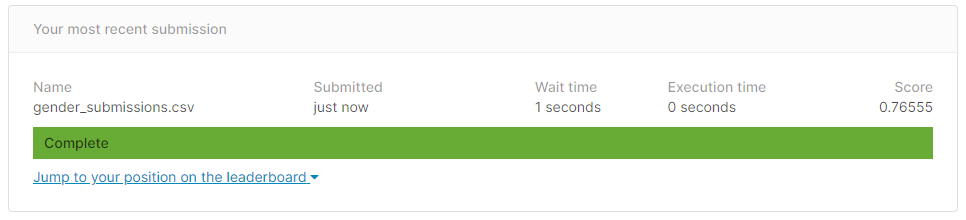

Click on the "Submit Predictions" button on the right side of the screen and submit your file predictions.csv. You should get a screen that looks like the image below, but has your predictions.csv instead of gender-submissions.csv. You should also get a higher score than 0.76555 (which is the baseline accuracy of the gender model).

Take a screenshot of this screen, post it on #machine-learning, and include it in your Overleaf writeup.

What to Turn In¶

Just the Overleaf writeup and a commit link to your machine-learning repo. That's it.

Problem 96-1¶

Space Empires¶

Once your strategy is finalized, Slack it to me and I'll upload it here.

https://github.com/eurisko-us/eurisko-us.github.io/tree/master/files/strategies/cohort-1/level-3

Then, once everyone's strategies are submitted, I'll make an announcement, and you can download the strategies from the above folder and run all pairwise battles for 100 games.

Go through

max_turns=100before declaring a draw. I think this should run quick enough, since we decreased from 500 games to 100 games, but if any 100-game matchups are taking longer than a couple minutes to run, then post about it and we'll figure something out.Put your data in the spreadsheet:

https://docs.google.com/spreadsheets/d/1zUqn5OvF3_U3XJ_d25vtBiFkRB3RgSQSXNv6wga8aeI/edit?usp=sharing

Remember to switch the order of the players halfway through the simulation so that each player goes first an equal number of times.

Seed the games: game 1 has seed 1, game 2 has seed 2, and so on. This way, we should all get exactly the same results.

As usual, there will be prizes:

- 1st place: 50 pts extra credit in the assignments category

- 2nd place: 30 pts extra credit in the assignments category

- 3rd place: 10 pts extra credit in the assignments category

Neural Nets¶

Compute $\dfrac{\textrm dE}{\textrm dw_{34}},$ $\dfrac{\textrm dE}{\textrm dw_{24}},$ $\dfrac{\textrm dE}{\textrm dw_{13}},$ $\dfrac{\textrm dE}{\textrm dw_{12}},$ and $\dfrac{\textrm dE}{\textrm dw_{01}}$ for the following network. (It's easiest to do it in that order.) Put your work in an Overleaf doc.

$$ \begin{matrix} & & n_4 \\ & \nearrow & & \nwarrow \\ n_2 & & & & n_3 \\ & \nwarrow & & \nearrow \\ & & n_1 \\ & & \uparrow \\ & & n_0 \\ \end{matrix} $$Show ALL your work! Also, make sure to use the simplest notation possible (for example, instead of writing $f_k(i_k),$ write $a_k$)

Check your answer by substituting the following values:

$$ y_\textrm{actual}=1 \qquad \begin{matrix} a_0 = 2 \\ a_1 = 3 \\ a_2 = 4 \\ a_3 = 5 \\ a_4 = 6 \end{matrix} \qquad \begin{matrix} f_0'(i_0) = 7 \\ f_1'(i_1) = 8 \\ f_2'(i_2) = 9 \\ f_3'(i_3) = 10 \\ f_4'(i_4) = 11 \end{matrix} \qquad \begin{matrix} w_{01} = 12 \\ w_{12} = 13 \\ w_{13} = 14 \\ w_{24} = 15 \\ w_{34} = 16 \end{matrix} $$You should get $$ \dfrac{\textrm dE}{\textrm d w_{34}} = 550, \qquad \dfrac{\textrm dE}{\textrm d w_{24}} = 440, \qquad \dfrac{\textrm dE}{\textrm d w_{13}} = 52800, \qquad \dfrac{\textrm dE}{\textrm d w_{12}} = 44550, \qquad \dfrac{\textrm dE}{\textrm d w_{01}} = 7031200. $$

Problem 96-2¶

Haskell¶

Write a recursive function merge that merges two sorted lists. To do this, you can check the first elements of each list, and make the lesser one the next element, then merge the lists that remain.

merge (x:xs) (y:ys) = if x < y

then _______

else _______

merge [] xs = ____

merge xs [] = ____

main = print(merge [1,2,5,8] [3,4,6,7,10])

-- should return [1,2,3,4,5,6,7,8,10]SQL¶

On sqltest.net, create a sql table by copying the following script:

Then, compute the average assignment score of each student. List the results from highest to lowest, along with the full names of the students.

This is what your output should look like:

fullname avgScore

Ishmael Smith 90.0000

Sylvia Sanchez 86.6667

Kinga Shenko 85.0000

Franklin Walton 80.0000

Harry Ng 78.3333Hint: You'll have to use a join and a group by.

Problem 96-3¶

Commit + Review¶

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT

assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.

(You don't have to resolve any issues on this assignment)

Submission Template¶

For your submission, copy and paste your links into the following template:

neural nets overleaf: _____

put your game results in the spreadsheet (but you don't have to paste the link)

Repl.it link to haskell file: _____

sqltest.net link: _____

commits: _____

(space-empires, assignment-problems)

Created issue: _____Problem 95-1¶

Neural Nets¶

Notation

$n_k$ - the $k$th neuron

$a_k$ - the activity of the $k$th neuron

$i_k$ - the input to the $k$th neuron. This is the weighted sum of activities of the parents of $n_k.$ If $n_k$ has no parents, then $i_k$ comes from the data directly.

$f_k$ - the activation function of the $k$th neuron. Note that in general, we have $a_k = f_k(i_k)$

$w_{k \ell}$ - the weight of the connection $n_k \to n_\ell.$ In your code, this is

weights[(k,l)].$E = (y_\textrm{predicted} - y_\textrm{actual})^2$ is the squared error that results from using the neural net to predict the value of the dependent variable, given values of the independent variables

$w_{k \ell} \to w_{k \ell} - \alpha \dfrac{\textrm dE}{\textrm dw_{k\ell}}$ is the gradient descent update, where $\alpha$ is the learning rate

Example

For a simple network $$ \begin{matrix} & & n_2 \\ & \nearrow & & \nwarrow \\ n_0 & & & & n_1,\end{matrix} $$ we have:

$$\begin{align*} y_\textrm{predicted} &= a_2 \\ &= f_2(i_2) \\ &= f_2(w_{02} a_0 + w_{12} a_1) \\ &= f_2(w_{02} f_0(i_0) + w_{12} f_1(i_1) ) \\ \\ \dfrac{\textrm dE}{\textrm dw_{02}} &= \dfrac{\textrm d}{\textrm dw_{02}} \left[ (y_\textrm{predicted} - y_\textrm{actual})^2 \right] \\ &= \dfrac{\textrm d}{\textrm dw_{02}} \left[ (a_2 - y_\textrm{actual})^2 \right] \\ &= 2(a_2 - y_\textrm{actual}) \dfrac{\textrm d}{\textrm dw_{02}} \left[ a_2 - y_\textrm{actual} \right] \\ &= 2(a_2 - y_\textrm{actual}) \dfrac{\textrm d }{\textrm dw_{02}} \left[ a_2 \right] \\ &= 2(a_2 - y_\textrm{actual}) \dfrac{\textrm d }{\textrm dw_{02}} \left[ f_2(i_2) \right] \\ &= 2(a_2 - y_\textrm{actual}) f_2'(i_2) \dfrac{\textrm d }{\textrm dw_{02}} \left[ i_2 \right] \\ &= 2(a_2 - y_\textrm{actual}) f_2'(i_2) \dfrac{\textrm d }{\textrm dw_{02}} \left[ w_{02} a_0 + w_{12} a_1 \right] \\ &= 2(a_2 - y_\textrm{actual}) f_2'(i_2) \dfrac{\textrm d }{\textrm dw_{02}} \left[ w_{02} a_0 + w_{12} a_1 \right] \\ &= 2(a_2 - y_\textrm{actual}) f_2'(i_2) a_0 \\ \\ \dfrac{\textrm dE}{\textrm dw_{12}} &= 2(a_2 - y_\textrm{actual}) f_2'(i_2) a_1 \end{align*}$$THE ACTUAL PROBLEM STATEMENT

Compute $\dfrac{\textrm dE}{\textrm dw_{23}},$ $\dfrac{\textrm dE}{\textrm dw_{12}},$ and $\dfrac{\textrm dE}{\textrm dw_{01}}$ for the following network. (It's easiest to do it in that order.) Put your work in an Overleaf doc.

$$ \begin{matrix} n_3 \\ \uparrow \\ n_2 \\ \uparrow \\ n_1 \\ \uparrow \\ n_0 \end{matrix} $$Show ALL your work! Also, make sure to use the simplest notation possible (for example, instead of writing $f_k(i_k),$ write $a_k$)

Check your answer by substituting the following values:

$$ y_\textrm{actual}=1 \qquad \begin{matrix} a_0 = 2 \\ a_1 = 3 \\ a_2 = 4 \\ a_3 = 5 \end{matrix} \qquad \begin{matrix} f_0'(i_0) = 6 \\ f_1'(i_1) = 7 \\ f_2'(i_2) = 8 \\ f_3'(i_3) = 9 \end{matrix} \qquad \begin{matrix} w_{01} = 10 \\ w_{12} = 11 \\ w_{23} = 12 \end{matrix} $$You should get $$ \dfrac{\textrm dE}{\textrm d w_{23}} = 288, \qquad \dfrac{\textrm dE}{\textrm d w_{12}} = 20736, \qquad \dfrac{\textrm dE}{\textrm d w_{01}} = 1064448. $$

Note: On the next couple assignments, we'll do the same exercise with progressively more advanced networks. This problem is relatively simple so that you have a chance to get used to working with the notation.

Space Empires¶

Finish creating your game level 3 strategy. (See problem 93-1 for a description of game level 3, which you should have implemented by now.) Then, implement the following strategy and run it against your level 3 strategy:

NumbersBerserkerLevel3- always buys as many scouts as possible, and each time it buys a scout, immediately sends it on a direct route to attack the opponent.

Post on #machine-learning with your strategy's stats against these strategies:

MyStrategy vs NumbersBerserker

- MyStrategy win rate: __%

- MyStrategy loss rate: __%

- draw rate: __%On the next assignment, we'll have the official matchups.

Problem 95-2¶

C++¶

Write a function calcSum(m,n) that computes the sum of the matrix product of an ascending $m \times n$ and a descending $n \times m$ array, where the array entries are taken from $\{ 1, 2, ..., mn \}.$ For example, if $m=2$ and $n=3,$ then

#include <iostream>

#include <cassert>

// define calcSum

int main() {

// write an assert for the test case m=2, n=3

}SQL¶

On sqltest.net, create the following tables:

CREATE TABLE age (

id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,

lastname VARCHAR(30),

age VARCHAR(30)

);

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('1', 'Walton', '12');

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('2', 'Sanchez', '13');

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('3', 'Ng', '14');

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('4', 'Smith', '15');

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('5', 'Shenko', '16');

CREATE TABLE name (

id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,

firstname VARCHAR(30),

lastname VARCHAR(30)

);

INSERT INTO `name` (`id`, `age`, `lastname`)

VALUES ('1', 'Franklin', 'Walton');

INSERT INTO `name` (`id`, `firstname`, `lastname`)

VALUES ('2', 'Sylvia', 'Sanchez');

INSERT INTO `name` (`id`, `firstname`, `lastname`)

VALUES ('3', 'Harry', 'Ng');

INSERT INTO `name` (`id`, `firstname`, `lastname`)

VALUES ('4', 'Ishmael', 'Smith');

INSERT INTO `name` (`id`, `firstname`, `lastname`)

VALUES ('5', 'Kinga', 'Shenko');Then, write a query to get the full names of the people, along with their ages, in alphabetical order of last name. The output should look like this:

Harry Ng is 14.

Sylvia Sanchez is 13.

Kinga Shenko is 16.

Ishmael Smith is 15.

Franklin Walton is 12.Tip: You'll need to use string concatenation and a join.

Problem 95-3¶

Commit + Review¶

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT

assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.

(You don't have to resolve any issues on this assignment)

Submission Template¶

For your submission, copy and paste your links into the following template:

Overleaf: _____

Repl.it link to C++ file: _____

sqltest.net link: _____

assignment-problems commit: _____

space-empires commit: _____

Created issue: _____Problem 94-1¶

Space Empires¶

Reconcile remaining discrepancies in game level 2 so we can crown the winners:

https://docs.google.com/spreadsheets/d/1zUqn5OvF3_U3XJ_d25vtBiFkRB3RgSQSXNv6wga8aeI/edit?usp=sharing

Then, write your first custom strategy for the level 3 game. We'll start matchups on Wednesday. We'll go through several rounds of matchups on this level since the game is starting to become more rich.

(We'll have the same extra credit prizes for 1st / 2nd / 3rd place)

Note: In decide_which_unit_to_attack, be sure to use 'player' and 'unit' instead of 'player_index' and 'unit_index'.

# combat_state is a dictionary in the form coordinates : combat_order

# {

# (1,2): [{'player': 1, 'unit': 0},

# {'player': 0, 'unit': 1},

# {'player': 1, 'unit': 1},

# {'player': 1, 'unit': 2}],

# (2,2): [{'player': 2, 'unit': 0},

# {'player': 3, 'unit': 1},

# {'player': 2, 'unit': 1},

# {'player': 2, 'unit': 2}]

# }Neural Net-Based Logistic Regressor¶

Make sure you get this problem done completely. Neural nets have a very steep learning curve and they're going to be sticking with us until the end of the semester.

a. Given $\sigma(x) = \dfrac{1}{1+e^{-x}},$ prove that $\sigma'(x) = \sigma(x) (1-\sigma(x)).$ Write this proof in an Overleaf doc.

b. In neural networks, neurons are often given "activation functions", where

node.activity = node.activation_function(weighted sum of inputs to node)In this problem, you'll extend your neural net to include activation functions. Then, you'll equip the neurons with activations so as to implement a logistic regressor.

>>> weights = {(0,2): -0.1, (1,2): 0.5}

>>> def linear_function(x):

return x

>>> def linear_derivative(x):

return 1

>>> def sigmoidal_function(x):

return 1/(1+math.exp(-x))

>>> def sigmoidal_derivative(x):

s = sigmoidal_function(x)

return s * (1 - s)

>>> activation_types = ['linear', 'linear', 'sigmoidal']

>>> activation_functions = {

'linear': {

'function': linear_function,

'derivative': linear_derivative

},

'sigmoidal': {

'function': sigmoidal_function,

'derivative': sigmoidal_derivative

}

}

>>> nn = NeuralNetwork(weights, activation_types, activation_functions)

>>> data_points = [

{'input': [1,0], 'output': [0.1]},

{'input': [1,1], 'output': [0.2]},

{'input': [1,2], 'output': [0.4]},

{'input': [1,3], 'output': [0.7]}

]

>>> for i in range(1,10001):

err = 0

for data_point in data_points:

nn.update_weights(data_point)

err += nn.calc_squared_error(data_point)

if i < 5 or i % 1000 == 0:

print('iteration {}'.format(i))

print(' gradient: {}'.format(nn.calc_gradient(data_point))

print(' updated weights: {}'.format(nn.weights))

print(' error: {}'.format(err))

print()

iteration 1

gradient: {(0, 2): 0.03184692266577955, (1, 2): 0.09554076799733865}

updated weights: {(0, 2): -0.10537885784041535, (1, 2): 0.4945789883636697}

error: 0.40480006957774683

iteration 2

gradient: {(0, 2): 0.031126202300065627, (1, 2): 0.09337860690019688}

updated weights: {(0, 2): -0.11072951375555531, (1, 2): 0.48919868238711295}

error: 0.3989945995186133

iteration 3

gradient: {(0, 2): 0.030367826123201307, (1, 2): 0.09110347836960392}

updated weights: {(0, 2): -0.11605116651884796, (1, 2): 0.4838609744178689}

error: 0.3932640005281893

iteration 4

gradient: {(0, 2): 0.029572207383720784, (1, 2): 0.08871662215116236}

updated weights: {(0, 2): -0.12134303561025003, (1, 2): 0.4785677220228999}

error: 0.3876106111541695

iteration 1000

gradient: {(0, 2): -0.04248103992359947, (1, 2): -0.12744311977079842}

updated weights: {(0, 2): -1.441870816044744, (1, 2): 0.6320712307086241}

error: 0.03103391055967604

iteration 2000

gradient: {(0, 2): -0.026576913835657988, (1, 2): -0.07973074150697396}

updated weights: {(0, 2): -1.8462575194764488, (1, 2): 0.8112377281576201}

error: 0.010469324799663702

iteration 3000

gradient: {(0, 2): -0.019389915442213898, (1, 2): -0.058169746326641694}

updated weights: {(0, 2): -2.0580006793189596, (1, 2): 0.903267622168482}

error: 0.004993174823452696

iteration 4000

gradient: {(0, 2): -0.01536481706566838, (1, 2): -0.04609445119700514}

updated weights: {(0, 2): -2.187017035077964, (1, 2): 0.9588032475551099}

error: 0.002982405174006053

iteration 5000

gradient: {(0, 2): -0.012858896793162088, (1, 2): -0.038576690379486266}

updated weights: {(0, 2): -2.2717393677429842, (1, 2): 0.995065996436664}

error: 0.00211991513136444

iteration 6000

gradient: {(0, 2): -0.011201146193726709, (1, 2): -0.033603438581180124}

updated weights: {(0, 2): -2.3298248394321606, (1, 2): 1.0198377357361068}

error: 0.0017156674543843792

iteration 7000

gradient: {(0, 2): -0.010062009597155228, (1, 2): -0.030186028791465685}

updated weights: {(0, 2): -2.370740520022862, (1, 2): 1.037244660012689}

error: 0.0015153961429219282

iteration 8000

gradient: {(0, 2): -0.009259319779522148, (1, 2): -0.027777959338566444}

updated weights: {(0, 2): -2.400083365137227, (1, 2): 1.0497070597284772}

error: 0.0014124679719747604

iteration 9000

gradient: {(0, 2): -0.008683873946383038, (1, 2): -0.026051621839149115}

updated weights: {(0, 2): -2.4213875864199608, (1, 2): 1.058744505427183}

error: 0.0013582149901490035

iteration 10000

gradient: {(0, 2): -0.00826631063707707, (1, 2): -0.024798931911231212}

updated weights: {(0, 2): -2.4369901278483534, (1, 2): 1.065357551487286}

error: 0.001329102258719855

>>> nn.weights

should be close to

{(0,2): -2.44, (1,2): 1.07}

because the data points all lie approximately on the sigmoid

output = 1/(1 + e^(-(input[0] * -2.44 + input[1] * 1.07)) )Super Important: You'll have to update your gradient descent to account for the activation functions. This will require using the chain rule. In our case, we'll have

squared_error = (y_predicted - y_actual)^2

d(squared_error)/d(weights)

= 2 (y_predicted - y_actual) d(y_predicted - y_actual)/d(weights)

= 2 (y_predicted - y_actual) [ d(y_predicted)/d(weights) - 0]

= 2 (y_predicted - y_actual) d(y_predicted)/d(weights)

y_predicted

= nodes[2].activity

= nodes[2].activation_function(nodes[2].input)

= nodes[2].activation_function(

weights[(0,2)] * nodes[0].activity

+ weights[(1,2)] * nodes[1].activity

)

= nodes[2].activation_function(

weights[(0,2)] * nodes[0].activation_function(nodes[0].input)

+ weights[(1,2)] * nodes[1].activation_function(nodes[1].input)

)

d(y_predicted)/d(weights[(0,2)])

= nodes[2].activation_derivative(nodes[2].input)

* d(nodes[2].input)/d(weights[(0,2)])

= nodes[2].activation_derivative(nodes[2].input)

* d(weights[(0,2)] * nodes[0].activity + weights[(1,2)] * nodes[1].activity)/d(weights[(0,2)])

= nodes[2].activation_derivative(nodes[2].input)

* nodes[0].activity

by the same reasoning as above:

d(y_predicted)/d(weights[(1,2)]

= nodes[2].activation_derivative(nodes[2].input)

* nodes[1].activityNote: If no activation_functions variable is passed in, then assume all activation functions are linear.

Problem 94-2¶

HashTable¶

Write a class HashTable that generalizes the hash table you previously wrote. This class should store an array of buckets, and the hash function should add up the alphabet indices of the input string and mod the result by the number of buckets.

>>> ht = HashTable(num_buckets = 3)

>>> ht.buckets

[[], [], []]

>>> ht.hash_function('cabbage')

2 (because 2+0+1+1+0+6+4 mod 3 = 14 mod 3 = 2)

>>> ht.insert('cabbage', 5)

>>> ht.buckets

[[], [], [('cabbage',5)]]

>>> ht.insert('cab', 20)

>>> ht.buckets

[[('cab', 20)], [], [('cabbage',5)]]

>>> ht.insert('c', 17)

>>> ht.buckets

[[('cab', 20)], [], [('cabbage',5), ('c',17)]]

>>> ht.insert('ac', 21)

>>> ht.buckets

[[('cab', 20)], [], [('cabbage',5), ('c',17), ('ac', 21)]]

>>> ht.find('cabbage')

5

>>> ht.find('cab')

20

>>> ht.find('c')

17

>>> ht.find('ac')

21SQL¶

This is a really quick problem, mostly just getting you to learn the ropes of the process we'll be using for doing SQL problems going forward (now that we're done with SQL Zoo).

On https://sqltest.net/, create table with the following script:

CREATE TABLE people (

id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,

name VARCHAR(30) NOT NULL,

age VARCHAR(50)

);

INSERT INTO `people` (`id`, `name`, `age`)

VALUES ('1', 'Franklin', '12');

INSERT INTO `people` (`id`, `name`, `age`)

VALUES ('2', 'Sylvia', '13');

INSERT INTO `people` (`id`, `name`, `age`)

VALUES ('3', 'Harry', '14');

INSERT INTO `people` (`id`, `name`, `age`)

VALUES ('4', 'Ishmael', '15');

INSERT INTO `people` (`id`, `name`, `age`)

VALUES ('5', 'Kinga', '16');Then select all teenage people whose names do not start with a vowel, and order by oldest first.

In order to run the query, you need to click the "Select Database" dropdown in the very top-right corner (so top-right that it might partially run off your screen) and select MySQL 5.6.

This is what your result should be:

id name age

5 Kinga 16

3 Harry 14

2 Sylvia 13Copy the link where it says "Link for sharing your example:". This is what you'll submit for your assignment.

Problem 94-3¶

There will be a quiz on Friday over things that we've done with C++, Haskell, SQL, and Neural Nets.

Commit + Review¶

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT

assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.

(You don't have to resolve any issues on this assignment)

Submission Template¶

For your submission, copy and paste your links into the following template:

Repl.it link to custom level 3 strategy: ____

Overleaf link to proof of derivative of sigmoid: ____

Repl.it link to neural network: ____

Repl.it link to hash table: ____

SQLtest.net link: ____

Commit link for space-empires repo: _____

Commit link for assignment-problems repo: _____

Commit link for machine-learning repo: _____

Created issue: _____Problem 93-1¶

Space Empires¶

Reconcile higlighted discrepancies

https://docs.google.com/spreadsheets/d/1zUqn5OvF3_U3XJ_d25vtBiFkRB3RgSQSXNv6wga8aeI/edit?usp=sharing

Implement game level 3

Regular (repeated) economic phases -- once every turn

Change the starting CP back to 0 (now that we have repeated economic phases, we no longer need the extra CP boost at the beginning).

3 movement rounds on each turn

7x7 board - starting positions are now (3,0) and (3,6)

Since we had to postpone the neural net problem, you can use the extra time to begin implementing your custom player for the level 3 game (we'll have the level 3 battles soon).

Problem 93-2¶

Hash Tables¶

Location: assignment-problems/hash_table.py

Under the hood, Python dictionaries are hash tables.

The most elementary (and inefficient) version of a hash table would be a list of tuples. For example, if we wanted to implement the dictionary {'a': [0,1], 'b': 'abcd', 'c': 3.14}, then we'd have the following:

list_of_tuples = [('a', [0,1]), ('b', 'abcd'), ('c', 3.14)]To add a new key-value pair to the dictionary, we'd just append the corresponding tuple to list_of_tuples, and to look up the value for some key, we'd just loop through list_of_tuples until we got to the tuple with the key we wanted (and return the value).

But searching through a long array is very slow. So, to be more efficient, we use several list_of_tuples (which we'll call "buckets"), and we use a hash_function to tell us which bucket to put the new key-value pair in.

Complete the code below to implement a special case of an elementary hash table. We'll expand on this example soon, but let's start with something simple.

array = [[], [], [], [], []] # has 5 empty "buckets"

def hash_function(string):

# return the sum of character indices in the string

# (where "a" has index 0, "b" has index 1, ..., "z" has index 25)

# modulo 5

# for now, let's just assume the string consists of lowercase

# letters with no other characters or spaces

def insert(array, key, value):

# apply the hash function to the key to get the bucket index.

# then append the (key, value) pair to the bucket.

def find(array, key):

# apply the hash function to the key to get the bucket index.

# then loop through the bucket until you get to the tuple with the desired key,

# and return the corresponding value.Here's an example of how the hash table will work:

>>> print(array)

array = [[], [], [], [], []]

>>> insert(array, 'a', [0,1])

>>> insert(array, 'b', 'abcd')

>>> insert(array, 'c', 3.14)

>>> print(array)

[[('a',[0,1])], [('b','abcd')], [('c',3.14)], [], []]

>>> insert(array, 'd', 0)

>>> insert(array, 'e', 0)

>>> insert(array, 'f', 0)

>>> print(array)

[[('a',[0,1]), ('f',0)], [('b','abcd')], [('c',3.14)], [('d',0)], [('e',0)]]Test your code as follows:

alphabet = 'abcdefghijklmnopqrstuvwxyz'

for i, char in enumerate(alphabet):

key = 'someletters'+char

value = [i, i**2, i**3]

insert(array, key, value)

for i, char in enumerate(alphabet):

key = 'someletters'+char

output_value = find(array, key)

desired_value = [i, i**2, i**3]

assert output_value == desired_valueShell¶

Complete these Shell coding challenges and submit screenshots. Each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

https://www.hackerrank.com/challenges/text-processing-in-linux-the-sed-command-3/problem

SQL¶

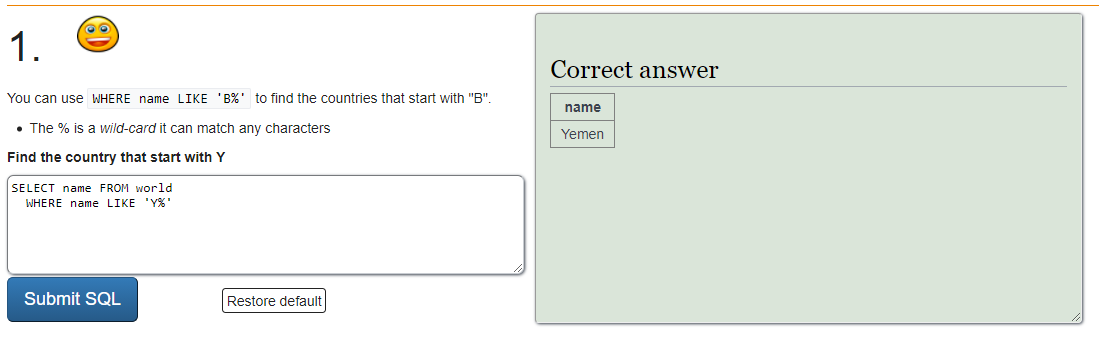

Complete these SQL coding challenges and submit screenshots. For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

https://sqlzoo.net/wiki/Using_Null (queries 7, 8, 9, 10)

Problem 93-3¶

Commit + Review¶

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT

assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.Resolve 1 GitHub issue on one of your own repositories.

Submission Template¶

For your submission, copy and paste your links into the following template:

Repl.it link to neural network: ____

Repl.it link to hash table: ____

Link to Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for assignment-problems repo: _____

Commit link for machine-learning repo: _____

Created issue: _____

Resolved issue: _____Problem 92-1¶

a. Once your strategy is finalized, Slack it to me and I'll upload it here.

https://github.com/eurisko-us/eurisko-us.github.io/tree/master/files/strategies/cohort-1/level-2

If your strategy is getting crushed by NumbersBerserker, keep in mind that it's okay to copy NumbersBerserker and then tweak it a little bit with your own spin. Your strategy should have some original component, but it does not need to be 100% original (or even mostly original).

Then, once everyone's strategies are submitted, download the strategies from the above folder and run all pairwise battles for 500 games.

Put your data in the spreadsheet:

https://docs.google.com/spreadsheets/d/1zUqn5OvF3_U3XJ_d25vtBiFkRB3RgSQSXNv6wga8aeI/edit?usp=sharing

Remember to switch the order of the players halfway through the simulation so that each player goes first an equal number of times.

Seed the games: game 1 has seed 1, game 2 has seed 2, and so on. This way, we should all get exactly the same results.

Assuming our games match up so that we can actually agree about who won, there will be prizes:

- 1st place: 50% extra credit on the assignment

- 2nd place: 30% extra credit on the assignment

- 3rd place: 10% extra credit on the assignment

b. Time for an introduction to neural nets! In this problem, we'll create a really simple neural network that is essentially a "neural net"-style implementation of linear regression. We'll start off with something simple and familiar, but we'll implement much more advanced models in the near future.

Note: It seems like we need to merge our graph library into our machine-learning library. So, let's do that. The src your machine-learning library should now look like this:

src/

- models/

- linear_regressor.py

- neural_network.py

- ...

- graphs/

- weighted_graph.py

- ...(If you have a better idea for the structure of our library, feel free to do it your way and bring it up for discussion during the next class)

Create a NeuralNetwork class that inherits from weighted graph. Pass in dictionary of weights to determine connectivity and initial weights.

>>> weights = {(0,2): -0.1, (1,2): 0.5}

>>> nn = NeuralNetwork(weights)

This is a graphical representation of the model:

nodes[2] ("output layer")

^ ^

/ \

weights[(0,2)] weights[(1,2)]

^ ^

/ \

nodes[0] nodes[1] ("input layer")To make a prediction, our simple neural net computes a weighted sum of the input values. (Again, this will become more involved in the future, but let's not worry about that just yet.)

>>> nn.predict([1,3])

1.4

behind the scenes:

assign nodes[0] a value of 1 and nodes[1] a value of 3,

and then return the following:

weights[(0,2)] * nodes[0].value + weights[(1,2)] * nodes[1].value

= -0.1 * 1 + 0.5 * 3

= 1.4If we know the output that's supposed to be associated with a given input, we can compute the error in the prediction.

We'll use the squared error, so that we can frame the problem of fitting the neural network as "choosing weights which minimize the squared error".

To find the weights which minimize the squared error, we can perform gradient descent. As we'll see in the future, calculating the gradient of the weights can get a little tricky (it requires a technique called "backpropagation"). But for now, you can just hard-code the process for this particular network.

>>> data_point = {'input': [1,3], 'output': [7]}

>>> nn.calc_squared_error(data_point)

31.36 [ because (7-1.4)^2 = 5.6^2 = 31.36 ]

>>> nn.calc_gradient(data_point)

{(0,2): -11.2, (1,2): -33.6}

behind the scenes:

squared_error = (y_actual - y_predicted)^2

d(squared_error)/d(weights)

= 2 (y_actual - y_predicted) d(y_actual - y_predicted)/d(weights)

= 2 (y_actual - y_predicted) [ 0 - d(y_predicted)/d(weights) ]

= -2 (y_actual - y_predicted) d(y_predicted)/d(weights)

remember that

y_predicted = weights[(0,2)] * nodes[0].value + weights[(1,2)] * nodes[1].value

so

d(y_predicted)/d(weights[(0,2)]) = nodes[0].value

d(y_predicted)/d(weights[(1,2)]) = nodes[1].value

Therefore

d(squared_error)/d(weights[(0,2)])

= -2 (y_actual - y_predicted) d(y_predicted)/d(weights[(0,2)])

= -2 (y_actual - y_predicted) nodes[0].value

= -2 (7 - 1.4) (1)

= -11.2

d(squared_error)/d(weights[(1,2)])

= -2 (y_actual - y_predicted) d(y_predicted)/d(weights[(1,2)])

= -2 (y_actual - y_predicted) nodes[1].value

= -2 (7 - 1.4) (3)

= -33.6Once we've got the gradient, we can update the weights using gradient descent.

>>> nn.update_weights(data_point, learning_rate=0.01)

new_weights = old_weights - learning_rate * gradient

= {(0,2): -0.1, (1,2): 0.5}

- 0.01 * {(0,2): -11.2, (1,2): -33.6}

= {(0,2): -0.1, (1,2): 0.5}

+ {(0,2): 0.112, (1,2): 0.336}

= {(0,2): 0.012, (1,2): 0.836}If we repeatedly loop through a dataset and update the weights for each data point, then we should get a model whose error is minimized.

Caveat: the minimum will be a local minimum, which is not guaranteed to be a global minimum.

Here is a test case with some data points that are on the line $y=1+2x.$ Our network is set up to fit any line of the form $y = \beta_0 \cdot 1 + \beta_1 \cdot x,$ where $\beta_0 = $ weights[(0,2)] and $\beta_1=$ weights[(1,2)].

Note that this line can be written as

output = 1 * input[0] + 2 * input[1]In this particular case, the weights should converge to the true values (1 and 2).

>>> weights = {(0,2): -0.1, (1,2): 0.5}

>>> nn = NeuralNetwork(weights)

>>> data_points = [

{'input': [1,0], 'output': [1]},

{'input': [1,1], 'output': [3]},

{'input': [1,2], 'output': [5]},

{'input': [1,3], 'output': [7]}

]

>>> for _ in range(1000):

for data_point in data_points:

nn.update_weights(data_point)

>>> nn.weights

should be really close to

{(0,2): 1, (1,2): 2}

because the data points all lie on the line

output = input[0] * 1 + input[1] * 2Once you've got your final weights, post them on #results.

Problem 92-2¶

Quiz Corrections¶

Originally I was going to put the hash table problem here, but I figured we should discuss it in class first. Also, we should do quiz corrections. So it will be on the next assignment instead.

For this assignment, please correct any errors on your quiz (if you got a score under 100%). You'll just need to submit your repl.it links again, with the corrected code.

Remember that we went through the quiz during class, so if you have any questions or need any help, look at the recording first.

Note: Since this quiz corrections problem is much lighter than the usual problem that would go in its place, there will be a couple more Shell and SQL problems than usual.

Shell¶

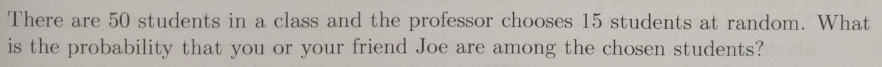

Complete these Shell coding challenges and submit screenshots. Each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

Resources:

http://www.robelle.com/smugbook/regexpr.html

https://www.gnu.org/software/sed/manual/html_node/Regular-Expressions.html

Problems:

https://www.hackerrank.com/challenges/text-processing-in-linux-the-grep-command-4/problem

https://www.hackerrank.com/challenges/text-processing-in-linux-the-grep-command-5/problem

https://www.hackerrank.com/challenges/text-processing-in-linux-the-sed-command-1/problem

https://www.hackerrank.com/challenges/text-processing-in-linux-the-sed-command-2/problem

SQL¶

Complete these SQL coding challenges and submit screenshots. For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

https://sqlzoo.net/wiki/Using_Null (queries 1, 2, 3, 4, 5, 6)

Problem 92-3¶

Commit + Review¶

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT

assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.Resolve 1 GitHub issue on one of your own repositories.

Submission Template¶

For your submission, copy and paste your links into the following template:

Repl.it link to neural network: ____

Repl.it links to quiz corrections (if applicable): _____

Link to Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for machine-learning repo: _____

Created issue: _____

Resolved issue: _____Problem 91-1¶

a. Re-run your decision tree on the sex prediction problem. Make 5 train-test splits of 80% train and 20% test, like we originally did. Now that your Gini trees match up from the previous assignment, they should match up here. Also, make sure to propagate any changes in your Gini tree to your random tree. Our random forest results should be pretty close as well.

b. Create a custom strategy for the level 2 game. Test it against NumbersBerserkerLevel2 and FlankerLevel2. On Wednesday's assignment, we'll have our strategies battle against each other.

Put your results in the usual spreadsheet:

https://docs.google.com/spreadsheets/d/1zUqn5OvF3_U3XJ_d25vtBiFkRB3RgSQSXNv6wga8aeI/edit?usp=sharing

Problem 91-2¶

Commit¶

Commit your code to Github.

We'll skip reviews on this assignment, to save you a bit of time.

Submission Template¶

For your submission, copy and paste your links into the following template:

Repl.it link to hash table: _____

Commit link for space-empires repo: _____

Commit link for assignment-problems repo: _____Problem 90-1¶

This weekend, your only primary problem is to resolve discrepancies in your Gini decision tree & games (both level 1 and level 2).

https://docs.google.com/spreadsheets/d/1zUqn5OvF3_U3XJ_d25vtBiFkRB3RgSQSXNv6wga8aeI/edit?usp=sharing

Please be sure to get the game discrepancies resolved, so that we can have our custom level 2 strategies battle next week. Then, I'll let Jason know we're ready to speak with Prof. Wierman about designing optimal strategies for our level 2 game.

Problem 90-2¶

C++¶

At the beginning of the year, we wrote a Python function called simple_sort that sorts a list by repeatedly finding the smallest element and appending it to a new list.

Now, you will sort a list in C++ using a similar technique. However, because working with arrays in C++ is a bit trickier, we will modify the implementation so that it only involves the use of a single array. The way we do this is by swapping:

- Find the smallest element in the array

- Swap it with the first element of the array

- Find the next-smallest element in the array

- Swap it with the second element of the array

- ...

For example:

array: [30, 50, 20, 10, 40]

indices to consider: 0, 1, 2, 3, 4

elements to consider: 30, 50, 20, 10, 40

smallest element: 10

swap with first element: [10, 50, 20, 30, 40]

---

array: [10, 50, 20, 30, 40]

indices to consider: 1, 2, 3, 4

elements to consider: 50, 20, 30, 40

smallest element: 20

swap with second element: [10, 20, 50, 30, 40]

---

array: [10, 20, 50, 30, 40]

indices to consider: 2, 3, 4

elements to consider: 50, 30, 40

smallest element: 30

swap with second element: [10, 20, 30, 50, 40]

...

final array: [10, 20, 30, 40, 50]Write your code in the template below.

# include <iostream>

# include <cassert>

int main()

{

int array[5]{ 30, 50, 20, 10, 40 };

// your code here

std::cout << 'Testing...\n';

assert(array[0]==10);

assert(array[1]==20);

assert(array[2]==30);

assert(array[3]==40);

assert(array[4]==50);

std::cout << 'Succeeded';

return 0;

}Shell¶

Complete these Shell coding challenges and submit screenshots. Each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

Resources:

http://www.thegeekstuff.com/2009/03/15-practical-unix-grep-command-examples/

Problems:

https://www.hackerrank.com/challenges/text-processing-in-linux-the-grep-command-1/problem

https://www.hackerrank.com/challenges/text-processing-in-linux-the-grep-command-2/problem

https://www.hackerrank.com/challenges/text-processing-in-linux-the-grep-command-3/problem

SQL¶

Complete these SQL coding challenges and submit screenshots. For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

https://sqlzoo.net/wiki/More_JOIN_operations (queries 13, 14, 15)

Problem 90-3¶

Commit + Review¶

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT

assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.Resolve 1 GitHub issue on one of your own repositories.

Submission Template¶

For your submission, copy and paste your links into the following template:

Repl.it link to C++ code: _____

Link to Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for space-empires repo: _____

Commit link for machine-learning repo: _____

Commit link for assignment-problems repo: _____

Created issue: _____

Resolved issue: _____Problem 89-1¶

On this problem, we'll do some debugging based on the results from our spreadsheet:

https://docs.google.com/spreadsheets/d/1zUqn5OvF3_U3XJ_d25vtBiFkRB3RgSQSXNv6wga8aeI/edit?usp=sharing

Debugging¶

a. Compare your results to your classmates' results for Indices of misclassified data points (zero-indexed: the index of the first data point in the dataset would be index 0). If you and a classmate have different results, do some pair debugging to figure out what caused the difference and how you guys need to reconcile it.

b. Compare your results to your classmates' results for Flanker vs Berserker | Simulate 10 games with random seeds 1-10; list game numbers on which Flanker wins. If you and a classmate have different results, do some pair debugging to figure out what caused the difference and how you guys need to reconcile it.

c. Modify your level 2 game so that each player starts with 4 shipyards in addition to 3 scouts. (If a player doesn't start out with shipyards, then the NumberBerserker strategy can't actually do what it's intended to do.)

Then, re-run the game level 2 matchups and put your results in the sheet (put them on the sheet for the current assignment, #89).

Random Forest¶

d. Make the following adjustment to your random forest:

In your random decision tree, create a

training_percentageparameter that governs the percent of the training data that you actually use to fit the model.In our case, we have about 70 records, and in each test-train split, we're using 80% as training data, so that's about 56 records. Now, if we set

training_percentage = 0.3, then we randomly choose $0.3 \times 56 \approx 17$ records from the training data to actually fit the decision tree.When randomly selecting the records, use random selection with replacement. In other words, it's okay to select duplicate data records.

When you initialize the random forest, pass a

training_percentageparameter that, in turn, gets passed to the random decision trees.The reason why choosing

training_percentage < 1can be useful is that it speeds up the time to train the random forest, and also, it allows different models to get different "perspectives" on the data, thereby creating a more diverse "hive mind" (and higher diversity generally leads to higher performance when it comes to ensemble models, i.e. models consisting of many smaller sub-models)

e. On the sex prediction dataset, train the following models on the first half of the data and test on the second half of the data.

A single random decision tree with

max_depth = 4andtraining_percentage = 0.3.Random forest with 10 trees with

max_depth = 4andtraining_percentage = 0.3.Random forest with 100 trees with

max_depth = 4andtraining_percentage = 0.3.Random forest with 1,000 trees with

max_depth = 4andtraining_percentage = 0.3.Random forest with 10,000 trees with

max_depth = 4andtraining_percentage = 0.3.

Paste the accuracy into the spreadsheet.

Problem 89-2¶

Haskell¶

First, observe the following Haskell code which computes the sum of all the squares under 1000:

>>> sum (takeWhile (<1000) (map (^2) [1..]))

10416(If you don't see why this works, then run each part of the expression: first map (^2) [1..], and then takeWhile (<1000) (map (^2) [1..]), and then the full expression sum (takeWhile (<1000) (map (^2) [1..])).)

Now, recall the Collatz conjecture (if you don't remember it, ctrl+F "collatz conjecture" to jump to the problem where we covered it).

The following Haskell code can be used to recursively generate the sequence or "chain" of Collatz numbers, starting with an initial number n.

chain :: (Integral a) => a -> [a]

chain 1 = [1]

chain n

| even n = n:chain (n `div` 2)

| odd n = n:chain (n*3 + 1)Here are the chains for several initial numbers:

>>> chain 10

[10,5,16,8,4,2,1]

>>> chain 1

[1]

>>> chain 30

[30,15,46,23,70,35,106,53,160,80,40,20,10,5,16,8,4,2,1]Your problem: Write a Haskell function firstNumberWithChainLengthGreaterThan n that finds the first number whose chain length is at least n.

Check: firstNumberWithChainLengthAtLeast 15 should return 7.

To see why this check works, observe the first few chains shown below:

1: [1] (length 1)

2: [2,1] (length 2)

3: [3,10,5,16,8,4,2,1] (length 8)

4: [4,2,1] (length 3)

5: [5,16,8,4,2,1] (length 6)

6: [6,3,10,5,16,8,4,2,1] (length 9)

7: [7,22,11,34,17,52,26,13,40,20,10,5,16,8,4,2,1] (length 17)7 is the first number whose chain is at least 15 numbers long.

Shell¶

Complete these Shell coding challenges and submit screenshots. Each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

Helpful resources:

https://www.geeksforgeeks.org/awk-command-unixlinux-examples/

https://www.thegeekstuff.com/2010/02/awk-conditional-statements/

Problems:

SQL¶

Complete these SQL coding challenges and submit screenshots. For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

https://sqlzoo.net/wiki/More_JOIN_operations (queries 9, 10, 11, 12)

Problem 89-3¶

Commit + Review¶

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT

assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.Resolve 1 GitHub issue on one of your own repositories.

Submission Template¶

For your submission, copy and paste your links into the following template:

Repl.it link to Haskell code: _____

Link to Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for space-empires repo: _____

Commit link for machine-learning repo: _____

Commit link for assignment-problems repo: _____

Created issue: _____

Resolved issue: _____Problem 89-4¶

There will be a 45-minute quiz that you can take any time on Thursday. (We don't have school Friday.)

The quiz will cover C++ and Haskell.

For C++, you will need to be comfortable working with arrays.

For Haskell, you'll need to be comfortable working with list comprehensions and compositions of functions.

You will need to write C++ and Haskell functions to calculate some values. It will be somewhat similar to the meta-Fibonacci sum problem, except the computation will be different (and simpler).

Problem 88-1¶

This is the results spreadsheet that you'll paste your results into: https://docs.google.com/spreadsheets/d/1zUqn5OvF3_U3XJ_d25vtBiFkRB3RgSQSXNv6wga8aeI/edit?usp=sharing

Gini Tree¶

On the sex prediction dataset, train a Gini decision tree on the first half of the data and test on the second half of the data.

(If there's an odd number of data points, then round so that the first half of the data will have one more record than the second half)

Paste your prediction accuracy into the spreadsheet, along with the indices of any misclassified data points (zero-indexed: the index of the first data point in the dataset would be index 0).

Space Empires Level 1¶