Assignment Problems for Cohort 2¶

Future Problem¶

Future Problem¶

Future Problem¶

socket connections -- build calculator

Problem 55-1¶

Location: assignment-problems/magic_square.py

In this problem, you will solve for all arrangements of digits $1,2,\ldots, 9$ in a $3 \times 3$ "magic square" where all the rows, columns, and diagonals add up to $15$ and no digits are repeated.

a.

First, create a function is_valid(arr) that checks if a possibly-incomplete array is a valid magic square "so far". In order to be valid, all the rows, columns, and diagonals in an array that have been completely filled in must sum to $15.$
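A minimal sketch of this check, assuming the board is a 3 x 3 list of lists with None marking empty cells (as in the examples below). The helper name all_lines is hypothetical.

```python
def all_lines(arr):
    """Return the 8 lines of a 3x3 board: 3 rows, 3 columns, 2 diagonals."""
    rows = [list(row) for row in arr]
    cols = [[arr[r][c] for r in range(3)] for c in range(3)]
    diagonals = [[arr[i][i] for i in range(3)],
                 [arr[i][2 - i] for i in range(3)]]
    return rows + cols + diagonals


def is_valid(arr):
    """True if every completely filled-in line sums to 15."""
    for line in all_lines(arr):
        # Lines that still contain a None are not checked yet.
        if None not in line and sum(line) != 15:
            return False
    return True


print(is_valid([[None, None, None], [None, 3, None], [5, 6, 4]]))  # True
print(is_valid([[1, 2, None], [None, 3, None], [None, None, 4]]))  # False
```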

>>> arr1 = [[1,2,None],
            [None,3,None],
            [None,None,None]]
>>> is_valid(arr1)
True (because no rows, columns, or diagonals are completely filled in)

>>> arr2 = [[1,2,None],
            [None,3,None],
            [None,None,4]]
>>> is_valid(arr2)
False (because a diagonal is filled in and it doesn't sum to 15)

>>> arr3 = [[1,2,None],
            [None,3,None],
            [5,6,4]]
>>> is_valid(arr3)
False (because a diagonal is filled in and it doesn't sum to 15)
(it doesn't matter that the bottom row does sum to 15)

>>> arr4 = [[None,None,None],
            [None,3,None],
            [5,6,4]]
>>> is_valid(arr4)
True (because there is one row that's filled in and it sums to 15)

b.

Now, write a script to start filling in numbers of the array -- but whenever you reach a configuration that can no longer become a valid magic square, you should not explore that configuration any further. Once you reach a complete, valid magic square, print it out.

- Tip: An ugly but straightforward way to solve this is to use 9 nested for loops, along with continue statements where appropriate. (A continue statement allows you to immediately continue to the next item in a for loop, without executing any of the code below the continue statement.)
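As an alternative to writing out 9 nested loops, the same prune-and-continue idea can be expressed recursively. This is a sketch, not the required nested-loop structure; the names solve and line_ok are hypothetical, and the validity check is restated so the block runs on its own.

```python
def line_ok(arr):
    """True if every completely filled-in row, column, and diagonal sums to 15."""
    lines = [row for row in arr]
    lines += [[arr[r][c] for r in range(3)] for c in range(3)]
    lines += [[arr[i][i] for i in range(3)], [arr[i][2 - i] for i in range(3)]]
    return all(None in line or sum(line) == 15 for line in lines)


def solve(arr=None, position=0, solutions=None):
    """Fill cells in row-major order, pruning partial boards that can't work."""
    if arr is None:
        arr = [[None] * 3 for _ in range(3)]
    if solutions is None:
        solutions = []
    if position == 9:
        solutions.append([row[:] for row in arr])  # store a copy of the board
        return solutions
    row, col = divmod(position, 3)
    used = {value for r in arr for value in r if value is not None}
    for num in range(1, 10):
        if num in used:  # no repeated digits
            continue
        arr[row][col] = num
        if line_ok(arr):  # only go deeper if the board is still valid
            solve(arr, position + 1, solutions)
        arr[row][col] = None  # undo before trying the next digit
    return solutions


squares = solve()
for square in squares:
    print(square)
```

Each recursive call plays the role of one of the nested loops, and resetting the cell to None after each attempt replaces "clear out the array" in the outline below.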

Some of the first steps are shown below to give a concrete demonstration of the procedure:

Filling...

[[_,_,_],
 [_,_,_],
 [_,_,_]]

[[1,_,_],
 [_,_,_],
 [_,_,_]]

[[1,2,_],
 [_,_,_],
 [_,_,_]]

[[1,2,3],
 [_,_,_],
 [_,_,_]]
^ is no longer a valid magic square

[[1,2,4],
 [_,_,_],
 [_,_,_]]
^ is no longer a valid magic square

[[1,2,5],
 [_,_,_],
 [_,_,_]]
^ is no longer a valid magic square

...

[[1,2,9],
 [_,_,_],
 [_,_,_]]
^ is no longer a valid magic square

[[1,3,2],
 [_,_,_],
 [_,_,_]]
^ is no longer a valid magic square

[[1,3,4],
 [_,_,_],
 [_,_,_]]
^ is no longer a valid magic square

[[1,3,5],
 [_,_,_],
 [_,_,_]]
^ is no longer a valid magic square

...

[[1,3,9],
 [_,_,_],
 [_,_,_]]
^ is no longer a valid magic square

[[1,4,2],
 [_,_,_],
 [_,_,_]]
^ is no longer a valid magic square

...

[[1,5,9],
 [_,_,_],
 [_,_,_]]

[[1,5,9],
 [2,_,_],
 [_,_,_]]

[[1,5,9],
 [2,3,_],
 [_,_,_]]

[[1,5,9],
 [2,3,4],
 [_,_,_]]
^ is no longer a valid magic square

[[1,5,9],
 [2,3,6],
 [_,_,_]]
^ is no longer a valid magic square

...

Here is a rough outline of the structure:

arr = (initialize it with Nones)
for num1 in range(1,10):
    clear out the array and put num1 in it
    if not is_valid(arr):
        continue
    for num2 in range(1,10): # modify this range so that it doesn't include num1
        clear out the array and put num1, num2 in it
        if not is_valid(arr):
            continue
        for num3 in range(1,10): # modify this range so that it doesn't include num1 or num2
            clear out the array and put num1, num2, num3 in it
            if not is_valid(arr):
                continue
            ... and so on

Problem 55-2¶

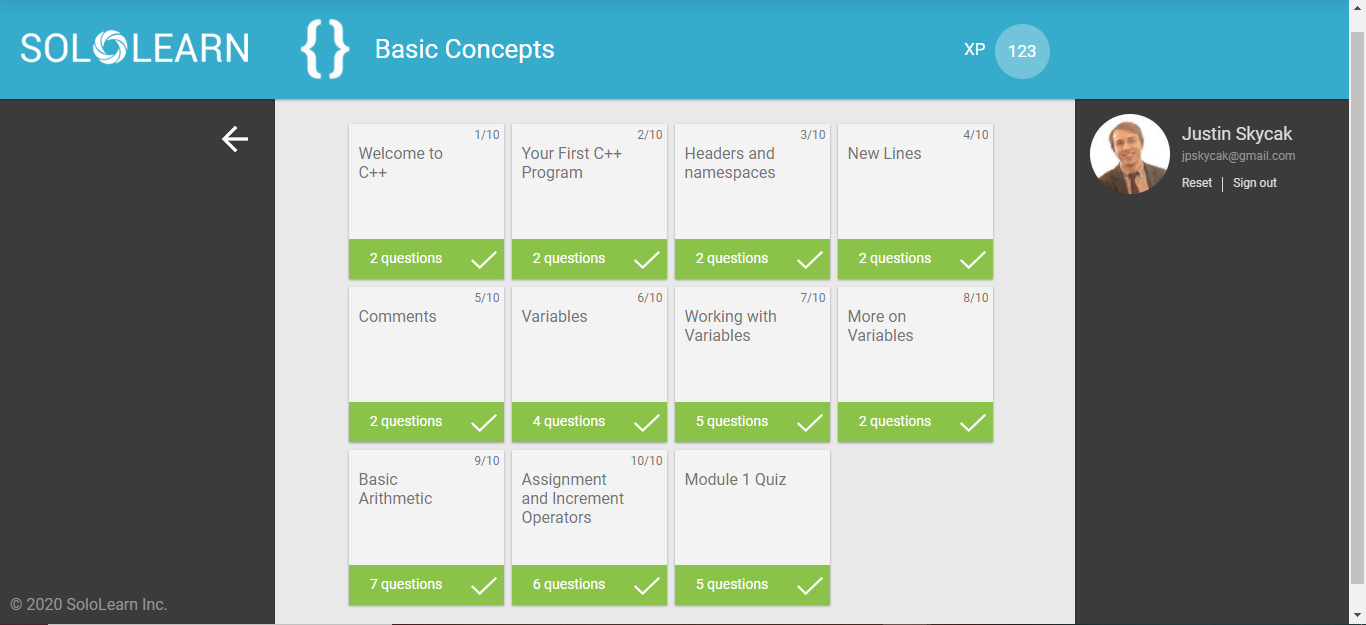

C++¶

Implement the metaFibonacciSum function in C++:

#include <iostream>
#include <cassert>

int metaFibonacciSum(int n)
{
    // return the result immediately if n<2

    // otherwise, construct an array called "terms"
    // that contains the Fibonacci terms at indices
    // 0, 1, ..., n

    // construct an array called "extendedTerms" that
    // contains the Fibonacci terms at indices
    // 0, 1, ..., a_n (where a_n is the nth Fibonacci term)

    // when you fill up this array, many of the terms can
    // simply be copied from the existing "terms" array. But
    // if you need additional terms, you'll have to compute
    // them the usual way (by adding the previous 2 terms)

    // then, create an array called "partialSums" that
    // contains the partial sums S_0, S_1, ..., S_{a_n}

    // finally, add up the desired partial sums,
    // S_{a_0} + S_{a_1} + ... + S_{a_n},
    // and return this result
}

int main()
{
    std::cout << "Testing...\n";
    assert(metaFibonacciSum(6)==74);
    std::cout << "Success!";
    return 0;
}

Shell¶

Complete these Shell coding challenges and submit screenshots. Each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

Helpful resource: https://www.geeksforgeeks.org/awk-command-unixlinux-examples/

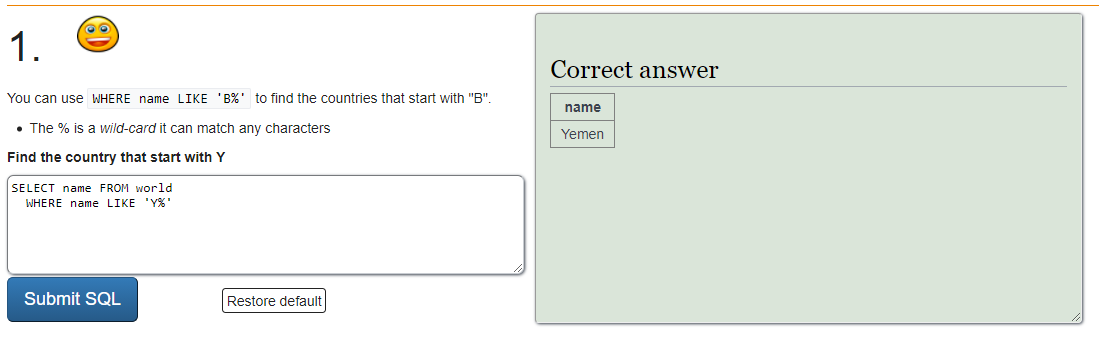

SQL¶

Complete these SQL coding challenges and submit screenshots. For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

https://sqlzoo.net/wiki/More_JOIN_operations (queries 5, 6, 7, 8)

Problem 55-3¶

Review; 10% of assignment grade; 15 minutes estimate

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.

Resolve 1 GitHub issue on one of your own repositories.

SUBMISSION TEMPLATE¶

For your submission, copy and paste your links into the following template:

Repl.it link to magic square solver: ___

Repl.it link to C++ code: _____

Link to Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for assignment-problems repo: _____

Created issue: _____

Resolved issue: _____

Problem 54-1¶

Primary problems; 60% of assignment grade; 60 minutes estimate

In your Graph class, create a method graph.set_breadth_first_distance_and_previous(starting_node_index) that does a breadth-first traversal and sets the attributes node.distance and node.previous during the traversal.

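Whenever you're at a current_node and you check its neighbors, make the following updates for each neighbor that has not yet been visited or added to the queue:

- set neighbor.previous as the current_node
- set neighbor.distance = current_node.distance + 1

(The starting node has distance 0 and no previous node.)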

Then, create the following methods:

- graph.calc_distance(starting_node_index, ending_node_index): computes the distance between the starting node and the ending node. All you have to do is run graph.set_breadth_first_distance_and_previous(starting_node_index) and then return the distance of the ending_node.
- graph.calc_shortest_path(starting_node_index, ending_node_index): computes the sequence of node indices on the shortest path between the starting node and the ending node. All you have to do is
    - run graph.set_breadth_first_distance_and_previous(starting_node_index)
    - start at the terminal node and repeatedly go to the previous node until you get to the initial node
    - keep track of all the nodes you visit (this is the shortest path in reverse)
    - return the path in order from the initial node index to the terminal node index (you'll have to reverse the reversed path)
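A minimal sketch of these steps, assuming nodes are identified by their integer indices and that distance and previous are stored in dictionaries rather than as node.distance and node.previous attributes. The function names are hypothetical stand-ins for the Graph methods.

```python
from collections import deque


def build_neighbors(edges):
    """Undirected adjacency lists: an edge (a, b) makes a and b neighbors."""
    neighbors = {}
    for a, b in edges:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)
    return neighbors


def breadth_first_distance_and_previous(neighbors, start):
    distance = {start: 0}
    previous = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in neighbors[current]:
            if neighbor not in distance:  # not yet visited or queued
                distance[neighbor] = distance[current] + 1
                previous[neighbor] = current
                queue.append(neighbor)
    return distance, previous


def calc_shortest_path(neighbors, start, end):
    _, previous = breadth_first_distance_and_previous(neighbors, start)
    path = [end]
    while path[-1] != start:
        path.append(previous[path[-1]])  # walk backwards from the end
    return path[::-1]  # reverse so the path runs from start to end


edges = [(0, 1), (1, 2), (1, 3), (3, 4), (1, 4), (4, 5)]
neighbors = build_neighbors(edges)
distance, _ = breadth_first_distance_and_previous(neighbors, 0)
print(distance[4])                          # 2
print(calc_shortest_path(neighbors, 5, 2))  # [5, 4, 1, 2]
```

Using the distance dictionary as the "already seen" marker means each node's distance and previous node are set exactly once, the first time breadth-first search reaches it.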

Here are some tests:

>>> edges = [(0,1),(1,2),(1,3),(3,4),(1,4),(4,5)]

>>> graph = Graph(edges)

at this point, the graph looks like this:

0 -- 1 -- 2
     |  \
     3 -- 4 -- 5

>>> graph.calc_distance(0,4)

2

>>> graph.calc_distance(5,2)

3

>>> graph.calc_distance(0,5)

3

>>> graph.calc_distance(4,1)

1

>>> graph.calc_distance(3,3)

0

>>> graph.calc_shortest_path(0,4)

[0, 1, 4]

>>> graph.calc_shortest_path(5,2)

[5, 4, 1, 2]

>>> graph.calc_shortest_path(0,5)

[0, 1, 4, 5]

>>> graph.calc_shortest_path(4,1)

[4, 1]

>>> graph.calc_shortest_path(3,3)

[3]

Problem 54-2¶

Supplemental problems; 30% of assignment grade; 60 minutes estimate

Location: assignment-problems

Haskell¶

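Let $a_k$ be the $k$th Fibonacci number (with $a_0 = 0$ and $a_1 = 1$) and let $S_k = a_0 + a_1 + \cdots + a_k$ be the sum of the Fibonacci numbers up through index $k.$ Write a function metaFibonacciSum that takes an input $n$ and computes the sum

$$ \sum\limits_{k=0}^n S_{a_k} = S_{a_0} + S_{a_1} + \cdots + S_{a_n}. $$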
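A short Python sketch of this definition, for illustration only (the exercise itself asks for Haskell). It builds the Fibonacci terms up to index $a_n$, accumulates the partial sums $S_0, \ldots, S_{a_n}$, and adds up $S_{a_0}, \ldots, S_{a_n}$. The function names are hypothetical.

```python
def fibonacci_terms(count):
    """Return [a_0, a_1, ..., a_{count-1}] with a_0 = 0 and a_1 = 1."""
    terms = [0, 1]
    while len(terms) < count:
        terms.append(terms[-1] + terms[-2])
    return terms[:count]


def meta_fibonacci_sum(n):
    a = fibonacci_terms(n + 1)            # a_0, ..., a_n
    extended = fibonacci_terms(a[n] + 1)  # a_0, ..., a_{a_n}
    partial_sums = []                     # S_0, ..., S_{a_n}
    running_total = 0
    for term in extended:
        running_total += term
        partial_sums.append(running_total)
    return sum(partial_sums[a[k]] for k in range(n + 1))


print(meta_fibonacci_sum(6))  # 74
```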

For example, if we wanted to compute the result for n=6, then we'd need to

compute the Fibonacci numbers $a_0$ through $a_6$: $$ a_0=0, a_1=1, a_2=1, a_3=2, a_4=3, a_5=5, a_6=8 $$

compute the Fibonacci sums $S_0$ through $S_8$ (since $a_6 = 8$): $$ \begin{align*} S_0 &= 0 \\ S_1 &= 0 + 1 = 1 \\ S_2 &= 0 + 1 + 1 = 2 \\ S_3 &= 0 + 1 + 1 + 2 = 4 \\ S_4 &= 0 + 1 + 1 + 2 + 3 = 7 \\ S_5 &= 0 + 1 + 1 + 2 + 3 + 5 = 12 \\ S_6 &= 0 + 1 + 1 + 2 + 3 + 5 + 8 = 20 \\ S_7 &= 0 + 1 + 1 + 2 + 3 + 5 + 8 + 13 = 33 \\ S_8 &= 0 + 1 + 1 + 2 + 3 + 5 + 8 + 13 + 21 = 54 \\ \end{align*} $$

Add up the desired sums:

$$ \begin{align*} \sum\limits_{k=0}^6 S_{a_k} &= S_{a_0} + S_{a_1} + S_{a_2} + S_{a_3} + S_{a_4} + S_{a_5} + S_{a_6} \\ &= S_{0} + S_{1} + S_{1} + S_{2} + S_{3} + S_{5} + S_{8} \\ &= 0 + 1 + 1 + 2 + 4 + 12 + 54 \\ &= 74 \end{align*} $$

Here's a template:

-- first, define a recursive function "fib"
-- to compute the nth Fibonacci number
-- once you've defined "fib", proceed to the
-- steps below

firstKEntriesOfSequence k = -- your code here; should return the list [a_0, a_1, ..., a_k]

kthPartialSum k = -- your code here; returns a single number

termsToAddInMetaSum n = -- your code here; should return the list [S_{a_0}, S_{a_1}, ..., S_{a_n}]

metaSum n = -- your code here; returns a single number

main = print (metaSum 6) -- should come out to 74

Shell¶

Complete these Shell coding challenges and submit screenshots. Each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

Helpful resource: https://www.theunixschool.com/2012/07/10-examples-of-paste-command-usage-in.html

https://www.hackerrank.com/challenges/paste-1/problem

https://www.hackerrank.com/challenges/paste-2/problem

SQL¶

Complete these SQL coding challenges and submit screenshots. For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

https://sqlzoo.net/wiki/More_JOIN_operations (queries 1, 2, 3, 4)

Problem 54-3¶

Review; 10% of assignment grade; 15 minutes estimate

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.

Resolve 1 GitHub issue on one of your own repositories.

SUBMISSION TEMPLATE¶

For your submission, copy and paste your links into the following template:

Repl.it link to graph/tests/test_graph.py: ___

Repl.it link to Haskell code: _____

Link to Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for space-empires repo: _____

Commit link for assignment-problems repo: _____

Created issue: _____

Resolved issue: _____

Problem 53-1¶

Primary problems; 60% of assignment grade; 60 minutes estimate

In your LinearRegressor and LogisticRegressor, update your observation in the predict method as follows:

If any non-interaction terms (i.e. column names that don't contain a '*' symbol) appear as dataframe columns but not in your observation, set their observation values to $0.$

If any interaction terms appear as dataframe columns but not in your observation, generate them by multiplying the values of the corresponding variables.
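A minimal sketch of this transformation, assuming the predictor columns are available as a list of strings, that interaction columns are two-variable names of the form 'a * b' as in the example below, and that the dependent variable ('rating') is not in the list. complete_observation is a hypothetical helper name.

```python
def complete_observation(observation, columns):
    """Fill in missing dummy variables with 0 and generate missing interaction terms."""
    completed = dict(observation)
    # Non-interaction terms: any column without '*' that the observation lacks is 0.
    for column in columns:
        if '*' not in column and column not in completed:
            completed[column] = 0
    # Interaction terms (two-variable only): 'a * b' is the product of a and b.
    for column in columns:
        if '*' in column and column not in completed:
            left, right = [name.strip() for name in column.split('*')]
            completed[column] = completed[left] * completed[right]
    return completed


columns = ['beef', 'pb', 'mayo', 'jelly',
           'beef * pb', 'beef * mayo', 'beef * jelly',
           'pb * mayo', 'pb * jelly', 'mayo * jelly']
print(complete_observation({'beef': 8, 'mayo': 1}, columns))
```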

Then, you should be able to run the following tests (taken from Problem 52-1)

# test 8 slices of beef + mayo

>>> observation = {'beef': 8, 'mayo': 1}

>>> linear_regressor.predict(observation)

11.34

>>> logistic_regressor.predict(observation)

9.72

Note: under the hood, the observation should be transformed like this:

initial input:

{'beef': 8, 'mayo': 1}

fill in 0's for any missing non-interaction variables:

{'beef': 8, 'pb': 0, 'mayo': 1, 'jelly': 0}

fill in missing interaction terms that appear in the dataset:

{'beef': 8, 'pb': 0, 'mayo': 1, 'jelly': 0,

'beef * pb': 0, 'beef * mayo': 8, 'beef * jelly': 0,

'pb * mayo': 0, 'pb * jelly': 0,

'mayo * jelly': 0}

# test 4 tbsp of pb + 8 slices of beef + mayo

>>> observation = {'beef': 8, 'pb': 4, 'mayo': 1}

>>> linear_regressor.predict(observation)

3.62

>>> logistic_regressor.predict(observation)

0.77

# test 8 slices of beef + mayo + jelly

>>> observation = {'beef': 8, 'mayo': 1, 'jelly': 1}

>>> linear_regressor.predict(observation)

2.79

>>> logistic_regressor.predict(observation)

0.79

b. Submit quiz corrections for any problems you missed. We went over the quiz in the first part of the class recording: https://vimeo.com/507190028

(Note: if you did not miss any problems, then you don't have to submit anything)

Problem 53-2¶

Supplemental problems; 30% of assignment grade; 60 minutes estimate

Location: assignment-problems

a. Skim the following section of http://learnyouahaskell.com/higher-order-functions.

Function composition

Consider the function $$ f(x,y) = \max \left( x, -\tan(\cos(y)) \right) $$

This function can be implemented as

>>> f x y = max x (negate (tan (cos y)))

or, we can implement it using function composition notation as follows:

>>> f x = max x . negate . tan . cos

Note that although max is a function of two variables, max x is a function of one variable (since one of the inputs is already supplied). So, we can chain it together with other single-variable functions.
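Python has no built-in composition operator, but a small hypothetical compose helper illustrates the same idea: compose(f, g)(y) means f(g(y)), mirroring Haskell's f . g. Fixing the first argument of max with functools.partial plays the role of max x.

```python
import math
from functools import partial, reduce


def compose(*functions):
    """compose(f, g, h)(y) == f(g(h(y))), like f . g . h in Haskell."""
    return reduce(lambda f, g: lambda y: f(g(y)), functions)


def negate(value):
    return -value


def f_direct(x, y):
    return max(x, -math.tan(math.cos(y)))


def f_composed(x):
    # partial(max, x) is a one-argument function, like "max x" in Haskell
    return compose(partial(max, x), negate, math.tan, math.cos)


print(f_direct(0.5, 2.0), f_composed(0.5)(2.0))
```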

Previously, you wrote a function tail' in Tail.hs that finds the last n elements of a list by reversing the list, taking the first n elements of the reversed list, and then reversing the result.

Rewrite the function tail' using composition notation, so that it's cleaner. Run Tail.hs again to make sure it still gives the same output as before.

b. Write a function isPrime that determines whether a nonnegative integer x is prime. You can use the same approach that you did with one of our beginning Python problems: loop through numbers between 2 and x-1 and see if you can find any factors.

Note that neither 0 nor 1 is prime.
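For reference, the trial-division approach described above looks like this in Python (a sketch; the exercise itself asks for C++). It checks every candidate factor from 2 to x-1, exactly as described, so it is simple but slow for large x.

```python
def is_prime(x):
    """Trial division: x is prime if x >= 2 and nothing in 2..x-1 divides it."""
    if x < 2:  # 0 and 1 are not prime
        return False
    for candidate in range(2, x):
        if x % candidate == 0:
            return False
    return True


print([n for n in range(20) if is_prime(n)])  # [2, 3, 5, 7, 11, 13, 17, 19]
```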

Here is a template for your file isPrime.cpp:

#include <iostream>
#include <cassert>

bool isPrime(int x)
{
    // your code here
}

int main()
{
    assert(!isPrime(0));
    assert(!isPrime(1));
    assert(isPrime(2));
    assert(isPrime(3));
    assert(!isPrime(4));
    assert(isPrime(5));
    assert(isPrime(7));
    assert(!isPrime(9));
    assert(isPrime(11));
    assert(isPrime(13));
    assert(!isPrime(15));
    assert(!isPrime(16));
    assert(isPrime(17));
    assert(isPrime(19));
    assert(isPrime(97));
    assert(!isPrime(99));
    assert(isPrime(13417));
    std::cout << "Success!";
    return 0;
}

Your program should work like this:

>>> g++ isPrime.cpp -o isPrime
>>> ./isPrime
Success!

c. Complete these Shell coding challenges and submit screenshots. Each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

Here's a reference to the sort command: https://www.thegeekstuff.com/2013/04/sort-files/

Note that the "tab" character must be specified as $'\t'.

These problems are super quick, so we'll do several.

https://www.hackerrank.com/challenges/text-processing-sort-5/tutorial

https://www.hackerrank.com/challenges/text-processing-sort-6/tutorial

https://www.hackerrank.com/challenges/text-processing-sort-7/tutorial

d. Complete these SQL coding challenges and submit screenshots. For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

https://sqlzoo.net/wiki/The_JOIN_operation (queries 12, 13)

Problem 53-3¶

Review; 10% of assignment grade; 15 minutes estimate

Commit your code to Github.

Make 1 GitHub issue on your assigned classmate's repository (but NOT assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.

Resolve 1 GitHub issue on one of your own repositories.

SUBMISSION TEMPLATE¶

For your submission, copy and paste your links into the following template:

Repl.it link to machine-learning/tests/test_logistic_regressor.py: _____

Repl.it link to machine-learning/tests/test_linear_regressor.py: _____

Overleaf link to quiz corrections: _____

Repl.it link to Haskell code: _____

Repl.it link to C++ code: _____

Link to Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for machine-learning repo: _____

Commit link for assignment-problems repo: _____

Created issue: _____

Resolved issue: _____

Problem 52-1¶

Primary problems; 60% of assignment grade; 60 minutes estimate

a. Consider the sandwich prediction task again.

>>> df = DataFrame.from_array(

[[0, 0, [], 1],

[0, 0, ['mayo'], 1],

[0, 0, ['jelly'], 4],

[0, 0, ['mayo', 'jelly'], 0],

[5, 0, [], 4],

[5, 0, ['mayo'], 8],

[5, 0, ['jelly'], 1],

[5, 0, ['mayo', 'jelly'], 0],

[0, 5, [], 5],

[0, 5, ['mayo'], 0],

[0, 5, ['jelly'], 9],

[0, 5, ['mayo', 'jelly'], 0],

[5, 5, [], 0],

[5, 5, ['mayo'], 0],

[5, 5, ['jelly'], 0],

[5, 5, ['mayo', 'jelly'], 0]],

columns = ['beef', 'pb', 'condiments', 'rating']

)

(i) Fit a linear regression model containing all interaction terms. Put the model in an Overleaf doc.

rating = beta_0

+ beta_1 ( slices beef ) + beta_2 ( tbsp pb ) + beta_3 ( mayo ) + beta_4 ( jelly )

+ beta_5 ( slices beef ) ( tbsp pb ) + beta_6 ( slices beef ) ( mayo ) + beta_7 ( slices beef ) ( jelly )

+ beta_8 ( tbsp pb ) ( mayo ) + beta_9 ( tbsp pb ) ( jelly )

+ beta_10 ( mayo ) ( jelly )

(ii) Fit a logistic regression model containing all interaction terms. Wherever there is a rating of 0, replace it with 0.1. Put the model in an Overleaf doc.

rating = 10/(1 + exp(

beta_0

+ beta_1 ( slices beef ) + beta_2 ( tbsp pb ) + beta_3 ( mayo ) + beta_4 ( jelly )

+ beta_5 ( slices beef ) ( tbsp pb ) + beta_6 ( slices beef ) ( mayo ) + beta_7 ( slices beef ) ( jelly )

+ beta_8 ( tbsp pb ) ( mayo ) + beta_9 ( tbsp pb ) ( jelly )

+ beta_10 ( mayo ) ( jelly ) ))

(iii) Use your models to predict the following ratings. Post your predictions on #results once you've got them.
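One way to fit the linear model in (i) is ordinary least squares via the normal equations $X^T X \beta = X^T y$. The sketch below does this in plain Python with Gaussian elimination (no numpy), assuming the condiments column has already been turned into 0/1 mayo and jelly columns as in Problem 51-1. The helper names are hypothetical, and this is not necessarily how your LinearRegressor is organized.

```python
from itertools import combinations

# Rows: beef, pb, mayo, jelly, rating (condiments already turned into dummy variables)
data = [
    [0, 0, 0, 0, 1], [0, 0, 1, 0, 1], [0, 0, 0, 1, 4], [0, 0, 1, 1, 0],
    [5, 0, 0, 0, 4], [5, 0, 1, 0, 8], [5, 0, 0, 1, 1], [5, 0, 1, 1, 0],
    [0, 5, 0, 0, 5], [0, 5, 1, 0, 0], [0, 5, 0, 1, 9], [0, 5, 1, 1, 0],
    [5, 5, 0, 0, 0], [5, 5, 1, 0, 0], [5, 5, 0, 1, 0], [5, 5, 1, 1, 0],
]


def features(beef, pb, mayo, jelly):
    """[1, main effects, all pairwise interactions] in beta_0..beta_10 order."""
    base = [beef, pb, mayo, jelly]
    return [1] + base + [a * b for a, b in combinations(base, 2)]


def solve_linear_system(matrix, rhs):
    """Gaussian elimination with partial pivoting for a square system."""
    n = len(rhs)
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        known = sum(aug[r][c] * solution[c] for c in range(r + 1, n))
        solution[r] = (aug[r][n] - known) / aug[r][r]
    return solution


X = [features(*row[:4]) for row in data]
y = [row[4] for row in data]
k = len(X[0])
XtX = [[sum(row[a] * row[b] for row in X) for b in range(k)] for a in range(k)]
Xty = [sum(row[a] * value for row, value in zip(X, y)) for a in range(k)]
betas = solve_linear_system(XtX, Xty)

# 8 slices of beef + mayo
prediction = sum(b * v for b, v in zip(betas, features(8, 0, 1, 0)))
print(round(prediction, 2))
```

The 16 rows form a full 2 x 2 x 2 x 2 design, so the 11 columns are linearly independent and the normal equations have a unique solution.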

8 slices of beef + mayo

linear regression rating prediction: ___

logistic regression rating prediction: ___

4 tbsp of pb + jelly

linear regression rating prediction: ___

logistic regression rating prediction: ___

4 tbsp of pb + mayo

linear regression rating prediction: ___

logistic regression rating prediction: ___

4 tbsp of pb + 8 slices of beef + mayo

linear regression rating prediction: ___

logistic regression rating prediction: ___

8 slices of beef + mayo + jelly

linear regression rating prediction: ___

logistic regression rating prediction: ___

b. Create a Graph class in your graph repository. This will represent an undirected graph, so instead of parents and children, nodes will merely have neighbors. An edge (a,b) means a is a neighbor of b and b is a neighbor of a. So the order of a and b does not matter.

- Implement a method get_nodes_breadth_first that returns the nodes in breadth-first order.
- Implement a method get_nodes_depth_first that returns the nodes in depth-first order.

These methods will be almost exactly the same as in your Tree class, except they should only consider neighbors that are unvisited and not in the queue. Also, we will need to pass in the index of the starting node.

Note: Originally, I intended for us to write a calc_distance method that works similarly to breadth-first search, but on second thought, we should start by implementing breadth-first and depth-first search in our Graph class since it's slightly different than what we implemented in the Tree class. We'll do calc_distance on the next assignment.
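A minimal sketch of both traversals on an adjacency dictionary (hypothetical function names; your Graph class would work with Node objects instead). The breadth-first version uses exactly the "unvisited and not in the queue" rule. For depth-first order, the sketch uses the standard stack approach of skipping a node that is already visited when it is popped, because checking only "not on the stack" at push time can produce orders that are not depth-first on some graphs.

```python
from collections import deque


def build_neighbors(edges):
    neighbors = {}
    for a, b in edges:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)
    return neighbors


def nodes_breadth_first(neighbors, start):
    visited, queue = [], deque([start])
    while queue:
        current = queue.popleft()
        visited.append(current)
        for neighbor in neighbors[current]:
            if neighbor not in visited and neighbor not in queue:
                queue.append(neighbor)
    return visited


def nodes_depth_first(neighbors, start):
    visited, stack = [], [start]
    while stack:
        current = stack.pop()
        if current in visited:  # a node may be pushed more than once
            continue
        visited.append(current)
        for neighbor in neighbors[current]:
            if neighbor not in visited:
                stack.append(neighbor)
    return visited


neighbors = build_neighbors([(0, 1), (1, 2), (1, 3), (3, 4), (1, 4), (4, 5)])
print(nodes_breadth_first(neighbors, 2))  # [2, 1, 0, 3, 4, 5]
print(nodes_depth_first(neighbors, 2))    # [2, 1, 4, 5, 3, 0]
```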

>>> edges = [(0,1),(1,2),(1,3),(3,4),(1,4),(4,5)]

>>> graph = Graph(edges)

the graph looks like this:

0 -- 1 -- 2
     |  \
     3 -- 4 -- 5

>>> bf = graph.get_nodes_breadth_first(2)

>>> [node.index for node in bf]

[2, 1, 0, 3, 4, 5]

note: other breadth-first orders are permissible,

e.g. [2, 1, 4, 3, 0, 5]

here's what's happening under the hood:

queue = [2], visited = []

current_node: 2

unvisited neighbors not in queue: 1

queue = [1], visited = [2]

current_node: 1

unvisited neighbors not in queue: 0, 3, 4

queue = [0, 3, 4], visited = [2, 1]

current_node: 0

unvisited neighbors not in queue: (none)

queue = [3, 4], visited = [2, 1, 0]

current_node: 3

unvisited neighbors not in queue: (none)

queue = [4], visited = [2, 1, 0, 3]

current_node: 4

unvisited neighbors not in queue: 5

queue = [5], visited = [2, 1, 0, 3, 4]

current_node: 5

unvisited neighbors not in queue: (none)

queue = [], visited = [2, 1, 0, 3, 4, 5]

queue is empty, so we stop

>>> df = graph.get_nodes_depth_first(2)

>>> [node.index for node in df]

[2, 1, 3, 4, 5, 0]

note: other depth-first orders are permissible,

e.g. [2, 1, 4, 5, 3, 0]

Problem 52-2¶

Supplemental problems; 30% of assignment grade; 60 minutes estimate

Location: assignment-problems

a. Skim the following section of http://learnyouahaskell.com/higher-order-functions.

Maps and filters

Pay attention to the following examples:

>>> map (+3) [1,5,3,1,6]

[4,8,6,4,9]

>>> filter (>3) [1,5,3,2,1,6,4,3,2,1]

[5,6,4]

Create a Haskell file SquareSingleDigitNumbers.hs and write a function squareSingleDigitNumbers that takes a list and returns the squares of the values that are less than 10.

To check your function, print squareSingleDigitNumbers [2, 7, 15, 11, 5]. You should get a result of [4, 49, 25].

This is a one-liner. If you get stuck for more than 10 minutes, ask for help on Slack.
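For comparison with the map and filter examples above, Python's built-in map and filter behave the same way on that input. They return iterators, so list() is used to see the values.

```python
shifted = list(map(lambda x: x + 3, [1, 5, 3, 1, 6]))
large = list(filter(lambda x: x > 3, [1, 5, 3, 2, 1, 6, 4, 3, 2, 1]))
print(shifted)  # [4, 8, 6, 4, 9]
print(large)    # [5, 6, 4]
```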

b. Write a C++ program to calculate the height of a ball that falls from a tower.
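The program's logic (distance fallen $\tfrac{1}{2} g t^2$, with the height clamped at 0) can be prototyped in Python before writing the C++ version. This sketch assumes whole-second time steps and uses Python's :g format to mimic C++'s default six-significant-digit output; simulate_fall is a hypothetical name.

```python
GRAVITY = 9.8  # meters/second squared, the same value used in constants.h


def simulate_fall(initial_height):
    """Return the status lines for a ball dropped from initial_height meters."""
    lines = []
    seconds = 0
    while True:
        height = initial_height - GRAVITY * seconds * seconds / 2
        if height <= 0:
            # The ball stops at the ground, so report 0 and stop.
            lines.append(f"At {seconds} seconds, the ball is at height 0 meters")
            return lines
        lines.append(f"At {seconds} seconds, the ball is at height {height:g} meters")
        seconds += 1


for line in simulate_fall(100):
    print(line)
```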

- Create a file constants.h to hold your gravity constant:

#ifndef CONSTANTS_H
#define CONSTANTS_H

namespace myConstants
{
    const double gravity(9.8); // in meters/second squared
}

#endif

- Create a file simulateFall.cpp:

#include <iostream>
#include "constants.h"

double calculateDistanceFallen(int seconds)
{
    // approximate distance fallen after a particular number of seconds
    double distanceFallen = myConstants::gravity * seconds * seconds / 2;
    return distanceFallen;
}

void printStatus(int time, double height)
{
    std::cout << "At " << time
              << " seconds, the ball is at height "
              << height << " meters\n";
}

int main()
{
    using namespace std;

    cout << "Enter the initial height of the tower in meters: ";
    double initialHeight;
    cin >> initialHeight;

    // your code here
    // use calculateDistanceFallen to find the height now
    // use calculateDistanceFallen and printStatus
    // to generate the desired output
    // if the height now goes negative, then the status
    // should say that the height is 0 and the program
    // should stop (since the ball stops falling at height 0)

    return 0;
}

Your program should work like this:

>>> g++ simulateFall.cpp -o simulateFall

>>> ./simulateFall

Enter the initial height of the tower in meters: 100

At 0 seconds, the ball is at height 100 meters

At 1 seconds, the ball is at height 95.1 meters

At 2 seconds, the ball is at height 80.4 meters

At 3 seconds, the ball is at height 55.9 meters

At 4 seconds, the ball is at height 21.6 meters

At 5 seconds, the ball is at height 0 meters

c. Complete these Shell coding challenges and submit screenshots. Each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

Here's a reference to the sort command: https://www.thegeekstuff.com/2013/04/sort-files/

These problems are super quick, so we'll do several.

https://www.hackerrank.com/challenges/text-processing-sort-1/tutorial

https://www.hackerrank.com/challenges/text-processing-sort-2/tutorial

https://www.hackerrank.com/challenges/text-processing-sort-3/tutorial

https://www.hackerrank.com/challenges/text-processing-sort-4/tutorial

d. Complete these SQL coding challenges and submit screenshots. For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

https://sqlzoo.net/wiki/The_JOIN_operation (queries 10, 11)

Problem 52-3¶

Review; 10% of assignment grade; 15 minutes estimate

Now, everyone should have a handful of issues on their repositories. So we'll go back to making 1 issue and resolving 1 issue.

Make 1 GitHub issue on your assigned classmate's repository (but NOT assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate.

Resolve 1 GitHub issue on one of your own repositories.

SUBMISSION TEMPLATE¶

For your submission, copy and paste your links into the following template:

Link to Overleaf doc containing your model: _____

Link to file in machine-learning/analysis where you fit

your regressors with dummy variables and interaction terms: ____

Link to graph/tests/test_graph.py: _____

Repl.it link to Haskell code: _____

Repl.it link to C++ code: _____

Link to Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for machine-learning repo: _____

Commit link for graph repo: _____

Commit link for assignment-problems repo: _____

Created issue: _____

Resolved issue: _____

Problem 51-1¶

Primary problems; 60% of assignment grade; 60 minutes estimate

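a. In your dataframe, write a method create_dummy_variables() that takes the name of a column whose entries are lists (here, 'condiments') and replaces that column with one 0/1 column for each distinct entry.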
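A minimal sketch of the transformation on plain lists, assuming the dataframe can be represented as a list of column names plus a list of rows; create_dummy_variables here is a standalone hypothetical function, not your DataFrame method. New columns are created in order of first appearance and placed where the original column was, which matches the expected column order in the example below.

```python
def create_dummy_variables(columns, rows, column_name):
    """Replace a list-valued column with one 0/1 column per distinct entry."""
    index = columns.index(column_name)
    categories = []
    for row in rows:
        for item in row[index]:
            if item not in categories:  # keep order of first appearance
                categories.append(item)
    new_columns = columns[:index] + categories + columns[index + 1:]
    new_rows = []
    for row in rows:
        dummies = [1 if category in row[index] else 0 for category in categories]
        new_rows.append(row[:index] + dummies + row[index + 1:])
    return new_columns, new_rows


columns = ['beef', 'pb', 'condiments', 'rating']
rows = [[0, 0, [], 1], [0, 0, ['mayo'], 1], [0, 0, ['jelly'], 4], [0, 0, ['mayo', 'jelly'], 0]]
print(create_dummy_variables(columns, rows, 'condiments'))
```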

>>> df = DataFrame.from_array(

[[0, 0, [], 1],

[0, 0, ['mayo'], 1],

[0, 0, ['jelly'], 4],

[0, 0, ['mayo', 'jelly'], 0],

[5, 0, [], 4],

[5, 0, ['mayo'], 8],

[5, 0, ['jelly'], 1],

[5, 0, ['mayo', 'jelly'], 0],

[0, 5, [], 5],

[0, 5, ['mayo'], 0],

[0, 5, ['jelly'], 9],

[0, 5, ['mayo', 'jelly'], 0],

[5, 5, [], 0],

[5, 5, ['mayo'], 0],

[5, 5, ['jelly'], 0],

[5, 5, ['mayo', 'jelly'], 0]],

columns = ['beef', 'pb', 'condiments', 'rating']

)

>>> df = df.create_dummy_variables('condiments')

>>> df.columns

['beef', 'pb', 'mayo', 'jelly', 'rating']

>>> df.to_array()

[[0, 0, 0, 0, 1],

[0, 0, 1, 0, 1],

[0, 0, 0, 1, 4],

[0, 0, 1, 1, 0],

[5, 0, 0, 0, 4],

[5, 0, 1, 0, 8],

[5, 0, 0, 1, 1],

[5, 0, 1, 1, 0],

[0, 5, 0, 0, 5],

[0, 5, 1, 0, 0],

[0, 5, 0, 1, 9],

[0, 5, 1, 1, 0],

[5, 5, 0, 0, 0],

[5, 5, 1, 0, 0],

[5, 5, 0, 1, 0],

[5, 5, 1, 1, 0]]

b. Suppose that you wish to model a deer population $D(t)$ and a wolf population $W(t)$ over time $t$ (where time is measured in years).

Initially, there are $100$ deer and $10$ wolves.

In the absence of wolves, the deer population would increase at the instantaneous rate of $60\%$ per year.

In the absence of deer, the wolf population would decrease at the instantaneous rate of $90\%$ per year.

The wolves and deer meet at an instantaneous rate of $0.05$ times per wolf per deer per year, and every time a wolf meets a deer, it kills and eats the deer.

The rate at which the wolf population increases is proportional to the number of deer that are killed, by a factor of $0.4.$ In other words, the wolf population grows by a rate of $0.4$ wolves per deer killed per year.

(i) Set up a system of differential equations to model the situation:

$$\begin{cases} \dfrac{\text{d}D}{\textrm{d}t} = (\_\_\_) D + (\_\_\_) DW, \quad D(0) = \_\_\_ \\ \dfrac{\text{d}W}{\textrm{d}t} = (\_\_\_) W + (\_\_\_) DW, \quad W(0) = \_\_\_ \\ \end{cases}$$

Check your answer: at $t=0,$ you should have $\dfrac{\text{d}D}{\textrm{d}t} = 10$ and $\dfrac{\text{d}W}{\textrm{d}t} = 11.$

IMPORTANT: Don't spend too long setting up your system. If you've spent over 10 minutes on it, and your system doesn't pass the check, then send a screenshot of your system to me and I'll give you some guidance.

Here's some latex for you to use:

$$\begin{cases}
\dfrac{\text{d}D}{\textrm{d}t} = (\_\_\_) D + (\_\_\_) DW, \quad D(0) = \_\_\_ \\
\dfrac{\text{d}W}{\textrm{d}t} = (\_\_\_) W + (\_\_\_) DW, \quad W(0) = \_\_\_ \\
\end{cases}$$

(ii) (2 points) Plot the system of differential equations for $0 \leq t \leq 100,$ using a step size $\Delta t = 0.001.$ Put this plot in your Overleaf doc and post it on #results.

- Check: Your plot should display oscillations, like a sinusoidal curve.
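A generic forward-Euler sketch for plotting systems like this one. It deliberately takes the right-hand sides as a function you supply (for this problem the state would be [D, W]), so the blanks in (i) are still yours to fill in. The names euler and derivatives are hypothetical, and the plotting step itself is left out.

```python
def euler(derivatives, initial_state, dt, t_max):
    """Forward Euler: state(t + dt) = state(t) + dt * derivatives(t, state(t))."""
    times = [0.0]
    states = [list(initial_state)]
    steps = round(t_max / dt)
    for step in range(steps):
        t, state = times[-1], states[-1]
        rates = derivatives(t, state)
        states.append([value + dt * rate for value, rate in zip(state, rates)])
        times.append((step + 1) * dt)
    return times, states


# Example with a system whose answer is known: dy/dt = -y, y(0) = 1.
times, states = euler(lambda t, state: [-state[0]], [1.0], dt=0.1, t_max=1.0)
print(states[1][0], states[-1][0])
```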

(iii) Explain why the oscillations arise. What does this mean in terms of the wolf and deer populations? Why does this happen?

Problem 51-2¶

Supplemental problems; 30% of assignment grade; 60 minutes estimate

PART 1¶

Location: assignment-problems

Skim the following section of http://learnyouahaskell.com/recursion.

A few more recursive functions

Pay attention to the following example. take n myList returns the first n entries of myList.

take' :: (Num i, Ord i) => i -> [a] -> [a]
take' n _
    | n <= 0   = []
take' _ []     = []
take' n (x:xs) = x : take' (n-1) xs

Create a Haskell file Tail.hs and write a function tail' that takes a number n and a list and returns the last n values of the list.

Here's the easiest way to do this...

Write a helper function reverseList that reverses a list. This will be a recursive function, which you can define using the following template:

reverseList :: [a] -> [a]
reverseList [] = (your code here -- base case)
reverseList (x:xs) = (your code here -- recursive formula)

Here, x is the first element of the input list and xs is the rest of the elements. For the recursive formula, just call reverseList on the rest of the elements and put the first element of the list at the end. You'll need to use the ++ operation for list concatenation.

Once you've written reverseList and tested to make sure it works as intended, you can implement tail' by reversing the input list, calling take' on the reversed list, and reversing the result.

To check your function, print tail' 4 [8, 3, -1, 2, -5, 7]. You should get a result of [-1, 2, -5, 7].
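The same recursive structure can be tried out in Python before writing the Haskell version: reverse_list mirrors the reverseList template (a base case for the empty list, then "reverse the rest and put the first element at the end"), and tail combines it with a take. These function names are hypothetical Python stand-ins.

```python
def take(n, items):
    """First n items, following take' (n <= 0 or an empty list gives [])."""
    if n <= 0 or not items:
        return []
    return [items[0]] + take(n - 1, items[1:])


def reverse_list(items):
    if not items:  # base case: the empty list
        return []
    return reverse_list(items[1:]) + [items[0]]  # reverse the rest, first goes last


def tail(n, items):
    return reverse_list(take(n, reverse_list(items)))


print(tail(4, [8, 3, -1, 2, -5, 7]))  # [-1, 2, -5, 7]
```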

If you get stuck anywhere in this problem, don't spend a bunch of time staring at it. Be sure to post on Slack. These Haskell problems can be tricky if you're not taking the right approach from the beginning, but after a bit of guidance, it can become much simpler.

PART 2¶

Complete these C++/Shell/SQL coding challenges and submit screenshots.

For C++/Shell, each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

C++

https://www.hackerrank.com/challenges/inheritance-introduction/problem

- Guess what? After this problem, we're done with the useful C++ problems on HackerRank. Next time, we'll start some C++ coding in Repl.it. We'll start by re-implementing a bunch of problems that we did when we were first getting used to Python.

Shell

https://www.hackerrank.com/challenges/text-processing-tr-1/problem

https://www.hackerrank.com/challenges/text-processing-tr-2/problem

https://www.hackerrank.com/challenges/text-processing-tr-3/problem

Helpful templates:

$ echo "Hello" | tr "e" "E"
HEllo
$ echo "Hello how are you" | tr " " '-'
Hello-how-are-you
$ echo "Hello how are you 1234" | tr -d '[0-9]'
Hello how are you
$ echo "Hello how are you" | tr -d '[a-e]'
Hllo how r you

More info on tr here: https://www.thegeekstuff.com/2012/12/linux-tr-command/

These problems are all very quick. If you find yourself spending more than a couple minutes on these, be sure to ask for help.

SQL

https://sqlzoo.net/wiki/The_JOIN_operation (queries 7, 8, 9)

Problem 51-3¶

Review; 10% of assignment grade; 15 minutes estimate

Commit your code to GitHub. When you submit your assignment, include a link to your commit(s). If you don't do this, your assignment will receive a grade of $0$ until you resubmit with links to your commits.

Additionally, do the following:

- Make 2 GitHub issues on your assigned classmate's repository (but NOT assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate. When you submit your assignment, include the links to the issues you created.

SUBMISSION TEMPLATE¶

For your submission, copy and paste your links into the following template:

Repl.it link to dataframe tests including create_dummy_variables: _____

Repl.it link to predator_prey_model.py: _____

Link to Overleaf doc: _____

Repl.it link to Haskell code: _____

Link to C++/Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for simulation repo: _____

Commit link for machine-learning repo: _____

Commit link for assignment-problems repo: _____

Issue 1: _____

Issue 2: _____

Problem 50-1¶

Primary problems; 60% of assignment grade; 60 minutes estimate

Location: Overleaf and simulation/analysis/sir_model.py

One of the simplest ways to model the spread of disease using differential equations is the SIR model. The SIR model assumes three sub-populations: susceptible, infected, and recovered.

The number of susceptible people $(S)$ decreases at a rate proportional to the rate of meeting between susceptible and infected people (because susceptible people have a chance of catching the disease when they come in contact with infected people).

The number of infected people $(I)$ increases at a rate proportional to the rate of meeting between susceptible and infected people (because susceptible people become infected after catching the disease), and decreases at a rate proportional to the number of infected people (as the diseased people recover).

The number of recovered people $(R)$ increases at a rate proportional to the number of infected people (as the diseased people recover).

a. Write a system of differential equations to model the system. Put your system in an Overleaf doc.

$$\begin{cases} \dfrac{\textrm{d}S}{\textrm{d}t} &= \_\_\_, \quad S(0) = \_\_\_ \\ \dfrac{\textrm{d}I}{\textrm{d}t} &= \_\_\_, \quad I(0) = \_\_\_ \\ \dfrac{\textrm{d}R}{\textrm{d}t} &= \_\_\_, \quad R(0) = \_\_\_ \end{cases}$$

Make the following assumptions:

There are initially $1000$ susceptible people and $1$ infected person.

The number of meetings between susceptible and infected people each day is proportional to the product of the numbers of susceptible and infected people, by a factor of $0.01 \, .$ The transmission rate of the disease is $3\%.$ (In other words, $3\%$ of meetings result in transmission.)

Each day, $2\%$ of infected people recover.

Check: If you've written the system correctly, then at $t=0,$ you should have

$$ \dfrac{\textrm{d}S}{\textrm{d}t} = -0.3, \quad \dfrac{\textrm{d}I}{\textrm{d}t} = 0.28, \quad \dfrac{\textrm{d}R}{\textrm{d}t} = 0.02 \, . $$

IMPORTANT: Don't spend too long setting up your system. If you've spent over 10 minutes on it, and your system doesn't pass the check, then send a screenshot of your system to me and I'll give you some guidance.

b. Plot the system, post your plot on #results, and include the plot in your Overleaf document.

You get to choose your own step size and interval. Choose a step size small enough that the model doesn't blow up, but large enough that the simulation doesn't take long to run.

Choose an interval that displays all the main features of the differential equation, i.e. an interval that shows the behavior of the curves until they level off toward their asymptotes.
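The stepping procedure for part b can be sketched with forward Euler steps. This is a minimal sketch, not a required implementation: the constant and function names, the default step size of 0.1 days, and the 600-day interval are assumptions you should tune yourself, and plotting is left to whatever library you already use.

```python
# Minimal forward-Euler sketch of the SIR system described above.
# Constant and function names are illustrative assumptions, not a required API.

CONTACT_FACTOR = 0.01     # meetings per day = 0.01 * S * I
TRANSMISSION_RATE = 0.03  # 3% of meetings result in transmission
RECOVERY_RATE = 0.02      # 2% of infected people recover each day


def derivatives(s, i, r):
    """Return (dS/dt, dI/dt, dR/dt); r is unused but keeps the state signature uniform."""
    new_infections = CONTACT_FACTOR * TRANSMISSION_RATE * s * i
    recoveries = RECOVERY_RATE * i
    return -new_infections, new_infections - recoveries, recoveries


def simulate(s0=1000.0, i0=1.0, r0=0.0, step=0.1, num_days=600):
    """Advance the system with Euler steps; return the t, S, I, R histories."""
    t, s, i, r = 0.0, s0, i0, r0
    history = {"t": [t], "S": [s], "I": [i], "R": [r]}
    for _ in range(round(num_days / step)):
        ds, di, dr = derivatives(s, i, r)
        # Euler update: new value = old value + step * derivative
        s, i, r = s + step * ds, i + step * di, r + step * dr
        t += step
        for key, value in zip("tSIR", (t, s, i, r)):
            history[key].append(value)
    return history
```

Pass history["t"] together with history["S"], history["I"], and history["R"] to your plotting code. If the curves oscillate or go negative, shrink step; if the run is slow, enlarge it.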

c. Describe the plot in words, explaining what is happening in the plot and why it is happening.

Problem 50-2

Supplemental problems; 30% of assignment grade; 60 minutes estimate

PART 1

Location: assignment-problems

Skim the following sections of http://learnyouahaskell.com/recursion.

Hello recursion

Maximum awesome

Pay attention to the following example, especially:

maximum' :: (Ord a) => [a] -> a
maximum' [] = error "maximum of empty list"
maximum' [x] = x
maximum' (x:xs)
    | x > maxTail = x
    | otherwise = maxTail
    where maxTail = maximum' xs

Create a Haskell file SmallestPositive.hs and write a function findSmallestPositive that takes a list and returns the smallest positive number in the list.

The format will be similar to that shown in the maximum' example above.

To check your function, print findSmallestPositive [8, 3, -1, 2, -5, 7]. You should get a result of 2.

Important: In your function findSmallestPositive, you will need to compare x to 0, which means we must assume not only that the items x can be ordered (Ord) but also that they are numbers (Num). So your type signature will need to be findSmallestPositive :: (Num a, Ord a) => [a] -> a.

Note: It is not necessary to put a "prime" at the end of your function name, like is shown in the example.

PART 2

Complete these C++/Shell/SQL coding challenges and submit screenshots.

For C++/Shell, each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

C++

https://www.hackerrank.com/challenges/c-tutorial-class/problem

You can read more about C++ classes here: https://www.programiz.com/cpp-programming/object-class

If you get stuck for more than 20 minutes, post on Slack to get help

Shell

https://www.hackerrank.com/challenges/text-processing-tail-1/problem

https://www.hackerrank.com/challenges/text-processing-tail-2/problem

https://www.hackerrank.com/challenges/text-processing-in-linux---the-middle-of-a-text-file/problem

Helpful templates:

tail -n 11               # Last 11 lines
tail -c 20               # Last 20 characters
head -n 10 | tail -n 5   # Get the first 10 lines, then the last 5 of those 10 (so the final result is lines 6-10)

These problems are all one-liners. If you find yourself spending more than a couple minutes on these, be sure to ask for help.

SQL

https://sqlzoo.net/wiki/The_JOIN_operation (queries 4,5,6)

Problem 50-3

Review; 10% of assignment grade; 15 minutes estimate

Commit your code to GitHub. When you submit your assignment, include a link to your commit(s). If you don't do this, your assignment will receive a grade of $0$ until you resubmit with links to your commits.

Additionally, do the following:

- Make 2 GitHub issues on your assigned classmate's repository (but NOT assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate. When you submit your assignment, include the links to the issues you created.

SUBMISSION TEMPLATE

For your submission, copy and paste your links into the following template:

Repl.it link to sir_model.py: _____

Link to Overleaf doc: _____

Repl.it link to Haskell code: _____

Link to C++/Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for simulation repo: _____

Commit link for assignment-problems repo: _____

Issue 1: _____

Issue 2: _____

Problem 50-4

There will be a 45-minute quiz on Friday from 9:15-10. Know how to do the following things:

List the nodes of a graph in breadth-first and depth-first orders

Fill in code templates for breadth-first search and depth-first search

Answer conceptual questions about similarities and differences between linear and logistic regression and interaction terms

Problem 49-1

Primary problems; 60% of assignment grade; 60 minutes estimate

a. Refactor your Tree class so that each node has an index as well as a value, the edges are given in terms of node indices, and the values are given as a list. Update your tests as well.
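The refactor can be sketched as follows. This is a minimal sketch, not the course's reference Tree class: it assumes each edge is a (parent_index, child_index) pair, as in the test cases below, and the Node attribute names (index, value, parent, children) are assumptions chosen to match those tests.

```python
class Node:
    """A tree node that remembers both its index and its value."""

    def __init__(self, index, value):
        self.index = index
        self.value = value
        self.parent = None
        self.children = []


class Tree:
    """Sketch of an index-based tree; edges are (parent_index, child_index) pairs."""

    def __init__(self, edges, node_values):
        self.edges = edges
        self.node_values = node_values  # node_values[i] is the value of node i
        self.nodes = []
        self.root = None

    def build_from_edges(self):
        # Create exactly one Node per index, so repeated values stay distinct nodes.
        self.nodes = [Node(index, value) for index, value in enumerate(self.node_values)]
        for parent_index, child_index in self.edges:
            parent = self.nodes[parent_index]
            child = self.nodes[child_index]
            parent.children.append(child)
            child.parent = parent
        # The root is the one node that never appears as a child in any edge.
        roots = [node for node in self.nodes if node.parent is None]
        if len(roots) != 1:
            raise ValueError("edges do not describe a single rooted tree")
        self.root = roots[0]
```

Because every Node is created once per index, the repeated values in TEST CASE 2 still produce distinct nodes, which is the point of switching from values to indices.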

TEST CASE 1

>>> node_values = ['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j', 'k']

This means that the nodes will be as follows:

- the node with index 0 will have value 'a'

- the node with index 1 will have value 'b'

- the node with index 2 will have value 'c'

- the node with index 3 will have value 'd'

- the node with index 4 will have value 'e'

- the node with index 5 will have value 'f'

- the node with index 6 will have value 'g'

- the node with index 7 will have value 'h'

- the node with index 8 will have value 'i'

- the node with index 9 will have value 'j'

- the node with index 10 will have value 'k'

>>> edges = [(0,2), (4,6), (4,8), (4,0), (3,1), (0,3), (3,5), (5,7), (3,9), (3,10)]

Note: now we're phrasing the edges in terms of node indices instead of node values. The corresponding value-based edges would be the ones we're already using in our tests:

[('a','c'), ('e','g'), ('e','i'), ('e','a'), ('d','b'), ('a','d'), ('d','f'), ('f','h'), ('d','j'), ('d','k')]

>>> tree = Tree(edges, node_values)

>>> tree.build_from_edges()

The indices of the nodes are as follows:

        4
      / | \
     0  8  6
    / \
   2   3_____
     / | \   \
    1  9  5   10
          |
          7

The values of the nodes are as follows:

        e
      / | \
     a  i  g
    / \
   c   d_____
     / | \   \
    b  j  f   k
          |
          h

>>> tree.root.value == 'e'
True
>>> tree.root.index == 4
True

Note: the following tests use sets {} rather than lists []. This way, you don't have to worry about order.

>>> children = set(tree.root.children)
>>> grandchildren = set([])
>>> for child in children:
...     grandchildren = grandchildren.union(set(child.children))
>>> great_grandchildren = set([])
>>> for grandchild in grandchildren:
...     great_grandchildren = great_grandchildren.union(set(grandchild.children))
>>> great_great_grandchildren = set([])
>>> for great_grandchild in great_grandchildren:
...     great_great_grandchildren = great_great_grandchildren.union(set(great_grandchild.children))
>>> {node.index for node in children}
{0, 8, 6}
>>> {node.value for node in children}
{'a', 'i', 'g'}
>>> {node.index for node in grandchildren}
{2, 3}
>>> {node.value for node in grandchildren}
{'c', 'd'}
>>> {node.index for node in great_grandchildren}
{1, 9, 5, 10}
>>> {node.value for node in great_grandchildren}
{'b', 'j', 'f', 'k'}
>>> {node.index for node in great_great_grandchildren}
{7}
>>> {node.value for node in great_great_grandchildren}
{'h'}

TEST CASE 2

>>> node_values = ['a', 'b', 'a', 'a', 'a', 'b', 'a', 'b', 'a', 'b', 'b']

This means that the nodes will be as follows:

- the node with index 0 will have value 'a'

- the node with index 1 will have value 'b'

- the node with index 2 will have value 'a'

- the node with index 3 will have value 'a'

- the node with index 4 will have value 'a'

- the node with index 5 will have value 'b'

- the node with index 6 will have value 'a'

- the node with index 7 will have value 'b'

- the node with index 8 will have value 'a'

- the node with index 9 will have value 'b'

- the node with index 10 will have value 'b'

>>> edges = [(0,2), (4,6), (4,8), (4,0), (3,1), (0,3), (3,5), (5,7), (3,9), (3,10)]

>>> tree = Tree(edges, node_values)

>>> tree.build_from_edges()

The indices of the nodes are as follows:

        4
      / | \
     0  8  6
    / \
   2   3_____
     / | \   \
    1  9  5   10
          |
          7

The values of the nodes are as follows:

        a
      / | \
     a  a  a
    / \
   a   a_____
     / | \   \
    b  b  b   b
          |
          b

>>> tree.root.value == 'a'
True
>>> tree.root.index == 4
True
>>> children = set(tree.root.children)
>>> grandchildren = set([])
>>> for child in children:
...     grandchildren = grandchildren.union(set(child.children))
>>> great_grandchildren = set([])
>>> for grandchild in grandchildren:
...     great_grandchildren = great_grandchildren.union(set(grandchild.children))
>>> great_great_grandchildren = set([])
>>> for great_grandchild in great_grandchildren:
...     great_great_grandchildren = great_great_grandchildren.union(set(great_grandchild.children))
>>> {node.index for node in children}
{0, 8, 6}
>>> {node.value for node in children}
{'a'}
>>> {node.index for node in grandchildren}
{2, 3}
>>> {node.value for node in grandchildren}
{'a'}
>>> {node.index for node in great_grandchildren}
{1, 9, 5, 10}
>>> {node.value for node in great_grandchildren}
{'b'}
>>> {node.index for node in great_great_grandchildren}
{7}
>>> {node.value for node in great_great_grandchildren}
{'b'}

b. In your LogisticRegressor, include an input upper_bound that represents the upper bound of the logistic function:

$$ y = \dfrac{\text{upper\_bound}}{1 + e^{\sum \beta_i x_i}} $$

Note that you will have to update your calculation of y_transformed accordingly:

$$ y' = \ln \left( \dfrac{\text{upper\_bound}}{y} - 1 \right) $$
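The transform and its inverse, generalized to an upper bound, can be sketched as two small functions. The names transform_rating and logistic are hypothetical; in your LogisticRegressor they would correspond to computing y_transformed and to making a prediction.

```python
import math


def transform_rating(y, upper_bound=1.0):
    """Map a target in (0, upper_bound) to the linear scale: ln(upper_bound / y - 1)."""
    if not 0 < y < upper_bound:
        raise ValueError("y must lie strictly between 0 and upper_bound")
    return math.log(upper_bound / y - 1)


def logistic(linear_combination, upper_bound=1.0):
    """Inverse of transform_rating: upper_bound / (1 + e^(sum of beta_i * x_i))."""
    return upper_bound / (1 + math.exp(linear_combination))
```

The guard in transform_rating shows why ratings of exactly 0 must be nudged to a small positive number: the logarithm is undefined there. A rating equal to the upper bound fails for the same reason, since it would require ln(0).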

c. Use your LogisticRegressor to fit the sandwich dataset with interaction terms. Note that any ratings of 0 need to be changed to a small positive number, say 0.1, for the logistic regressor to be able to fit the data.

>>> df = DataFrame.from_array(
...     [[0, 0, 1],
...      [1, 0, 2],
...      [2, 0, 4],
...      [4, 0, 8],
...      [6, 0, 9],
...      [0, 2, 2],
...      [0, 4, 5],
...      [0, 6, 7],
...      [0, 8, 6],
...      [2, 2, 0.1],
...      [3, 4, 0.1]],
...     columns = ['beef', 'pb', 'rating']
... )

Your logistic regression should take the form

$$ \text{rating} = \dfrac{10}{1 + \exp\Big( \beta_0 + \beta_1 \times (\text{beef}) + \beta_2 \times (\text{pb}) + \beta_3 \times (\text{beef})(\text{pb}) \Big) }$$

(i) State your logistic regression model in your Overleaf document, and post it on #results once you've got it.

(ii) Use your model to predict the rating of a sandwich with $5$ slices of roast beef and no peanut butter. State the prediction in your Overleaf document and post it on #results.

(iii) Use your model to predict the rating of a sandwich with $12$ slices of roast beef. State the prediction in your Overleaf document and post it on #results.

(iv) Use your model to predict the rating of a sandwich with $5$ slices of roast beef AND $5$ tablespoons of peanut butter (both ingredients on the same sandwich). State the prediction in your Overleaf document and post it on #results.
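For part c, one way to get the coefficients without a linear-algebra library is to solve the normal equations directly. The sketch below is a stand-alone stand-in for your LinearRegressor and LogisticRegressor: the helper names solve_linear_system, fit_least_squares, and predict are hypothetical, and it simply fits the transformed ratings against beef, pb, and the beef * pb interaction term.

```python
import math

UPPER_BOUND = 10  # ratings are assumed to live on a 0-10 scale

# beef, pb, rating (zero ratings already replaced by 0.1, as instructed above)
data = [[0, 0, 1], [1, 0, 2], [2, 0, 4], [4, 0, 8], [6, 0, 9],
        [0, 2, 2], [0, 4, 5], [0, 6, 7], [0, 8, 6],
        [2, 2, 0.1], [3, 4, 0.1]]


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_least_squares(features, targets):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y."""
    design = [[1.0] + list(row) for row in features]  # prepend intercept column
    k = len(design[0])
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(design, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Features include the interaction term beef * pb.
features = [[beef, pb, beef * pb] for beef, pb, _ in data]
# Transform the ratings so the model becomes linear: y' = ln(10 / y - 1).
transformed = [math.log(UPPER_BOUND / rating - 1) for _, _, rating in data]
betas = fit_least_squares(features, transformed)


def predict(beef, pb):
    z = betas[0] + betas[1] * beef + betas[2] * pb + betas[3] * beef * pb
    return UPPER_BOUND / (1 + math.exp(z))
```

Calling predict(5, 0) or predict(5, 5) gives the kind of prediction asked for in (ii) and (iv); compare the result against your own LogisticRegressor rather than treating this sketch as the answer key.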

Problem 49-2

Supplemental problems; 30% of assignment grade; 60 minutes estimate

PART 1

Location: assignment-problems

Skim the following section of http://learnyouahaskell.com/syntax-in-functions.

Let it be

Pay attention to the following example, especially:

calcBmis :: (RealFloat a) => [(a, a)] -> [a]

calcBmis xs = [bmi | (w, h) <- xs, let bmi = w / h ^ 2, bmi >= 25.0]

Create a Haskell file ProcessPoints.hs and write a function smallestDistances that takes a list of 3-dimensional points and returns the distances of any points that are within 10 units of the origin.

To check your function, print smallestDistances [(5,5,5), (3,4,5), (8,5,8), (9,1,4), (11,0,0), (12,13,14)]. You should get a result of [8.66, 7.07, 9.90].

- Note: The given result is shown to 2 decimal places. You don't have to round your result. I just didn't want to list out all the digits in the test.
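As an arithmetic check of the expected output, the same filter can be written in Python (used here only as a calculator; your submission should still be Haskell, and this snippet needs Python 3.8+). The cutoff `<= 10` is an assumption about what "within 10 units" means; no test point lies exactly at distance 10, so it makes no difference here.

```python
import math

points = [(5, 5, 5), (3, 4, 5), (8, 5, 8), (9, 1, 4), (11, 0, 0), (12, 13, 14)]

# Like Haskell's `let` inside a list comprehension, the walrus operator
# computes each distance once and reuses it in both the filter and the result.
distances = [d for x, y, z in points if (d := math.hypot(x, y, z)) <= 10]

print([round(d, 2) for d in distances])  # [8.66, 7.07, 9.9]
```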

PART 2

Complete these C++/Shell/SQL coding challenges and submit screenshots.

For C++/Shell, each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

C++

https://www.hackerrank.com/challenges/c-tutorial-struct/problem

You can read more about structs here: https://www.educative.io/edpresso/what-is-a-cpp-struct

If you get stuck for more than 10 minutes, post on Slack to get help

Shell

https://www.hackerrank.com/challenges/text-processing-cut-7/problem

https://www.hackerrank.com/challenges/text-processing-cut-8/problem

https://www.hackerrank.com/challenges/text-processing-cut-9/problem

https://www.hackerrank.com/challenges/text-processing-head-1/problem

https://www.hackerrank.com/challenges/text-processing-head-2/tutorial

Remember to check out the tutorial tabs.

Note that if you want to start at index $2$ and then go until the end of a line, you can just omit the ending index. For example, cut -c2- means print characters $2$ and onwards for each line in the file.

Also remember the template cut -d',' -f2-4, which means print fields $2$ through $4$ for each line in the file, where the fields are separated by the delimiter ','.

You can also look at this resource for some examples: https://www.folkstalk.com/2012/02/cut-command-in-unix-linux-examples.html

These problems are all one-liners. If you find yourself spending more than a couple minutes on these, be sure to ask for help.

SQL

https://sqlzoo.net/wiki/SUM_and_COUNT (queries 6,7,8)

https://sqlzoo.net/wiki/The_JOIN_operation (queries 1,2,3)

Problem 49-3

Review; 10% of assignment grade; 15 minutes estimate

Commit your code to GitHub. When you submit your assignment, include a link to your commit(s). If you don't do this, your assignment will receive a grade of $0$ until you resubmit with links to your commits.

Additionally, do the following:

- Make 2 GitHub issues on your assigned classmate's repository (but NOT assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate. When you submit your assignment, include the links to the issues you created.

- ~Resolve an issue that has been made on your own GitHub repository. When you submit your assignment, include a link to the issue you resolved. (If you don't have any issues on any of your repositories, then you don't have to do anything, but state that this is the case when you turn in your assignment.)~ Let's actually hold off on this bit for the next couple of weeks, so that we can build up an inventory of issues on our repositories. Then, once we have an inventory of 5-10 issues to choose from each time, we can start resolving them.

SUBMISSION TEMPLATE

For your submission, copy and paste your links into the following template:

Repl.it link to Tree tests: _____

Repl.it link to Haskell code: _____

Link to Overleaf doc for logistic regression: _____

Link to C++/Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for graph repo: _____

Commit link for assignment-problems repo: _____

Commit link for machine-learning repo: _____

Issue 1: _____

Issue 2: _____

Problem 48-1

Primary problems; 60% of assignment grade; 60 minutes estimate

a. Previously, we've fit a couple logistic regressions by hand (see Problems 33-1 and 37-2). Now, you will write a class LogisticRegressor that constructs a logistic regression.

You can import your LinearRegressor to take care of most of the processing. All you have to do in your LogisticRegressor is:

- Initialize the LogisticRegressor in the same way as the LinearRegressor.

- Transform the dependent variable using $y' = \ln \left( \dfrac{1}{y} - 1 \right)$.

- Compute your coefficients $\beta_i$ by fitting a linear regression $y' = \sum \beta_i x_i$.

- When you predict, plug your coefficients into the sigmoid function $y = \dfrac{1}{1+ e^{\sum \beta_i x_i} }$.
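The four steps above can be sketched for a single independent variable as follows. This is not your LogisticRegressor: the class name SimpleLogisticRegressor is hypothetical, and plain lists stand in for your DataFrame and LinearRegressor, so only the transform, fit, and sigmoid logic carries over. With x = 1, 2, 3 and y = 0.2, 0.25, 0.5 it reproduces the intercept, slope, and prediction from the walkthrough that follows.

```python
import math


class SimpleLogisticRegressor:
    """Hypothetical one-variable sketch of the transform-then-fit recipe.

    Every y must lie strictly between 0 and 1, or the transform is undefined.
    """

    def __init__(self, xs, ys):
        # Transform the dependent variable: y' = ln(1/y - 1).
        transformed = [math.log(1 / y - 1) for y in ys]
        # Fit y' = b0 + b1 * x by ordinary least squares.
        n = len(xs)
        x_mean = sum(xs) / n
        t_mean = sum(transformed) / n
        sxx = sum((x - x_mean) ** 2 for x in xs)
        sxt = sum((x - x_mean) * (t - t_mean) for x, t in zip(xs, transformed))
        self.slope = sxt / sxx
        self.intercept = t_mean - self.slope * x_mean

    def predict(self, x):
        # Plug the linear combination into the sigmoid 1 / (1 + e^(b0 + b1 * x)).
        return 1 / (1 + math.exp(self.intercept + self.slope * x))


model = SimpleLogisticRegressor([1, 2, 3], [0.2, 0.25, 0.5])
print(round(model.intercept, 3), round(model.slope, 3))  # 2.215 -0.693
print(round(model.predict(5), 3))                        # 0.777
```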

Here is an example:

>>> df = DataFrame.from_array(
...     [[1,0.2],
...      [2,0.25],
...      [3,0.5]],
...     columns = ['x','y']
... )

>>> log_reg = LogisticRegressor(df, dependent_variable = 'y')

>>> log_reg.predict({'x': 5})

0.777

Here's a walkthrough to help you debug:

1. Start with the initial dataframe:

'x','y'
[[1,0.2],
 [2,0.25],
 [3,0.5]]

2. Transform the dependent variable:

'x','y_transformed'
[[1,1.386],
 [2,1.099],
 [3,0]]

3. Fit a linear regression:

y_transformed = 2.215 - 0.693 * x

4. Make predictions using the sigmoid model:

y = 1/(1 + e^(y_transformed))
  = 1/(1 + e^(2.215 - 0.693 * x))

5. For example, when x=5, your prediction would be

y = 1/(1 + e^(2.215 - 0.693 * 5))
  = 0.777

b.

Here is some additional information about the Space Empires game. Also, I've got some more questions at the end. (Note: I've written a lot, but this is really just a 10-minute problem)

There are a couple additional rules:

In order to build a ship, not only must you have enough CPs and shipyards, but you must also have the necessary shipsize technology.

The combat order is constructed according to ships' tactics level: ships with tactics 0 are destroyed immediately, and ships with higher tactics fire first. If two ships have the same tactics, then the defending ship fires first (the defending ship is the ship that was the first to occupy the grid space).

Previously, I said that the maintenance cost is equal to the hull size. This is usually true, but there are some special types of ships (Decoy, Colonyship, Base) that don't have a maintenance cost.
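The combat-order rule amounts to a filter followed by a sort. In this hypothetical sketch each ship is a dict, and the "arrival" key (a smaller number means the ship occupied the grid space earlier, so it is the defender) is an assumption made for illustration; the actual game code may track this differently.

```python
def combat_order(ships):
    """Return the ships that fire, in firing order (hypothetical dict-based ships)."""
    # Ships with tactics 0 are destroyed immediately and never fire.
    survivors = [ship for ship in ships if ship["tactics"] > 0]
    # Higher tactics fire first; on a tie, the earlier occupant (the defender) fires first.
    return sorted(survivors, key=lambda ship: (-ship["tactics"], ship["arrival"]))


ships = [
    {"name": "attacking Scout", "tactics": 1, "arrival": 2},
    {"name": "Cruiser", "tactics": 3, "arrival": 2},
    {"name": "defending Scout", "tactics": 1, "arrival": 1},
    {"name": "Colonyship", "tactics": 0, "arrival": 1},
]
print([ship["name"] for ship in combat_order(ships)])
# ['Cruiser', 'defending Scout', 'attacking Scout']
```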

Ships have the following attributes:

- cp_cost - the number of CPs required to build the ship
- hullsize - the number of shipyards needed to build the ship (assuming shipyard technology level 1)
- shipsize_needed - the level of shipsize technology required to build the ship
- tactics - determines the combat order; ships with tactics 0 are destroyed immediately
- attack and defense - as usual
- maintenance - the number of CPs that must be paid during each Economic phase to retain the ship

'unit_data': {
    'Battleship': {'cp_cost': 20, 'hullsize': 3, 'shipsize_needed': 5, 'tactics': 5, 'attack': 5, 'defense': 2, 'maintenance': 3},
    'Battlecruiser': {'cp_cost': 15, 'hullsize': 2, 'shipsize_needed': 4, 'tactics': 4, 'attack': 5, 'defense': 1, 'maintenance': 2},
    'Cruiser': {'cp_cost': 12, 'hullsize': 2, 'shipsize_needed': 3, 'tactics': 3, 'attack': 4, 'defense': 1, 'maintenance': 2},
    'Destroyer': {'cp_cost': 9, 'hullsize': 1, 'shipsize_needed': 2, 'tactics': 2, 'attack': 4, 'defense': 0, 'maintenance': 1},
    'Dreadnaught': {'cp_cost': 24, 'hullsize': 3, 'shipsize_needed': 6, 'tactics': 5, 'attack': 6, 'defense': 3, 'maintenance': 3},
    'Scout': {'cp_cost': 6, 'hullsize': 1, 'shipsize_needed': 1, 'tactics': 1, 'attack': 3, 'defense': 0, 'maintenance': 1},
    'Shipyard': {'cp_cost': 3, 'hullsize': 1, 'shipsize_needed': 1, 'tactics': 3, 'attack': 3, 'defense': 0, 'maintenance': 0},
    'Decoy': {'cp_cost': 1, 'hullsize': 0, 'shipsize_needed': 1, 'tactics': 0, 'attack': 0, 'defense': 0, 'maintenance': 0},
    'Colonyship': {'cp_cost': 8, 'hullsize': 1, 'shipsize_needed': 1, 'tactics': 0, 'attack': 0, 'defense': 0, 'maintenance': 0},
    'Base': {'cp_cost': 12, 'hullsize': 3, 'shipsize_needed': 2, 'tactics': 5, 'attack': 7, 'defense': 2, 'maintenance': 0},
}

Here are the specifics regarding technology:

- attack, defense - determine the amount that gets added to a ship's attack or defense during battle
- shipsize - determines what kinds of ships can be built (provided you have enough CP and shipyards)

Level | Upgrade Cost | Benefit
----------------------------------------------------------------------
1     | -            | Can build Scout, Colony Ship, Ship Yard, Decoy
2     | 10           | Can build Destroyer, Base
3     | 15           | Can build Cruiser
4     | 20           | Can build Battlecruiser
5     | 25           | Can build Battleship
6     | 30           | Can build Dreadnaught

- movement - determines how many spaces each ship can move during each movement phase

Level | Upgrade Cost | Benefit
----------------------------------------------------------------------
1     | -            | Can move 1 space per movement
2     | 20           | Can move 1 space in each of the first 2 movements and 2 spaces in the third movement
3     | 30           | Can move 1 space in the first movement and 2 spaces in each of the second and third movements
4     | 40           | Can move 2 spaces per movement
5     | 40           | Can move 2 spaces in each of the first 2 movements and 3 spaces in the third movement
6     | 40           | Can move 2 spaces in the first movement and 3 spaces in each of the second and third movements

- shipyard - determines how much "hull size" each shipyard can build

Level | Upgrade Cost | Hull Size Building Capacity of Each Ship Yard
----------------------------------------------------------------------
1     | -            | 1
2     | 20           | 1.5
3     | 30           | 2

The information is summarized as follows:

'technology_data': {
    'shipsize':
        {'upgrade_cost': [10, 15, 20, 25, 30],
         'starting_level': 1},
    'attack':
        {'upgrade_cost': [20, 30, 40],
         'starting_level': 0},
    'defense':
        {'upgrade_cost': [20, 30, 40],
         'starting_level': 0},
    'movement':
        {'upgrade_cost': [20, 30, 40, 40, 40],
         'starting_level': 1},
    'shipyard':
        {'upgrade_cost': [20, 30],
         'starting_level': 1}
}

Questions - put your answers in your Overleaf doc

If a player has 30 CP and 2 Shipyards at its home colony (with Shipyard tech level 1), how many Scouts can it buy?

Who would win in combat -- a Colonyship or a Scout?

A Battleship and a Battlecruiser are in combat. Which ship attacks first?

Two Scouts are in combat. How do you determine which Scout attacks first?

Suppose you have 1000 CP and 4 shipyards. If you upgrade Shipyard technology to the max, how many Scouts could you build?
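One way to read technology_data is that upgrade_cost[k] is the price of going from level starting_level + k to starting_level + k + 1; this reading matches the shipsize, movement, and shipyard tables. The helpers below (hypothetical names, not part of any existing game code) sketch lookups under that assumption.

```python
technology_data = {
    'shipsize': {'upgrade_cost': [10, 15, 20, 25, 30], 'starting_level': 1},
    'attack':   {'upgrade_cost': [20, 30, 40], 'starting_level': 0},
    'defense':  {'upgrade_cost': [20, 30, 40], 'starting_level': 0},
    'movement': {'upgrade_cost': [20, 30, 40, 40, 40], 'starting_level': 1},
    'shipyard': {'upgrade_cost': [20, 30], 'starting_level': 1},
}


def max_level(technology):
    """Highest reachable level: one level per entry in upgrade_cost."""
    data = technology_data[technology]
    return data['starting_level'] + len(data['upgrade_cost'])


def next_upgrade_cost(technology, current_level):
    """CPs needed to go from current_level to current_level + 1, or None at the max level."""
    data = technology_data[technology]
    if current_level >= max_level(technology):
        return None
    return data['upgrade_cost'][current_level - data['starting_level']]
```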

Problem 48-2

Supplemental problems; 30% of assignment grade; 60 minutes estimate

PART 1

Location: assignment-problems

Observe the following example:

bmiTell :: (RealFloat a) => a -> a -> String
bmiTell weight height
    | bmi <= underweightThreshold = "The patient may be underweight. If this is the case, the patient should be recommended a higher-calorie diet."
    | bmi <= normalThreshold = "The patient may be at a normal weight."
    | otherwise = "The patient may be overweight. If this is the case, the patient should be recommended exercise and a lower-calorie diet."
    where bmi = weight / height ^ 2
          underweightThreshold = 18.5
          normalThreshold = 25.0

Create a Haskell file RecommendClothing.hs and write a function recommendClothing that takes the input degreesCelsius, converts it to degreesFahrenheit (multiply by $\dfrac{9}{5}$ and add $32$), and makes the following recommendations:

If the temperature is $ \geq 80 \, ^\circ \textrm{F},$ then recommend to wear a shortsleeve shirt.

If the temperature is $ > 65 \, ^\circ \textrm{F}$ but $ < 80 \, ^\circ \textrm{F},$ then recommend to wear a longsleeve shirt.

If the temperature is $ > 50 \, ^\circ \textrm{F}$ but $ \leq 65 \, ^\circ \textrm{F},$ then recommend to wear a sweater.

If the temperature is $ \leq 50 \, ^\circ \textrm{F},$ then recommend to wear a jacket.

PART 2

Complete these C++/Shell/SQL coding challenges and submit screenshots.

For C++/Shell, each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

C++

https://www.hackerrank.com/challenges/c-tutorial-strings/problem

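- Note that you can slice strings like this: myString.substr(1, 3). This returns the 3 characters starting at index 1; the second argument is a length, not an ending index.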

Shell

https://www.hackerrank.com/challenges/text-processing-cut-2/problem

https://www.hackerrank.com/challenges/text-processing-cut-3/problem

https://www.hackerrank.com/challenges/text-processing-cut-4/problem

https://www.hackerrank.com/challenges/text-processing-cut-5/problem

https://www.hackerrank.com/challenges/text-processing-cut-6/problem

Here are some useful templates:

- cut -c2-4 means print characters $2$ through $4$ for each line in the file.
- cut -d',' -f2-4 means print fields $2$ through $4$ for each line in the file, where the fields are separated by the delimiter ','.

You can also look at this resource for some examples: https://www.folkstalk.com/2012/02/cut-command-in-unix-linux-examples.html

These problems are all one-liners. If you find yourself spending more than a couple minutes on these, be sure to ask for help.

SQL

https://sqlzoo.net/wiki/SUM_and_COUNT (queries 1,2,3,4,5)

Problem 48-3

Review; 10% of assignment grade; 15 minutes estimate

Commit your code to GitHub. When you submit your assignment, include a link to your commit(s). If you don't do this, your assignment will receive a grade of $0$ until you resubmit with links to your commits.

Additionally, do the following:

- Make 2 GitHub issues on your assigned classmate's repository (but NOT assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate. When you submit your assignment, include the links to the issues you created.

- ~Resolve an issue that has been made on your own GitHub repository. When you submit your assignment, include a link to the issue you resolved. (If you don't have any issues on any of your repositories, then you don't have to do anything, but state that this is the case when you turn in your assignment.)~ Let's actually hold off on this bit for the next couple of weeks, so that we can build up an inventory of issues on our repositories. Then, once we have an inventory of 5-10 issues to choose from each time, we can start resolving them.

SUBMISSION TEMPLATE

For your submission, copy and paste your links into the following template:

Link to logistic regressor: _____

Link to logistic regressor test: _____

Link to Overleaf doc containing responses to Space Empires rules questions: _____

Repl.it link to Haskell code: _____

Link to C++/Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for machine-learning repo: _____

Commit link for assignment-problems repo: _____

Issue 1: _____

Issue 2: _____

Problem 47-1

Primary problems; 50% of assignment grade; 60 minutes estimate

a.

(i) In your DataFrame, write a method create_interaction_terms that takes in the names of 2 columns and then creates another column which contains the products of the values of those columns.
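The core of create_interaction_terms is one multiplication per row. The sketch below uses plain column names and row lists instead of your DataFrame class, so its signature is hypothetical; it returns new lists rather than modifying its inputs, mirroring how the example below reassigns df.

```python
def create_interaction_terms(columns, rows, first, second):
    """Hypothetical list-based stand-in for DataFrame.create_interaction_terms.

    Returns new (columns, rows) with an extra column named 'first * second'
    holding the product of the two named columns; the inputs are not modified.
    """
    i, j = columns.index(first), columns.index(second)
    new_columns = columns + [f"{first} * {second}"]
    new_rows = [row + [row[i] * row[j]] for row in rows]
    return new_columns, new_rows


columns = ["beef", "pb", "rating"]
rows = [[0, 0, 1], [2, 2, 0], [3, 4, 0]]
new_columns, new_rows = create_interaction_terms(columns, rows, "beef", "pb")
print(new_columns)  # ['beef', 'pb', 'rating', 'beef * pb']
print(new_rows)     # [[0, 0, 1, 0], [2, 2, 0, 4], [3, 4, 0, 12]]
```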

>>> df = DataFrame.from_array(
...     [[0, 0, 1],
...      [1, 0, 2],
...      [2, 0, 4],
...      [4, 0, 8],
...      [6, 0, 9],
...      [0, 2, 2],
...      [0, 4, 5],
...      [0, 6, 7],
...      [0, 8, 6],
...      [2, 2, 0],
...      [3, 4, 0]],
...     columns = ['beef', 'pb', 'rating']
... )
>>> df = df.create_interaction_terms('beef', 'pb')
>>> df.columns
['beef', 'pb', 'rating', 'beef * pb']
>>> df.to_array()
[[0, 0, 1, 0],
 [1, 0, 2, 0],
 [2, 0, 4, 0],
 [4, 0, 8, 0],
 [6, 0, 9, 0],
 [0, 2, 2, 0],
 [0, 4, 5, 0],
 [0, 6, 7, 0],
 [0, 8, 6, 0],
 [2, 2, 0, 4],
 [3, 4, 0, 12]]

(ii) Fit a linear regression on the dataset above.

$$ \text{rating} = \beta_0 + \beta_1 \times (\text{beef}) + \beta_2 \times (\text{pb}) + \beta_3 \times (\text{beef})(\text{pb})$$

State this model in your Overleaf document, and post it on #results once you've got it.

(iii) Use your model to predict the rating of a sandwich with $5$ slices of roast beef and no peanut butter. State the prediction in your Overleaf document.

(iv) Use your model to predict the rating of a sandwich with $5$ slices of roast beef AND $5$ tablespoons of peanut butter (both ingredients on the same sandwich). State the prediction in your Overleaf document.

(v) Look back at your answers to (iii) and (iv). Can both predictions be trusted now?

b.

In the near future, we're going to start building a game called Space Empires. This project will serve several purposes:

It's going to be very fun -- we're going to develop intelligent game-playing agents (i.e. the software version of autonomous robots) and have them play against each other.

It's going to give you practice organizing, writing, and debugging code that's spread over multiple folders and files.

It's going to provide a real use-case for all the algorithms and machine learning stuff we have been doing and have yet to do.

For now, I just want you to get acquainted with the rules of the game. I will tell you some rules of the game, and I'll ask you some questions afterwards.

There are 2 players on a $7 \times 7$ grid. Each player starts on their home Planet with 1 Colony and 4 Shipyards on that Planet, as well as a fleet of 3 Scouts and 3 Colonyships. The players also have 0 Construction Points (CPs) to begin with.

Scouts and Colonyships each have several attributes: CP cost (i.e. the number of CPs needed to build the ship), hull size, attack class, attack strength, defense strength, attack technology level, defense technology level, health level. Regardless of the number needed to hit, a roll of 1 will always score a hit.

On each turn, there are 3 phases: economic, movement, and combat.

Economic phase

During the economic phase, each player gets 20 Construction Points (CPs) from the Colony on their home Planet, as well as 5 CPs from any other colonies ("other colonies" will be defined in a later rule). However, each player must pay a maintenance cost (in CPs) for each ship. The maintenance cost of a ship is equal to the hull size of the ship, and if a player is unable to pay a maintenance cost, it must remove the ship.

A player can also build ships with any CPs it has remaining, but the ships must be built at a planet with one or more Shipyards, and the sum of the hull sizes of the ships built at a planet cannot exceed the number of Shipyards at that planet.

Movement

- The movement phase consists of 3 rounds of movement. During each round of movement, each player can move each ship by one square in any direction. If a Colonyship lands on a planet, then it can "colonize" the planet by turning into a Colony.

Combat phase

During the combat phase, a combat occurs at each square containing ships from both players. Each combat proceeds in rounds until only one player's ships remain at that spot.

Each round of combat starts with "ship screening", in which a player with more ships is given the opportunity to remove its ships from the combat round (but the number of ships that are left in combat must be at least the number of ships that the opponent has in that square).

Then, a "combat order" is constructed, in which ships are sorted by their attack class. The first ship in the combat order can attack any other ship. A 10-sided die is rolled, and if the die roll is less than or equal to the attacker's (attack strength + attack technology) minus the defender's (defense strength + defense technology), then a hit is scored. Once a ship sustains a number of hits equal to its hull size, it is destroyed.

The above procedure is repeated for each ship in the combat order. Then, if there are still ships from both teams left over, another round of combat begins. Combat continues until only one team's ships occupy the square.

Questions - put your answers in your Overleaf doc

If a player is unable to pay the maintenance cost for one of its ships, what must the player do?

Even if a player has a lot of CPs, that doesn't necessarily mean it can build a lot of ships on a single turn. Why not?

How many spaces, in total, can a player move a ship during a turn? (Remember that the movement phase consists of multiple rounds of movement)

If Player A has 5 ships and Player B has 3 ships in the same square, up to how many ships can Player A screen from combat?

Is it possible for any of the losing player's ships to survive a combat?

Problem 47-2

Supplemental problems; 40% of assignment grade; 60 minutes estimate

PART 1

Location: assignment-problems/refactor_string_processing.py

The following code is supposed to turn a string into an array. Currently, it's messy, and there are some subtle issues with the code. Clean up the code and get it to work.

Some particular things to fix are:

Putting whitespace where appropriate

Naming variables clearly

Deleting any pieces of code that aren't necessary

string = '"alpha","beta","gamma","delta"\n1,2,3,4\n5.0,6.0,7.0,8.0'
strings = [x.split(',') for x in string.split('\n')]
length_of_string = len(string)
arr = []
for string in strings:
    newstring = []
    if len(string) > 0:
        for char in string:
            if char[0]=='"' and char[-1]=='"':
                char = char[1:]
            elif '.' in char:
                char = int(char)
            else:
                char = float(char)
            newstring.append(char)
    arr.append(newstring)
print(arr)

---

What it should print:

[['alpha', 'beta', 'gamma', 'delta'], [1, 2, 3, 4], [5.0, 6.0, 7.0, 8.0]]

What actually happens:

Traceback (most recent call last):
  File "datasets/myfile.py", line 10, in <module>
    char = int(char)
ValueError: invalid literal for int() with base 10: '5.0'

PART 2

Location: assignment-problems

Skim the following section of http://learnyouahaskell.com/syntax-in-functions.

Pattern matching

Create a Haskell file Fibonacci.hs and write a function nthFibonacciNumber that computes the nth Fibonacci number, starting with $n=0$. Remember that the Fibonacci sequence is $0,1,1,2,3,5,8,\ldots$ where each number comes from adding the previous two.

To check your function, print nthFibonacciNumber 20. You should get a result of 6765.

Note: This part of the section will be very useful, since it talks about how to write a recursive function.

factorial :: (Integral a) => a -> a
factorial 0 = 1
factorial n = n * factorial (n - 1)

PART 3

Complete these C++/Shell/SQL coding challenges and submit screenshots.

For C++/Shell, each screenshot should include your username, the problem title, and the "Status: Accepted" indicator.

For SQL, each screenshot should include the problem number, the successful smiley face, and your query.

C++

https://www.hackerrank.com/challenges/arrays-introduction/problem

- Note that when the input is in the form of numbers separated by a space, you can read it into an array:

for (int i=0; i<n; i++) {
    cin >> a[i];
}

You can read the array out in a similar way.

Shell

https://www.hackerrank.com/challenges/text-processing-cut-1/problem

- Tip: for this problem, you can read the input line by line using the following syntax:

while read line
do
    (your code here)
done

Again, be sure to check out the top-right "Tutorial" tab.

SQL

https://sqlzoo.net/wiki/SELECT_within_SELECT_Tutorial (queries 9,10)

Problem 47-3

Review; 10% of assignment grade; 15 minutes estimate

Commit your code to GitHub. When you submit your assignment, include a link to your commit(s). If you don't do this, your assignment will receive a grade of $0$ until you resubmit with links to your commits.

Additionally, do the following:

Make 2 GitHub issues on your assigned classmate's repository (but NOT assignment-problems). See eurisko.us/resources/#code-reviews to determine your assigned classmate. When you submit your assignment, include the links to the issues you created.

~Resolve an issue that has been made on your own GitHub repository. When you submit your assignment, include a link to the issue you resolved. (If you don't have any issues on any of your repositories, then you don't have to do anything, but state that this is the case when you turn in your assignment.)~ Let's actually hold off on this bit for the next couple weeks, so that we can build up an inventory of issues on our repositories. Then, once we have an inventory of 5-10 issues to choose from each time, we can start resolving them.

SUBMISSION TEMPLATE

For your submission, copy and paste your links into the following template:

Link to overleaf doc: _____

Repl.it link to Haskell code: _____

Link to C++/Shell/SQL screenshots (Overleaf or Google Doc): _____

Commit link for machine-learning repo: _____

Commit link for assignment-problems repo: _____

Issue 1: _____

Issue 2: _____

Problem 46-1

Primary problem; 45% of assignment grade; 60 minutes estimate

Location: machine-learning/analysis/8_queens.py

We're going to be exploring approaches to solving the 8-queens problem on the next couple assignments.

The 8-queens problem is a challenge to place 8 queens on a chess board in a way that none can attack each other. Remember that in chess, queens can attack any piece that is on the same row, column, or diagonal. So, the 8-queens problem is to place 8 queens on a chess board so that none of them are on the same row, column, or diagonal.

a. Write a function show_board(locations) that takes a list of locations of 8 queens and prints out the corresponding board by placing periods in empty spaces and the index of the location in any space occupied by a queen.

>>> locations = [(0,0), (6,1), (2,2), (5,3), (4,4), (7,5), (1,6), (2,6)]
>>> show_board(locations)
0  .  .  .  .  .  .  .
.  .  .  .  .  .  6  .
.  .  2  .  .  .  7  .
.  .  .  .  .  .  .  .
.  .  .  .  4  .  .  .
.  .  .  3  .  .  .  .
.  1  .  .  .  .  .  .
.  .  .  .  .  5  .  .

Tip: To print out a row, you can first construct it as an array and then print the corresponding string, which consists of the array entries separated by two spaces:

>>> row_array = ['0', '.', '.', '.', '.', '.', '.', '.']
>>> row_string = '  '.join(row_array) # note that '  ' is TWO spaces
>>> print(row_string)
0  .  .  .  .  .  .  .

b. Write a function calc_cost(locations) that computes the "cost", i.e. the number of pairs of queens that are on the same row, column, or diagonal.

For example, in the board above, the cost is 10:

- Queen 2 and queen 7 are on the same row

- Queen 6 and queen 7 are on the same column

- Queen 0 and queen 2 are on the same diagonal

- Queen 0 and queen 4 are on the same diagonal

- Queen 2 and queen 4 are on the same diagonal

- Queen 3 and queen 4 are on the same diagonal

- Queen 4 and queen 7 are on the same diagonal

- Queen 3 and queen 7 are on the same diagonal

- Queen 1 and queen 6 are on the same diagonal

- Queen 3 and queen 5 are on the same diagonal

Verify that the cost of the above configuration is 10:

>>> calc_cost(locations)

10

Tip 1: It will be easier to debug your code if you write several helper functions: one which takes two coordinate pairs and determines whether they're on the same row, another which determines whether they're on the same column, and another which determines whether they're on the same diagonal.

Tip 2: To check if two locations are on the same diagonal, you can compute the slope between those two points and check if the slope comes out to $1$ or $-1.$
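Following Tips 1 and 2, here is one possible set of helpers. This sketch assumes each location is a (row, column) tuple, as in the example above, and the function names are hypothetical. The diagonal check replaces the slope computation with an equivalent comparison of absolute differences. That avoids dividing by zero when two queens share a column.

```python
def on_same_row(location_1, location_2):
    """True if two (row, column) locations share a row."""
    return location_1[0] == location_2[0]


def on_same_column(location_1, location_2):
    """True if two (row, column) locations share a column."""
    return location_1[1] == location_2[1]


def on_same_diagonal(location_1, location_2):
    """True if two distinct (row, column) locations share a diagonal.

    Checking |row difference| == |column difference| is equivalent to the
    slope being 1 or -1, but it never divides, so two queens in the same
    column cannot raise a ZeroDivisionError.
    """
    row_difference = location_1[0] - location_2[0]
    column_difference = location_1[1] - location_2[1]
    return row_difference != 0 and abs(row_difference) == abs(column_difference)


print(on_same_diagonal((0, 0), (4, 4)))  # True
print(on_same_diagonal((1, 6), (6, 1)))  # True
print(on_same_diagonal((1, 6), (2, 6)))  # False (same column)
```

On the example board, these helpers find 1 row pair, 1 column pair, and 8 diagonal pairs, which matches the cost of 10 listed above.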

c. Write a function random_optimizer(n) that generates n random arrays of locations for the 8 queens and returns the following dictionary:

{
    'locations': array that resulted in the lowest cost,
    'cost': the actual value of that lowest cost
}

Then, print out the lowest cost found by your random_optimizer for n = 10, 50, 100, 500, 1000. Once you have those printouts, post them on Slack in the #results channel.

Problem 46-2

Supplemental problems; 45% of assignment grade; 60 minutes estimate

PART 1

Location: assignment-problems/refactor_linear_regressor.py

The following code is taken from a LinearRegressor class. While most of the code will technically work, there may be a couple subtle issues, and the code is difficult to read.

Refactor this code so that it is more readable. It should be easy to glance at and understand what's going on. Some particular things to fix are:

Putting whitespace where appropriate

Naming variables clearly

Expanding out complicated one-liners

Deleting any pieces of code that aren't necessary

Important:

You don't have to actually run the code. This is just an exercise in improving code readability. You just need to copy and paste the code below into a file and clean it up.

Don't spend more than 20 min on this problem. You should fix the things that jump out at you as messy, but don't worry about trying to make it absolutely perfect.
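The matrix steps in the code below appear to compute ordinary least-squares coefficients using the normal equations, which may help when choosing clearer variable names. Let $X$ be the data matrix whose first column is all ones (for the constant term) and whose remaining columns hold the independent variables. Let $y$ be the column of dependent-variable values. The coefficients are then

$$\beta = \left(X^{\top} X\right)^{-1} X^{\top} y$$

The code's variables map onto this formula as follows:

- mat, once it has been transposed, plays the role of $X$.
- mat_t is $X^{\top}$.
- mat_mult is $X^{\top} X$, and mat_inv is its inverse.
- mat_pseudoinv is $\left(X^{\top} X\right)^{-1} X^{\top}$.
- multiplier is meant to hold $y$.
- multiplier_mat is $\beta$.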

def calculate_coefficients(self):
    final_dict = {}
    mat = [[1 for x in list(self.df.data_dict.values())[0][0]]]
    mat_dict = {}
    for key in self.df.data_dict:
        if key != self.dependent_variable:
            mat_dict[key] = self.df.data_dict[key]
    for row in range(len(mat_dict)):
        mat.append(list(self.df.data_dict.values())[row][0])
    mat = Matrix(mat)
    mat = mat.transpose()
    mat_t = mat.transpose()
    mat_mult = mat_t.matrix_multiply(mat)
    mat_inv = mat_mult.inverse()
    mat_pseudoinv = mat_inv.matrix_multiply(mat_t)
    multiplier = [[num] for num in list(self.df.data_dict.values())[1][0]]
    multiplier_mat = mat_pseudoinv.matrix_multiply(Matrix(multiplier))
    for num in range(len(multiplier_mat.elements)):
        if num == 0:
            key = 'constant'
        else:
            key = list(self.df.data_dict.keys())[num-1]