Final 2¶

Date: 6/2

Time: 120 min

SUPER IMPORTANT: Part 3 (implementations) will take the most time and is worth the most points, so go through parts 1 and 2 quickly answering everything that you know how to do, and then go to part 3, and then come back to any questions from parts 1 and 2 that stumped you.

Part 1 (20 points)¶

True/False (2.5 points each). Determine whether the following statements are true or false. If false, then explain why with a single sentence. If true, then you don't have to write any additional justification.

Whenever we update the cluster centers in the k-means algorithm, they always change by some amount. They never stay exactly the same as what they were previously.

If you have sufficiently many data points for the function $f(x) = ax + x^b,$ you can use linear regression to find the missing parameters.

If you have sufficiently many data points for the function $f(x) = \dfrac{1}{\sqrt{ax}} + e^{x-b},$ you can use linear regression to find the missing parameters.

In $k$-fold cross validation, the model is repeatedly trained on $\dfrac{1}{k}$ of the records in the data and tested on $\dfrac{k-1}{k}$ records in the data.

Leave-one-out cross validation is a special case of $k$-fold cross validation.

In the predator-prey model, the two populations oscillate with different frequencies.

In the SIR model, there are no interaction terms.

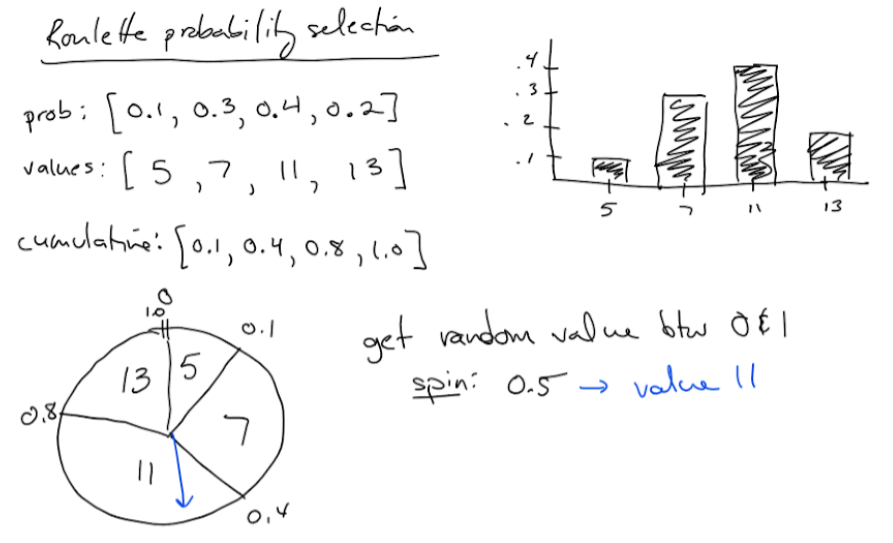

The roulette probability selection algorithm (shown below) assumes that all the probabilities are nonzero. It can fail when one of the probabilities is 0.

Part 2 (20 points)¶

Quick Answer (2.5 points each). You don't have to show any work. Just state the final answer.

K-means can be interpreted as an optimization algorithm that finds the best grouping of records into clusters to minimize some objective function. What is the objective function?

Suppose that while carrying out backwards selection, you notice that when feature A is removed from the data set, the training accuracy increases by 3% but the testing accuracy decreases by 2%. Should you keep feature A or remove it permanently?

What are the z-scores for the list

[1, 2, 3]?Give a concrete example in which a k-nearest neighbors model would perform poorly if the data is not normalized beforehand. Explain why normalizing the data would help the model perform better.

Consider the following words/phrases: flexible, memorize, inflexible, paranoid, high training accuracy, high-degree polynomial, k-nearest neighbors with high value of k.

Which of these words/phrases are associated with overfitting? Which are associated with underfitting?

Suppose that you have a data set of features $A$ and $B$ and target variable $C,$ and you wish to predict $C$ using a linear or logistic regression. You notice that when $A$ is high and $B$ is low, then $C$ is low, and when $B$ is high and $A$ is low, then $C$ is also low. But when $A$ and $B$ are both high, then $C$ is high. What should do you do to the data before you fit the model?

Suppose you're trying to predict the survival probability of patients undergoing surgery using logistic regression. For many patients, you have data about the health of the patient and the context of the surgery, along with whether or not the patient survived the surgery. What technique should you use to fit the logistic regression?

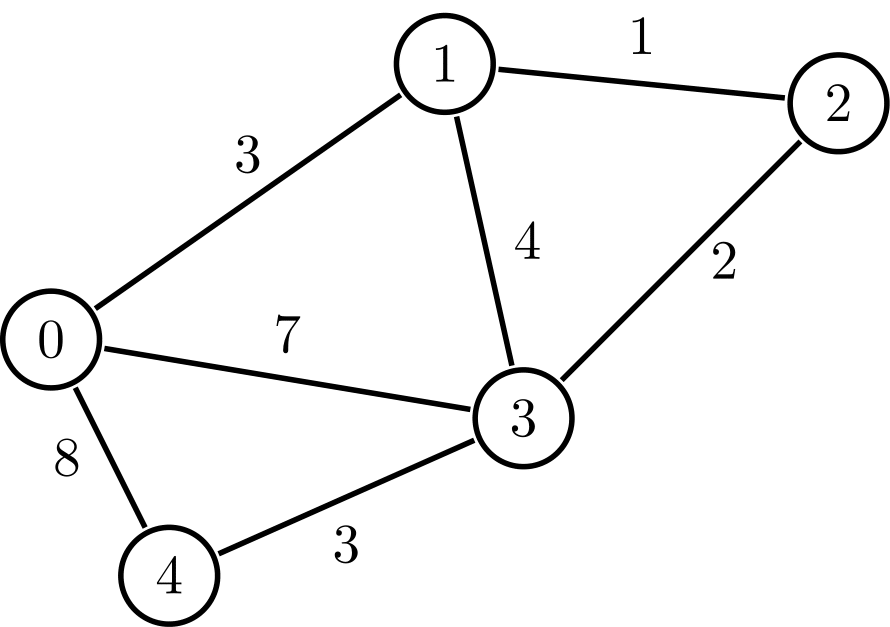

Carry out Dijkstra's algorithm for the following graph, starting with node 0, and continuing until the scores of all the nodes have been finalized. What are the final scores of the nodes?

Part 3 (60 points)¶

Write the following programs. SUBMIT YOUR REPL.IT LINK ALONG WITH A SCREENSHOT OF YOUR CODE AND THE TERMINAL OUTPUT AFTER YOUR RUN IT.

Note: You are free to use numpy, pandas, and scikit-learn in these problems.

(20 points) Write a) a haskell program AND b) a C++ program that print out the sum of the first $N$ perfect cubes. Test your code on the case of $N=10.$ The result should be $3025.$ (You're writing two different programs in two different languages that accomplish the same task.)

(20 points) Write a python program that fits the function $y=a\sin(bx)$ to the data points

[(0.5,2), (0.9,0.9), (1,0.3)]by minimizing the residual sum squares using gradient descent, using the initial guessa=1, b=1. Have your program periodically print out the parameters and the residual sum squares. Choose the step size (in your gradient estimation) and learning rate (in your parameter updates) so that the parameters converge quickly.(20 points) The following data set contains information about some physical characteristics of flowers. There are 3 types of flowers represented in the data set, "Iris-setosa," "Iris-versicolor," and "Iris-virginica."

-

a. (5 points) For each flower, state the mean sepal length, sepal width, petal length, and petal width. (Put this in your Canvas submission.)

b. (15 points) Create a k-nearest-neighbors model that uses the sepal length, sepal width, petal length, and petal width to predict the type of flower. Normalize your data using max-min scaling, split your data into a training half and a testing half, find the optimal value of $k$ (the value that maximizes the testing accuracy), and report the testing accuracy using the optimal value of $k.$

Watch out! The data set is ordered, so be sure that when you split your data into a training half and a testing half, you shuffle the data beforehand. You can do that using

df = df.sample(frac=1).reset_index(drop=True). Otherwise, your training half might have all one type of flower and the testing half might have only the other type of flower.

Quiz 2-6¶

Problem 1 (7 points)¶

a. (1pt) What is the point of feature selection? Why do we care about it; what good does it do for our models?

b. (2pt) Which is more computationally expensive, forward selection or backward selection, and why?

c. (2pt) Suppose that with features A, B, and C, we have

training accuracy = 0.81

testing accuracy = 0.75Then, when feature D is added, we have

training accuracy = 0.80

testing accuracy = 0.76Following the forward selection procedure, should we keep feature D? Why or why not?

d. (2pt) Suppose that with features W, X, Y, and Z, we have

training accuracy = 0.81

testing accuracy = 0.75Then, when feature W is removed, we have

training accuracy = 0.80

testing accuracy = 0.76Following the backward selection procedure, should we keep feature W? Why or why not?

Problem 2 (7 points)¶

This question deals with the following data set, in which each row represents a student in a company's data science training course. The target variable (aptly named "target") is 1 if the student was looking to change jobs after the course.

The data dictionary is here:

enrollee_id : Unique ID for candidate

city: City code

city_ development _index : Developement index of the city (scaled)

gender: Gender of candidate

relevent_experience: Relevant experience of candidate

enrolled_university: Type of University course enrolled if any

education_level: Education level of candidate

major_discipline :Education major discipline of candidate

experience: Candidate total experience in years

company_size: No of employees in current employer's company

company_type : Type of current employer

lastnewjob: Difference in years between previous job and current job

training_hours: training hours completed

target: 0 – Not looking for job change, 1 – Looking for a job changeYou can use pandas and numpy. Feel free to use Google to look up any documentation.

In addition to answering these questions on the quiz, also include a link to your code.

a. (1pt) What was the mean number of training hours?

b. (1pt) What percent of students were looking to change jobs after the course?

c. (1pt) Which city ID had the most students?

d. (1pt) How many students did that city have?

e. (1pt) What is the highest city ID?

f. (1pt) How many students in the data set came from companies with fewer than 10 employees?

g. (1pt) How many students in the data set came from companies with fewer than 100 employees?

Problem 3 (7 points)¶

The following questions are based on the videos assigned by Prof. Wierman:

Thomas Vidick https://www.youtube.com/watch?v=Cwz_tMjzavc

a. (1pt) Describe the difference between bits and qubits.

b. (1pt) What word is used to describe correlations in quantum states?

c. (1pt) What notable thing did Peter Shor do in the context of quantum computing?

d. (1pt) How does the classical factoring algorithm work?

e. (1pt) What is the time complexity of the classical factoring algorithm, and what is the time complexity of the Shor's algorithm?? (linear? logarithmic? polynomial? exponential? factorial?)

f. (1pt) How can you tell if you're on a torus or a sphere, if you can only see your local neighborhood?

g. (1pt) What specific type of particle was referenced as being involved in the topological manipulation of quantum information?

Quiz 2-5¶

Problem 1 (10 points)¶

This question deals with logistic regression (pseudoinverse vs gradient descent). It's possible to get partial credit if you show your work.

Suppose you're given the dataset [(2,1), (3,0)] and you want to fit a logistic regression model $y=\dfrac{1}{1+e^{a+bx}}.$

a. (2pts) Why is it necessary to use gradient descent in this scenario? What would happen if we tried to use the pseudoinverse?

b. (1pt) Starting with initial values $a=1$ and $b=1,$ what is the RSS?

c. (3pts) What are the numerical estimates for the gradients $\dfrac{\partial (RSS)}{\partial a}$ and $\dfrac{\partial (RSS)}{\partial b}$ using a central difference quotient with step size $\delta = 0.1?$

d. (1pt) If you use a learning rate of $\alpha = 0.2,$ what are the updated values of $a$ and $b?$

e. (1pt) What is the new RSS?

f. (2pts) In general, if we run gradient descent for infinitely many iterations, is it guaranteed to give us the best-fitting model? Why or why not?

Problem 2 (7 points)¶

This question deals with the following data set:

https://www.kaggle.com/spscientist/students-performance-in-exams

You can use pandas, numpy, and sklearn. Feel free to use Google to look up any documentation.

Create a file in repl.it that prints out the answers to the following questions. Copy and paste those answers into your quiz submission, and also paste in the link to your repl.it code.

a. (1pt) What are the math scores in the last 3 rows of the data?

b. (1pt) What is the average math score across all students?

c. (1pt) What were the average math scores for students who did vs didn't complete the test preparation course?

d. (1pt) How many categories of parental level of education are there?

e. (3pts) Create dummy variables for test preparation course and parental level of education. Then, fit a linear regression to all the data except for the last 3 rows, and use it to predict the math scores in the last 3 rows of the data. What scores do you get?

Quiz 2-4¶

Date: 3/12

Time: 60 min

Topics: C++, SQL, Neural Nets

Allowed / prohibited resources: This quiz is open-book. You can refer to examples/solutions from previous assignments. But you cannot copy code from the internet nor discuss with classmates. (If you do either of these two things, it will probably show in your code, and you'll get a 0 -- so don't chance it.)

Submission: On this quiz, you'll need to write functions in C++ and Haskell. Write these functions in repl.it, turn in your repl.it links, and also copy/paste your actual repl.it code into the submission box as evidence that your code hasn't been modified since submitting the quiz.

Problem 1 (C++; 33% of grade)¶

Complete the C++ template below.

# include <iostream>

# include <cassert>

// write your function calcSum here

int main() {

std::cout << "Testing...\n";

int matrix[2][3] = {

{ 1, 2, 3 },

{ 4, 5, 6 }

};

int numRows = 2;

int numCols = 3;

// calculate numRows and numCols using a general method.

// DO NOT just set numRows = 2 and numCols = 3.

// hint: use the size of the variable

assert(numRows == 2);

assert(numCols == 3);

int rowSum[numCols];

calcSum(matrix[0], matrix[1], rowSum, numCols);

assert(rowSum[0] == 5);

assert(rowSum[1] == 7);

assert(rowSum[2] == 9);

std::cout << "Success!";

return 0;

}Problem 2 (SQL; 33% of grade)¶

On sqltest.net, copy the following table in:

https://raw.githubusercontent.com/eurisko-us/eurisko-us.github.io/master/files/sql-tables/3.sql

Find Ishmael Smith's average grades in his classes. Order the results from lowest grade to highest grade. As usual, submit the link to your query, and make sure you're using MySQL.

subject avgScore

Math 57.5000

English 80.0000

Science 85.0000Problem 3 (Neural Net; 33% of grade)¶

Suppose that you're given a neural network whose neurons are connected in the following way:

$$ \begin{matrix} & & n_5 \\ & \nearrow & & \nwarrow \\ n_3 & & & & n_4 \\ & \nwarrow & & \nearrow & & \nwarrow \\ & & n_1 & & & & n_2 \\ & & & \nwarrow & & \nearrow \\ & & & & n_0 & & \end{matrix} $$Find $\dfrac{\partial E}{\partial w_{01}}$ under the following conditions:

$y_\textrm{actual}=1$

$a_k=k+11$ for all $k$ (meaning $a_0=11, a_1=12, \ldots$)

$f'_k(i_k) = k+1$ for all $k$ (meaning $f'_0(i_0)=1, f'_1(i_1)=2, \ldots$)

$w_{ab} = a+b$ for all $a,b$ (meaning $w_{24} = 2 + 4 = 6,$ for example)

Note: You don't have to show all your work, but if you want the possibility of partial credit in case you get it wrong, show a couple steps along the way.

Quiz 2-3¶

Date: 2/26

Time: 60 min

Topics: C++, Haskell, SQL, Neural Nets

Allowed / prohibited resources: This quiz is open-book. You can refer to examples/solutions from previous assignments. But you cannot copy code from the internet nor discuss with classmates. (If you do either of these two things, it will probably show in your code, and you'll get a 0 -- so don't chance it.)

Submission: On this quiz, you'll need to write functions in C++ and Haskell. Write these functions in repl.it, turn in your repl.it links, and also copy/paste your actual repl.it code into the submission box as evidence that your code hasn't been modified since submitting the quiz.

Problem 1 (C++; 25% of grade)¶

Write a C++ function calcIndex(n) to find the index of the first Fibonacci number that is greater than an input number $n.$

Recall that the Fibonacci numbers are

$$a_0=0, \, a_1=1, \, a_2=1, \, a_3=2, \, a_4=3, \, a_5=5, \, a_6=8, \, a_7 = 13, \ldots$$(every number is the sum of the previous two).

For example, if $n=8,$ then we'd have calcIndex(8)=7 because $a_7=13$ is the first Fibonacci number that is greater than $n=8.$

Make your code efficient. DON'T re-compute all the preceding values of the Fibonacci sequence each time you need to get the next values.

# include <iostream>

# include <cassert>

// write your function calcIndex here

int main()

{

std::cout << "Testing...\n";

assert(calcIndex(8)==7);

assert(calcIndex(100000)==26);

std::cout << "Success!";

return 0;

}Problem 2 (Haskell; 25% of grade)¶

Write a Haskell function calcGPA that takes a list of letter grades (A, B, C, D, F) and returns the GPA. The conversion is A=4, B=3, C=2, D=1, F=0.

Test your function with the following test-case:

>>> calcGPA(["A", "B", "B", "C", "C", "C", "D", "F"])

2.125

Here, the underlying computation is (4+3+3+2+2+2+1+0)/8Problem 3 (SQL; 25% of grade)¶

On sqltest.net, given the following tables, write a query that gives (first name, last name, age, grade) for all students who got a grade of C or better. Sort the results by grade (highest grade first).

CREATE TABLE age (

id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,

lastname VARCHAR(30),

age VARCHAR(30)

);

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('1', 'Walton', '12');

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('2', 'Sanchez', '13');

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('3', 'Ng', '14');

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('4', 'Smith', '15');

INSERT INTO `age` (`id`, `lastname`, `age`)

VALUES ('5', 'Shenko', '16');

CREATE TABLE name (

id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,

firstname VARCHAR(30),

lastname VARCHAR(30)

);

INSERT INTO `name` (`id`, `firstname`, `lastname`)

VALUES ('1', 'Franklin', 'Walton');

INSERT INTO `name` (`id`, `firstname`, `lastname`)

VALUES ('2', 'Sylvia', 'Sanchez');

INSERT INTO `name` (`id`, `firstname`, `lastname`)

VALUES ('3', 'Harry', 'Ng');

INSERT INTO `name` (`id`, `firstname`, `lastname`)

VALUES ('4', 'Ishmael', 'Smith');

INSERT INTO `name` (`id`, `firstname`, `lastname`)

VALUES ('5', 'Kinga', 'Shenko');

CREATE TABLE grade (

id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY,

firstname VARCHAR(30),

grade VARCHAR(30)

);

INSERT INTO `grade` (`id`, `firstname`, `grade`)

VALUES ('1', 'Franklin', 'A');

INSERT INTO `grade` (`id`, `firstname`, `grade`)

VALUES ('2', 'Sylvia', 'C');

INSERT INTO `grade` (`id`, `firstname`, `grade`)

VALUES ('3', 'Harry', 'D');

INSERT INTO `grade` (`id`, `firstname`, `grade`)

VALUES ('4', 'Ishmael', 'F');

INSERT INTO `grade` (`id`, `firstname`, `grade`)

VALUES ('5', 'Kinga', 'B');Problem 4 (Neural Net; 25% of grade)¶

Suppose that you're given a neural network whose neurons are connected in the following way:

$$ \begin{matrix} & & n_3 \\ & \nearrow & & \nwarrow \\ n_1 & & & & n_2 \\ & \nwarrow & & \nearrow \\ & & n_0 \\ \end{matrix} $$Assume the following activation functions:

$$\begin{align*} f_0(x)&=x+1 \\ f_1(x) &= x+2 \\ f_2(x) &= x \\ f_3(x) &= 2x \end{align*}$$Assume the following weights:

$$\begin{align*} w_{01} &= 1 \\ w_{02} &= 2 \\ w_{13} &= 3 \\ w_{23} &= 4 \end{align*}$$Your task: Compute $a_3$ (the activity of $n_3$) if the input given to $n_0$ is $i_0=2.$

Note: You don't have to show all your work, but if you want the possibility of partial credit in case you get it wrong, show a couple steps along the way.

Quiz 2-2¶

Date: 2/11

Time: 45 min

Topics: C++, Haskell

Allowed / prohibited resources: This quiz is open-book. You can refer to examples/solutions from previous assignments. But you cannot copy code from the internet nor discuss with classmates. (If you do either of these two things, it will probably show in your code, and you'll get a 0 -- so don't chance it.)

Submission: On this quiz, you'll need to write functions in C++ and Haskell. Write these functions in repl.it, turn in your repl.it links, and also copy/paste your actual repl.it code into the submission box as evidence that your code hasn't been modified since submitting the quiz.

Problem 1 (Haskell; 33% of grade)¶

Write a function sumFactors that finds the sum of all the factors of the input number (which you can assume is a positive integer).

- In Haskell, the modulo operator is

a `mod` b

Here is a code template:

-- your function(s) here

main = print(sumFactors 10) -- should come out to 1+2+5+10 = 18Problem 2 (C++; 66% of grade)¶

Consider the recursive sequence $$ a_{n} = a_{n-1} + 2a_{n-2}, \quad a_0=0, a_1=1. $$

The first few terms are

[a0,a1,a2,a3,a4,a5,a6,a7,a8]

[0, 1, 1, 3, 5, 11,21,43,85]Important: A code template is provided at the bottom of the problem.

a. Write a C++ function seqSum to find the sum of the first $N$ terms without repeating any computations.

You'll need to use an array

Examples below:

seqSum(0) = a_0 = 0

seqSum(3) = a_0 + a_1 + a_2 + a_3

= 0 + 1 + 1 + 3

= 5

seqSum(8) = a_0 + a_1 + a_2 + a_3 + a_4 + a_5 + a_6 + a_7 + a_8

= 0 + 1 + 1 + 3 + 5 + 11 + 21 + 43 + 85

= 170b. Write a C++ function extendedSeqSum that finds the sum of the first $N$ terms, and then finds the sum of THAT many terms without repeating any computations.

You can copy your code from

seqSumand then add more onto it.Examples below:

extendedSeqSum(2) = 1

a_2 = 1, so compute the sum through a_1

a_0 + a_1

= 0 + 1

= 1

extendedSeqSum(4) = 21

a_4 = 5, so compute the sum through a_5

a_0 + a_1 + a_2 + a_3 + a_4 + a_5

= 0 + 1 + 1 + 3 + 5 + 11

= 21C++ Template

# include <iostream>

# include <cassert>

// write your functions seqSum and extendedSeqSum here

int main()

{

std::cout << "Testing...\n";

assert(seqSum(0)==0);

assert(seqSum(3)==5);

assert(seqSum(8)==170);

assert(extendedSeqSum(2)==1);

assert(extendedSeqSum(4)==21);

std::cout << "Success!";

return 0;

}Quiz 2-1¶

Date: 1/29

Time: 45 min

Topics: Linear regression, logistic regression, k nearest neighbors, naive bayes, and Gini decision trees, overfitting, underfitting, training datasets, testing datasets, train-test splits.

Question 1¶

Fill in the blanks. Fill in blanks [A1] and [A2] with some parameter of the model, blanks [B1] and [B2] with "high" or "low", and blanks [C1] and [C2] with "overfit" or "underfit". Then, justify your answer.

a. In a polynomial regression model, if you set [A1] too [B1], then the model would [C1] because $\_\_\_\_\_.$

b. In a k nearest neighbors model, if you set [A2] too [B2], then the model would [C2] because $\_\_\_\_\_.$

Question 2¶

Suppose you fit a Gini decision tree on the dataset $$ D = \Big[ (x,y,\textrm{class}) \, \Big| \, x,y \in \mathbb{Z}, \,\, xy \neq 0, \,\, x,y \in [-5,5] \Big] $$ where $$ \textrm{class} = \begin{cases} \textrm{small}, \quad x,y \in [-2,2] \\ \textrm{big}, \quad \textrm{otherwise}. \end{cases} $$ How many splits would there be? Justify your answer. (Tip: sketch out the dataset and the splits)

Question 3¶

Suppose you have a dataset consisting of 100 records. Which of the following pairs of training/testing datasets would lead to overfitting?

I. train on records 1-60, test on records 61-100

II. train on records 1-50, test on records 41-100

III. train on records 1-40, test on records 61-100

IV. train on records 1-20, test on records 41-100

V. train on records 1-80, test on records 81-100

Question 4¶

For each of the following modeling scenarios, you're given a dependent variable that you want to predict, and you need to say what data you'd want to collect and what model you'd want to use.

Give 3 different independent variables for which you'd want to collect data.

Say what model you'd use (linear, polynomial, logistic, k nearest neighbors, naive Bayes, decision tree). Justify why you chose the model you did.

a. Predicting a sports team's probability of winning or losing a game. (You can choose the sport.)

b. Predicting the number of views on a YouTube video during the first week.

c. Predicting whether a patient at the hospital will live or die.

Question 5 (Bonus)¶

Suppose you fit a decision tree on the dataset $$ D = \Big[ (x,y,z,\textrm{class}) \, \Big| \, x,y,z \in \mathbb{Z}, \,\, xyz \neq 0, \,\, x,y,z \in [-5,5] \Big] $$ where $$ \textrm{class} = \begin{cases} \textrm{small}, \quad x,y,z \in [-2,2] \\ \textrm{big}, \quad \textrm{otherwise}. \end{cases} $$ How many splits would there be? Justify your answer.

Final 1¶

Date: 12/17

Time: 120 min

SUPER IMPORTANT: There are 64 short questions on this final. Most of these questions should take no more than 30 seconds to a minute if you know what you're doing. To ensure that you maximize the number of questions you're able to answer, SKIP ANY QUESTIONS THAT AREN'T IMMEDIATELY CLEAR TO YOU and come back to them only after you've gone through all the questions in the test.

Question 1 (30 points)¶

True/False. Determine whether the following statements are true or false. If false, then explain why with a single sentence. If true, then you don't have to write any additional justification.

Two events $A$ and $B$ are independent if $P(A \cap B) = 0.$

Two events $A$ and $B$ are disjoint if $P(A \cup B) = P(A) + P(B).$

If $X \sim \mathcal{U}[a,b],$ then $\textrm E[X] = \dfrac{(b-a)^2}{12}.$

In general, $\textrm{E}[X + Y] = \textrm E[X] + \textrm E[Y].$

In general, $\textrm{Var}[X + Y] = \textrm{Var}[X] + \textrm{Var}[Y].$

In general, $\textrm{Var}[X] = \textrm E[X^2] - \textrm E[X]^2.$

Standard deviation is defined as $\textrm{STD}[X] = \sqrt{\textrm{Var}[X]},$ and because you can't take the square root of a negative number, there are some distributions for which the standard deviation is undefined.

For two integers $a$ and $b,$ the expected value of the continuous uniform distribution $\mathcal{U}[a,b]$ is always equal to the expected value of the discrete uniform distribution $\mathcal{U}\{ a, a+1, \ldots, b\}.$

For two integers $a$ and $b,$ the variance of the continuous uniform distribution $\mathcal{U}[a,b]$ is always equal to the expected value of the discrete uniform distribution $\mathcal{U}\{ a, a+1, \ldots, b\}.$

"Probability mass" and "probability density" are two phrases that mean the same thing.

Because $\displaystyle \int_{-1}^3 \dfrac{1}{8} x \, \textrm{d}x = 1,$ the function $f(x) = \dfrac{1}{8} x$ represents a valid probability distribution on the interval $[-1,3].$

Given a matrix $X$ that is taller than it is wide (i.e. more rows than columns), the pseudoinverse of $X$ will always contain fewer entries than $X.$

Let $y = ax+b$ be the linear regression that is fit to a dataset $\{ (x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n) \}.$ Then for all $i,$ we have $y_i = ax_i + b.$

Given two probability distributions $f$ and $g,$ if the KL divergence between $f$ and $g$ is $0,$ then $f=g.$

Fitting a logistic regression is just like fitting a linear regression, except you have to transform the dependent variable first using the formula $y' = \ln \left( \dfrac{1}{y} \right) - 1.$

Given a distribution $f_k(x)$ with an unknown parameter $k,$ and some data points $\{ x_1, x_2, \ldots, x_n \}$ drawn independently from $f_k,$ the likelihood function is given by $$ P(\{ x_1, x_2, \ldots, x_n \} \, | \, k) = f_k(x_1) f_k(x_2) \cdots f_k(x_n). $$

The function $f(x,y) = \dfrac{1}{2}$ is a valid probability distribution for $(x,y) \in [0,2] \times [0,2].$

The prior distribution represents your belief before observing an event, and the posterior distribution represents your belief after observing the event.

The "posterior distribution" is always equal to the likelihood function.

Bayes' rule states that $P( A \, | \, B) = \dfrac{P(A \cap B)}{P(A)}.$

Any time it's possible to compute something recursively, you should do so, because it's always faster.

When you delete a node from a

LinkedList, all you have to do is set the index of the node toNone.If you push 3 elements onto a stack, and then pop off 2 elements, you end up with the last element you pushed.

If the dependent variable is unbounded, then you should use logistic regression.

Linear regression can only be used to fit a linear function to a dataset.

If a model underfits the data, it will perform well on the training dataset but poorly on the testing (validation) dataset.

If a model overfits the data, it will perform well on the training dataset but poorly on the testing (validation) dataset.

Backtracking (intelligent search) just consists avoiding configurations which have duplicate numbers.

For any graph, there is exactly one shortest path from one node to another node.

In the quicksort algorithm, the pivot must be either the first element in the array or the last element in the array.

Question 2 (30 points)¶

Quick Answer. You don't have to show any work. Just state the final answer.

Suppose you have a biased coin that lands on heads two thirds of the time. If you flip the coin 3 times, what is the probability that it lands on heads exactly 2 times?

Consider the exponential distribution $p(x) = 3e^{-3x}.$ What is $\textrm E[X],$ and what is $\textrm{Var}[X]?$

Suppose $P(X \leq x) = 1-e^{-x}.$ What is $P(2 \leq x \leq 3)?$

Find the constant $c$ such that $f(x) = cx^2$ represents a valid probability distribution on the interval $[0,1].$

Find the constant $a$ such that the function $f(x) = e^x$ represents a valid probability distribution on the interval $[0, a].$

Given a matrix $X,$ what is the formula for the pseudoinverse of $X?$

What is the range of the function $\sigma(x) = \dfrac{1}{1+e^{-x}}?$

Suppose the data $\{ 2, 4, 6 \}$ are sampled from the discrete uniform distribution $\mathcal{U}\{1, 2, 3, \ldots, k\}.$ What is the likelihood $P( \{ 2, 4, 6 \} | k)$ evaluated at $k=2?$

Suppose the data $\{ 2, 4, 6 \}$ are sampled from the discrete uniform distribution $\mathcal{U}\{1, 2, 3, \ldots, k\}.$ What is the likelihood $P( \{ 2, 4, 6 \} \, | \, k)$ evaluated at $k=6?$

Suppose a coin has $P(H)=k.$ If the coin is flipped 3 times and the results are $\textrm{HHH},$ then what is the likelihood function $P(\textrm{HHH} \, | \, k)?$

Suppose a coin has $P(H)=k.$ If the coin is flipped 3 times and the results are $\textrm{HHH},$ then what is the posterior distribution $P( k \, | \, \textrm{HHH})?$

Which is faster, determinant using RREF or determinant using cofactors, and why?

Suppose that, when reducing a matrix $A$ to RREF, you divide the first row by 6, multiply the second row by 3, subtract 4 times the first row from the second row, and swap the first and second rows. If the RREF form is equal to the identity matrix, then what is $\det A?$

Consider the binary number $10101.$ What is the equivalent number in decimal form?

Consider the decimal number $25.$ What is the equivalent number in binary form?

Given that $f'(x) = 2x$ and $f(1) = 2,$ estimate $f(1.5)$ using Euler estimation with 1 step.

Using bubble sort (also known as swap sort), how many swaps are required to sort the list

[4, 1, 5, 3]?Using bubble sort (also known as swap sort), what's the maximum number of swaps required to sort a list of 4 elements?

Given the function $f(x)=x^2-1,$ carry out one iteration of the Newton-Raphson method (i.e. the "zero of tangent line" method) starting with the initial guess $x_0 = 2.$ If you were to carry out many more iterations of the Newton-Raphson method, what value of $x$ would you converge to?

Given the function $f(x)=x^2-1,$ carry out one iteration of the gradient descent starting with the initial guess $x_0 = 2$ and learning rate $\alpha = 0.1.$ If you were to carry out many more iterations of the gradient descent, what value of $x$ would you converge to?

Given the function $f(x,y)=x^2y^2 + 2(y-1)^2,$ approximate the minimum value using grid search with $x=0,1$ and $y=0, 1, 2.$

If you push the elements

a,b, andconto aQueuein that order, and then you runQueue.dequeue(), what elements remain in the queue?Suppose you're given the following edges of a tree in the form

(parent, child):[(b,a), (c,d), (e,b), (f,e), (g,c), (h,f), (f,g), (h,b)]. What is the root of the tree?Suppose that 10/15 emails are spam, 7/10 spam emails have misspelled words, 8/10 spam emails have links, 1/5 non-spam emails has misspelled words, and 2/5 non-spam emails have links. Using a naive Bayes model, classify an email containing misspelled words but no links as spam or non-spam.

When using a k-nearest-neighbors model with $k=1,$ does this overfit or underfit the dataset?

When using a k-nearest-neighbors model with $k$ equal to the size of the dataset, does this overfit or underfit the dataset?

Given the recurrence $$ \textrm{time_complexity}(n) = O(1) + \textrm{time_complexity}(n/2), $$ compute the big-O notation of $\textrm{time_complexity}.$

Suppose a node of a decision tree has 3 instances of class A and 2 instances of class B. What is its Gini impurity?

Suppose a node of a decision tree has 3 instances of class A and 2 instances of class B, and it splits into two nodes: one node with 2 instances of class A and 0 instances of class B, and another node with 1 instance of class A and 2 instances of class B. What is the goodness of split?

What is the time complexity (big-O notation) of bisection search (also known as binary search)?

Question 3 (30 points)¶

Complete the code. Copy and paste the given code template, and then fill in the blanks. Don't change the structure of the template.

Multiply two matrices

AandBto yield a new matrixC. You can use the attributesMatrix.num_rowsandMatrix.num_columnsand the methodsMatrix.get_row(row_index)andMatrix.get_column(column_index)(where both of these methods return a single array that is not nested).def dot_product(arr1, arr2): ... ans = 0 ... for i in range(len(arr1)): ... ... ans += ___ ... return ans . def matrix_multiply(A, B): ... C_elements = [[0 for _ in range(___)] for _ in range(___)] ... for col_index in ___: ... ... for row_index in ___: ... ... ... row = ___.get_row(row_index) ... ... ... column = ___.get_column(column_index) ... ... ... ___ = dot_product(row, column) ... return Matrix(C_elements) . Note: here, we're writing matrix_multiply as a function (not a method). To clarify, observe the following test case: . >>> A = Matrix([[1, 0], [0, 2]]) >>> B = Matrix([[3, 1], [0, 4]]) >>> C = matrix_multiply(A, B) >>> C.elements [[3, 1], [0, 8]]Implement merge sort. You can use the

mergefunction above as a helper function.def merge_sort(arr): ... if len(arr) < ___: ... ... return ___ ... halfway_index = ___ ... left_half = ___ ... right_half = ___ ... sorted_left_half = ___ ... sorted_right_half = ___ ... return ___Implement depth-first and breadth-first traversals on a directed graph whose edges are of the form

(parent_index, child_index). Thedepth_first_traversalfunctoin should return the node indices in the order they were visited.def get_children_indices(node_index, edges): ... children_indices = [] ... for ___: ... ... parent_index = ___ ... ... child_index = ___ ... ... if ___: ... ... ... children_indices.append(___) ... return children_indices . def depth_first_traversal(starting_node_index, edges, visited_node_indices = None): ... if visited_node_indices == None: ... ... visited_node_indices = [] ... ___.append(___) ... children_indices = get_children_indices(starting_node_index, edges) ... for ___: ... ... if ___: ... ... ... depth_first_traversal(___, edges, ___) ... return visited_node_indices . def breadth_first_traversal(starting_node_index, edges): ... queue = ___ ... visited_node_indices = [] ... while ___: ... ... queue += ___ ... ... visited_node_indices.append(___) ... ... queue = ___ ... return visited_node_indicesConstruct a system of differential equations to model the spread of a disease in a population of 100 people. Assume that the disease starts with 1 person, and each pair of individuals encounter each other at a rate of once per day, and the chance of disease transmission is 10% on each encounter. Also, assume that infected individuals recover at a rate of 20% per day and die at a rate of 5% per day.

>>> EulerEstimator( derivatives = { 'susceptible': (lambda t,x: _______ ), 'infected': (lambda t,x: _______ ), 'recovered': (lambda t,x: _______ ), 'dead': (lambda t,x: _______ ) }, initial_point = (0, { 'susceptible': ___, 'infected': ___, 'recovered': ___, 'dead': ___ }) )

Quiz 1-6¶

Date: 11/19

Time: 60 min

Topics: Decision Trees, Probability, K-Nearest Neighbors (and percent accuracy curve), Overfitting / Underfitting

Question 1¶

True/False. Determine whether the following statements are true or false. If false, then justify your answer. If true, then you don't have to write any additional justification.

If $A$ and $B$ are independent events, then $P(A \cap B) = 0.$

If $A$ and $B$ are disjoint events, then $P(A \cup B) = 0.$

When we graph

percent_correct(k)vskfor a k-nearest neighbors model, the result is a straight line.When we graph

percent_correct(k)vskfor a k-nearest neighbors model, the best value ofkis the maximum value of the graph.If a model underfits the data, then the model is not flexible enough to fit the given data; whereas if a model overfits the data, then the model fits the data too flexibly.

The best model will perform the best on both the training set and the testing set. If a model underfits, then it will perform poorly on both the training set and the testing set.

If a model overfits, then it will perform poorly on the training set but well on the testing set.

Question 2¶

Quick Computations. Answer the following questions. Show some intermediate step of work (it doesn’t have to be much, but it should be enough for me to tell what approach you’ve taken to solve the problem).

Let $p(n) = c \left( \dfrac{1}{2} \right)^n$ where $n=0, 1, 2, \ldots.$ If $N \sim p(n),$ then what is the value of $c?$

Continued from above: what is the probability that $N$ is greater than $3?$ Give an exact answer (as a fraction).

Suppose $P(X \leq x) = 1-e^{-x}$ if $x \geq 0$ and $0$ otherwise. What is $P(3 < x < 5)?$ Give an exact answer.

On his clarinet, Squidward can play 5 songs well and 10 songs poorly. If he randomly plays 5 songs in a row, what is the probability that he plays all of the songs well? (Assume he plays a different song each time.) Round your answer to 5 decimal places.

Continued from above: what is the probability that Squidward plays exactly 2 of the songs well? Round your answer to 5 decimal places.

If $P(A) = 0.7 $and $P(B) = 0.8$ and $P(A \cup B) = 0.9,$ then what is $P(A \cap B)?$

Suppose that $$ p(x) = \begin{cases} 0.1 & x=1 \\ 0.2 & x=2 \\ 0.3 & x=3 \\ 0.4 & x=4 \, . \end{cases} $$ What is the range of $X?$

Continued from above: Let $Y = (X-2)^2.$ What is the range of $Y?$

Continued from above: What is the distribution $p(y)?$

Suppose that the probability that I go to bed on time is 0.6; the probability that I wake up on time given that I went to bed on time is 0.8, whereas given that I went to bed late it’s 0.3. What's the probability that I wake up on time?

Continued from above: given that I woke up on time, what's the probability that I went to bed on time?

Suppose you have a node at a decision tree with 5 instances of class A and 15 instances of class B. What’s the impurity at this node?

Continued from above: suppose you split the above node into 2 more nodes, one of which has 1 instance of class A and 9 instances of class B. What’s the goodness of split?

Bonus Question¶

Only do these if you've finished the problems above.

Continued from Quick Computation #1: What is the probability that $N$ is a multiple of 5 (including 0)? Give an exact answer (as a fraction).

Two thirds of the time, I’m here. Half of the time, I’m there. But I’m always here or there. What fraction of the time am I both here AND there?

Develop and explain an algorithm that you could use to automate the process of building a decision tree.

Quiz 1-5¶

Date: 10/30

Time: 60 min

Topics: Big-O Recurrence, Variance-Expectation Identity, Covariance, k-Nearest Neighbors, Regression Modeling, Naive Bayes, Hodgkin Huxley

Question 1¶

Determine whether the following statements are true or false. If false, then justify your answer. If true, then you don't have to write any additional justification.

You can use the following identity to compute variance: $\textrm{Var}[X]^2 = \textrm E[X^2] - \textrm E[X]^2.$

Covariance is defined as $\textrm{Cov}[X,Y] = \textrm E[(X- \textrm E[X])(Y- \textrm E[Y])]$

Two random variables $X$ and $Y$ are independent if and only if $\textrm{Cov}[X,Y] = 0$

Covariance is related to variance as follows: $\textrm{Cov}[X,X] = \textrm{Var}(X^2)$

Suppose you have a dataset D consisting of 5 points and classifications, as follows: $D = \{ A(1,2), B(3,4), B(4,5), B(5,5), A(1,1) \}.$ If you classify a point $(0,0)$ using a k-nearest neighbors model with $k=5,$ then the resulting classification will be $A.$

When performing k-nearest neighbors classification, it can be dangerous to choose too large a value of

k, but there is no danger in choosing too small a value ofk.When fitting a logistic regression model to data where the dependent variable is either 0 or 1, it always makes sense to replace 0 with 0.0001 and 1 with 0.9999.

When fitting a regression model to data, it doesn’t matter whether or not you include the bias term (aka the constant term).

In the Hodgkin Huxley model of a neuron, there are 6 different "channels" by which current can exit or enter the neuron.

If a Hodgkin Huxley neuron is left to rest without any external stimulus, its voltage offset will decay to 0.

Question 2¶

Suppose you have a dataset of 10 phone calls, classified as scam or not scam:

Unusual time, different area code → scam

Unusual time, different area code → scam

Unusual time, different area code → scam

Regular time, same area code → scam

Regular time, same area code → scam

Regular time, same area code → not scam

Regular time, same area code → not scam

Regular time, same area code → not scam

Regular time, different area code → not scam

Unusual time, different area code → not scamYou receive a phone call that is at an unusual time, but that has the same area code. Using a naive Bayes model, classify this phone call as scam or not scam. SHOW YOUR WORK!

Question 3¶

Suppose you have a function that satisfies the recurrence

time_complexity(n) = O(1) + time_complexity(n/3).What is the big-O notation for time_complexity(n)? SHOW YOUR WORK!

Quiz 1-4¶

Date: 10/16

Time: 60 min

Topics: Bayesian Inference, Predator-Prey/SIR Models

Question 1¶

Determine whether the following statements are true or false. If false, then justify your answer. If true, then you don't have to write any additional justification.

In a predator-prey model, the decrease in the predator population will always come slightly after the decrease in the prey population.

In a predator-prey model, the increase in the predator population will always come slightly before the increase in the prey population.

In a predator-prey model, the predator population will oscillate at the same frequency as the prey population.

In the SIR model, the acronym SIR stands for Spread, Inoculate, and Recover.

The SIR model assumes that nobody dies from the disease.

In the SIR model, the infected population always falls exactly as quickly as it rises.

Under some choice of parameters, the SIR model could allow for the infected population to reach two separate peaks at different times.

“Likelihood function” is another name for “posterior distribution”.

Suppose that your prior distribution represents your belief that you’re 100% sure that event $X$ will happen. Then, you observe that event X happened. Then your posterior distribution will be the same as your prior distribution.

The “likelihood function” tells you the probability that you should update your prior distribution.

The prior distribution is one’s initial estimate of how probable an event is, and the posterior distribution is the actual true probability of that event.

When you use a uniform prior distribution, you are being “agnostic” in the sense that you’re giving no preference to any outcomes until you observe them.

If two people perform a Bayesian analysis starting with different priors, but they observe the same events, then their likelihood functions will be the same.

If two people perform a Bayesian analysis starting with different priors, but they observe the same events, then their posterior distributions will be the same.

Let X be a random variable on the interval $[0,1]$. If $\textrm{prior}(x) = 2x$ is your prior distribution, and $\textrm{likelihood}(x) = 3x^2$ is your likelihood function, then $\textrm{posterior}(x) = 6x^3$ is your posterior distribution.

Bonus Question¶

Only do this question if you've finished the problems above.

State a shortcoming of the SIR model and explain how you would modify the SIR model to be more realistic. Using your modification, rewrite the system of equations to problem 50-2 and plot the new result. Explain why the plot is more realistic than the one before.

Quiz 1-3¶

Date: 10/2

Time: 60 min

Topics: Euler Estimation, Joint Distributions, Intelligent Search, Linear Regression

Question 1¶

Determine whether the following statements are true or false. If false, then justify your answer. If true, then you don't have to write any additional justification.

Given a differential equation suppose you start at point $(a,b)$ and step forward using Euler estimation to reach a point $(c,d).$ If you now step backward with the same step size, you will return to the point $(a,b),$ the exact same place you started.

If you want a more accurate Euler estimation, you should decrease the step size.

In an Euler estimation, if you decrease the step size by a factor of $N,$ then the number of computations increases by a factor of $N^2.$ In other words, if you halve the step size in an Euler estimation, then there will be quadruple the number of computations.

The Poisson distribution is given by $p(n) = \dfrac{\lambda^n e^{-\lambda} }{n!},$ where $n = 0, 1, 2, 3, \ldots .$ To compute the expected value of the Poisson distribution, you use a sum (not an integral).

Given that $(x_1, x_2, ..., x_N) \sim p,$ computing $\textrm E[X_1]$ requires $N-1$ integrals.

In the Space Empires game, whenever a Player decides to move its ship, it should update the Board directly.

Suppose you are given 100 distinct data points of the form $(x,y)$ that lie on the function $y = ax + b\sin(x) + c(2^x) + d.$ To fit the parameters $a,b,c,d$ of the model, you can use a linear regression.

Suppose you are given 100 distinct data points of the form $(x,y)$ that lie on the function $y = x^a + \sin(bx) + c(2^x) + d.$ To fit the parameters $a,b,c,d$ of the model, you can use a linear regression.

To solve a $3 \times 3$ magic square, you must check $9! = 362880$ possible configurations.

Question 2¶

For the following questions, consider the continuous probability distribution $p(x,y) = x + y$ over the interval $0 < x \leq 1$ and $0 < y \leq 1.$ Also, SHOW YOUR WORK!

Show that $p(x,y)$ is a valid probability distribution.

Let $(X,Y) \sim p.$ Compute $\textrm E[X]$ and $\textrm E[Y].$ (You can use "by similar reasoning..." to justify your answer for $\textrm E[Y].$)

Let $(X,Y) \sim p.$ Compute $\textrm{Var}[X]$ and $\textrm{Var}[Y].$ (You can use "by similar reasoning..." to justify your answer for $\textrm{Var}[Y].$)

Let $(X,Y) \sim p.$ Compute the probability that a randomly selected point $(X,Y)$ lies in the interval $0 < x \leq 0.5$ and $0 < y \leq 1.$

Bonus Question¶

Only do this question if you've finished the problems above.

The points

$$ (2, 27.0154), (3, 64.0912), (4, 159.817) $$lie on a function of the form

$$ y = \ln(x^a) + e^{x + b} + \sqrt{cx + c}. $$Show how this problem can be phrased as a linear regression problem, and then use linear regression to solve for the missing parameters $a, b, c.$

You can use your LinearRegressor class if you'd like. Or, you can use an online matrix inverse/multiplication calculator like Wolfram Alpha or Symbolab. It's possible to get partial credit on this bonus problem even if you don't solve it fully.

Quiz 1-2¶

Date: 9/18

Time: 60 min

Question 1¶

Determine whether the following statements are true or false. Justify your answers either way.

To fit a logistic regression from X to Y, all you have to do is fit a linear regression from X to Y with no additional processing, and then plug the output of the linear regression into a sigmoid function.

Consider the sigmoid function $f(x) = \dfrac{M}{1+e^{-x}}.$ The domain of this function is $(-\infty, \infty)$ and the range of this function is $(0, M).$

To fit a logistic regression using the sigmoid function $f(x) = \dfrac{1}{1+e^{-x}},$ the prediction variable must be transformed as $y \to \ln \left( \dfrac{1}{y} - 1 \right).$

To fit a logistic regression using the sigmoid function $f(x) = \dfrac{M}{1+e^-x},$ the prediction variable must be transformed as $y \to \ln \left( \dfrac{1}{y} - M \right).$

Logistic regressions have about the same computational expense as linear regressions. That is to say, fitting a logistic regression involves about the same number of operations as fitting a linear regression.

Suppose that you use a regression model to predict the rating of a sandwich with 2 slices of roast beef and mayonnaise. If there is a row in your dataset corresponding to 2 slices of beef and mayonnaise, then your regression model will return the rating that was in that row.

A tree is a special case of a directed graph.

When computing the shortest path between two nodes in a graph, you will always visit all nodes in the graph, and it doesn't matter whether the graph is directed or undirected.

The algorithm for computing the shortest path between two nodes in a directed graph is significantly different from the algorithm for computing the shortest path between two nodes in an undirected graph.

Consider the uniform distribution on the interval $[a,b].$ The probability function is given by $p(x) = \dfrac{1}{b-a}$ for $x \in [a,b],$ and $p(x) = 0$ elsewhere.

Consider the uniform distribution on numbers $\{a, a+1, a+2, \ldots, b\}.$ The probability function is given by $p(x) = \dfrac{1}{b-a}$ for $x \in \{a, a+1, a+2, \ldots, b\},$ and $p(x) = 0$ elsewhere.

Suppose that some data is collected, and it is suspected to have come from a distribution with parameter $k.$ The likelihood function $\mathcal{L}(k \, | \, \textrm{data})$ is equal to the probability function of $k,$ provided that the assumption about the data distribution is true.

Question 2¶

Suppose you toss a tetrahedral (4-sided) die 3 times and get the results $\{4, 4, 4\}.$ The die appears to be biased, but how sure can you be?

Let $P(N)$ be the probability that the die lands on the number $N.$ Let $P(4) = k$ and assume that $P(1) = P(2) = P(3).$ Under this assumption, what is the probability distribution $p_k (x)?$ Your answer will be a piecewise function.

Compute the likelihood function $\mathcal{L}(k \, | \, \{4, 4, 4\}).$

Compute the probability distribution $p(k)$ for the parameter $k.$

What is the probability that the die is biased? In other words, what is $P(k > \_\_\_),$ where the blank represents the probability of rolling 4 on an unbiased tetrahedral die?

Question 3¶

Only do this question if you've finished all the questions above.

Continuing the tetrahedral die question... You think the die is biased, but you won't say you're "sure" of it until your chance of being wrong is as low as your chance of being killed by lightning. If the chance of being killed by lightning is about 1 in 6 million, then how many consecutive rolls of 4 would you need in order to be "sure" that the die is biased?

Quiz 1-1¶

Date: 9/4

Time: 60 min

Question 1¶

Consider the following dataset of points $(x,y)\mathbin{:}$

(0,0.1), (1,0.2), (2,0.5)a. Compute a linear regression model $y = ax+b$ by hand. Show your work, and make sure you write down the final model with the coefficients plugged in.

b. Compute a logistic regression model $y = \dfrac{1}{1+e^{ax+b}}$ by hand. Show your work, and make sure you write down the final model with the coefficients plugged in.

Check: Did you show your work? No work = no points.

Question 2¶

Consider the function given by $$ p(x) = \begin{cases} e^{-x} & x \geq 0 \\ 0 & x < 0 \, . \end{cases} $$

a. Is $p(x)$ a valid probability distribution? Why or why not?

b. If $X \sim p,$ then what is $P(0 < X <= 2)?$ Show your work.

c. What is $\textrm E[X]?$ Show your work.

d. What is $\textrm{Var}[X]?$ Show your work.

Check: Did you show your work? No work = no points.